30-Day Proven AI Voice Fraud and Deepfake Payments Defense

AI voice fraud and deepfake payments are no longer “future risks” — they are in live incident logs for finance and healthcare today. Deepfake video and voice scams have already driven multi-million-dollar wire transfers off a single “urgent” call or conference.

At Pentest Testing Corp, we’re seeing AI voice fraud and deepfake payments converge at a dangerous front-office layer: call centers, billing hotlines, pharmacy helpdesks, and finance shared services. Attackers don’t need to hack your core banking or EHR first — they just need a believable cloned voice, a plausible story, and a weak process.

This guide gives you a 30-day, fix-first sprint to harden that layer against AI voice fraud and deepfake payments, with concrete steps for:

- Finance teams (wire changes, refunds, account updates)

- Healthcare teams (prescription changes, record access, telehealth identity)

- Compliance teams (HIPAA, PCI DSS 4.0, SOC 2, ISO 27001, GDPR DPIAs)

You’ll see how to encode high-risk call flows as data, enforce multi-channel verification, simulate AI vishing attacks safely, and ship audit-ready evidence that reuses the same sprint across multiple frameworks.

Why AI Voice Fraud and Deepfake Payments Exploded in 2025–2026

Several trends converged to make AI voice fraud and deepfake payments a board-level risk:

- Commodity voice cloning. Modern tools can clone a recognizable voice from just a few seconds of reasonably clean audio — the kind you’ll find in earnings calls, webinars, podcasts, or YouTube interviews.

- High-impact case studies. Deepfake video and audio scams have pushed staff to authorize large wire transfers, including notable multi-million-dollar incidents that started from a single “urgent” call.

- Contact-center dependency. Finance and healthcare organizations route more critical decisions through remote channels: call centers, outsourced billing, and telehealth. That moves risk from internal desks to phone headsets.

- Rising synthetic voice fraud in insurance and healthcare. Synthetic voice attacks against insurers alone have surged (one study noted a 475% increase in such attacks in a year), highlighting how quickly attackers adapt to new channels.

- Law-enforcement warnings. Agencies are warning about AI-generated kidnapping calls and government-official impersonation using synthetic voices, making it harder for staff and the public to distinguish real from fake.

The lesson: you can’t train people to “hear” the difference anymore. You have to assume AI voice fraud and deepfake payments are viable against your organization and build verification and evidence around that assumption.

Step 1 (Days 1–7): Identify High-Risk AI Voice & Payment Request Flows

The 30-day defense sprint starts with mapping your “high-risk request” flows — the places where a single call, chat, or ticket can move money or patient safety.

Finance: Typical AI Voice Fraud & Deepfake Payment Targets

Focus first on flows where a cloned CFO, vendor, or customer could convincingly push staff to move funds:

- Supplier/vendor bank detail changes

- Urgent, confidential wire or SWIFT transfers

- Large refund approvals or charge-back overrides

- Creation of new payees or beneficiaries

- Changes to payout methods (e.g., card → bank transfer)

- Over-the-phone “exceptions” to standard KYC or AML checks

Healthcare: High-Risk Flows for Deepfake Voice & Prescription Changes

In healthcare environments, AI voice fraud can directly hit patient safety and PHI:

- Prescription changes or refill overrides over the phone

- Verbal orders (e.g., “telephone orders” for medications or tests)

- Patient identity verification for telehealth intake

- Requests to email or fax medical records to “new” addresses

- Insurance policy or payment detail changes driven by a phone call

Model High-Risk Flows as Data (Not Just Diagrams)

Instead of burying this in a PowerPoint, encode high-risk flows as structured data your security, fraud, and engineering teams can reuse.

Example high_risk_flows.yaml:

```yaml
# high_risk_flows.yaml
- id: FIN-WIRE-001
  channel: phone
  function: "AP / Treasury"
  flow_name: "Change supplier bank details"
  business_unit: "Finance"
  must_verify: true
  verification_methods:
    - callback_to_registered_number
    - out_of_band_otp
    - dual_approval_in_erp
  max_single_amount: 250000
  evidence_tags: ["PCI", "SOX", "fraud"]
  notes: "Only accept requests referencing open POs or signed contracts."

- id: HC-PRESCR-001
  channel: phone
  function: "Clinic / Pharmacy"
  flow_name: "Modify high-risk prescription"
  business_unit: "Healthcare"
  must_verify: true
  verification_methods:
    - provider_callback_to_known_number
    - patient_record_cross_check
    - script_phrase_challenge
  evidence_tags: ["HIPAA", "safety"]
  notes: "No changes solely based on a call from an unknown number."
```

Now wire that into a simple decision helper:

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

import yaml  # PyYAML


@dataclass
class HighRiskFlow:
    id: str
    channel: str
    flow_name: str
    must_verify: bool
    verification_methods: List[str]
    evidence_tags: List[str]
    # Optional keys that also appear in high_risk_flows.yaml; without these
    # fields HighRiskFlow(**item) raises TypeError on the sample file.
    function: str = ""
    business_unit: str = ""
    max_single_amount: Optional[int] = None
    notes: str = ""


def load_flows(path: str = "high_risk_flows.yaml") -> List[HighRiskFlow]:
    with open(path, "r", encoding="utf-8") as f:
        raw = yaml.safe_load(f)
    return [HighRiskFlow(**item) for item in raw]


def classify_request(event: Dict, flows: List[HighRiskFlow]) -> Dict:
    """
    event: {
        "channel": "phone",
        "flow_name": "Change supplier bank details",
        "amount": 120000,
        "agent_id": "AGT-123",
    }
    """
    for flow in flows:
        if flow.channel == event["channel"] and flow.flow_name == event["flow_name"]:
            return {
                "flow_id": flow.id,
                "must_verify": flow.must_verify,
                "verification_methods": flow.verification_methods,
                "evidence_tags": flow.evidence_tags,
            }
    # No matching high-risk flow: this helper asks for no extra verification.
    return {
        "flow_id": None,
        "must_verify": False,
        "verification_methods": [],
        "evidence_tags": [],
    }


if __name__ == "__main__":
    flows = load_flows()
    event = {
        "channel": "phone",
        "flow_name": "Change supplier bank details",
        "amount": 120000,
        "agent_id": "AGT-123",
    }
    decision = classify_request(event, flows)
    print(decision)
```

Deliverables for Days 1–7

- A reviewed high_risk_flows.yaml covering finance and healthcare calls.

- A lightweight classifier (like the one above) integrated into your call-handling or case-management system.

- Each flow is tied to your Risk Assessment register with explicit risks (“AI voice fraud and deepfake payments on vendor bank changes”) and owners — this maps directly into your Risk Assessment Services for HIPAA, PCI DSS, SOC 2, ISO 27001 and GDPR.
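Before the flow file is treated as "reviewed", a small consistency check can catch obvious gaps. The sketch below is one possible approach, not part of the original sprint: the function name `validate_flows` and the specific rules (required keys, unique IDs, at least one verification method when `must_verify` is true) are assumptions chosen for illustration. It works on already-parsed entries, so it does not depend on any YAML library.

```python
from typing import Dict, List

# Keys every entry is assumed to need, based on the sample high_risk_flows.yaml.
REQUIRED_KEYS = {
    "id", "channel", "flow_name", "must_verify",
    "verification_methods", "evidence_tags",
}


def validate_flows(raw_flows: List[Dict]) -> List[str]:
    """Return human-readable problems found in parsed flow entries."""
    problems: List[str] = []
    seen_ids = set()
    for i, flow in enumerate(raw_flows):
        flow_id = flow.get("id")
        label = flow_id if flow_id is not None else f"entry #{i}"
        missing = sorted(REQUIRED_KEYS - flow.keys())
        if missing:
            problems.append(f"{label}: missing keys {missing}")
        if flow_id is not None:
            if flow_id in seen_ids:
                problems.append(f"{label}: duplicate id")
            seen_ids.add(flow_id)
        # A flow that demands verification but lists no method cannot be enforced.
        if flow.get("must_verify") and not flow.get("verification_methods"):
            problems.append(f"{label}: must_verify is true but no verification_methods")
    return problems
```

An empty return value means the file passed these basic checks; anything else can be attached to the review ticket as a to-do list.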

Step 2 (Days 8–15): Implement Multi-Channel Verification for High-Risk Flows

Once you know where AI voice fraud and deepfake payments can hit, you upgrade verification for those flows — not every interaction.

For each must_verify: true flow, enforce a combination of:

- Call-back to a registered number on file, not the one that just called.

- Out-of-band OTP sent to a previously verified contact method.

- Dual approval in your ERP, banking, or prescribing system.

- Known-device or known-channel checks (e.g., approvals only from enrolled SSO accounts or secure portals).
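As an illustration of the out-of-band OTP item in the list above, here is a minimal sketch of issuing and checking a one-time code. The names `OtpChallenge`, `issue_otp`, and `check_otp` are hypothetical, and the 5-minute lifetime is an assumption; delivery over SMS, email, or an app is out of scope. The code uses Python's `secrets` module for generation and a constant-time comparison for checking.

```python
import hmac
import secrets
import time
from dataclasses import dataclass
from typing import Optional


@dataclass
class OtpChallenge:
    code: str
    expires_at: float
    attempts_left: int


def issue_otp(length: int = 6, ttl_seconds: int = 300, max_attempts: int = 3,
              now: Optional[float] = None) -> OtpChallenge:
    """Create a numeric code with an expiry time and a limited attempt budget."""
    now = time.time() if now is None else now
    code = "".join(secrets.choice("0123456789") for _ in range(length))
    return OtpChallenge(code=code, expires_at=now + ttl_seconds,
                        attempts_left=max_attempts)


def check_otp(challenge: OtpChallenge, submitted: str,
              now: Optional[float] = None) -> bool:
    """Return True only for a correct, unexpired code with attempts remaining."""
    now = time.time() if now is None else now
    if now > challenge.expires_at or challenge.attempts_left <= 0:
        return False
    challenge.attempts_left -= 1
    ok = hmac.compare_digest(submitted.encode(), challenge.code.encode())
    if ok:
        challenge.attempts_left = 0  # single use: a passed code cannot be replayed
    return ok
```

The `now` parameter exists only so the expiry logic can be exercised in tests without waiting; production callers would normally omit it.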

Example: Verification Policy as Code

You can combine high-risk flow metadata with a simple policy engine:

```python
from typing import Dict

VERIFICATION_POLICY = {
    "callback_to_registered_number": {
        "required": True,
        "timeout_seconds": 1800,
    },
    "out_of_band_otp": {
        "required": True,
        "min_length": 6,
        "max_attempts": 3,
    },
    "dual_approval_in_erp": {
        "required": True,
        "min_approvers": 2,
    },
}


def enforce_policy(decision: Dict, event: Dict) -> Dict:
    """
    decision: output from classify_request()
    event: enriched with call metadata, customer account id, etc.
    """
    required = []
    for method in decision["verification_methods"]:
        policy = VERIFICATION_POLICY.get(method)
        if policy is None:
            # Fail closed: a method listed on the flow but missing from the
            # policy table (e.g. the healthcare methods) is still required.
            policy = {"required": True}
        if policy["required"]:
            required.append({"method": method, "policy": policy})
    return {
        "flow_id": decision["flow_id"],
        "required_checks": required,
        # Never auto-approve a must_verify flow, even if no checks resolved.
        "can_auto_approve": not required and not decision["must_verify"],
    }
```

Example: OTP Verification + Evidence Logging

Treat verification events as compliance evidence, not just fraud controls:

```python
import hmac
import json
from datetime import datetime, timezone
from pathlib import Path
from typing import Dict

EVIDENCE_DIR = Path("evidence/voice_fraud")


def log_verification_result(flow_id: str, event_id: str, result: Dict) -> None:
    EVIDENCE_DIR.mkdir(parents=True, exist_ok=True)
    record = {
        # Timezone-aware UTC timestamp (datetime.utcnow() is deprecated).
        "ts": datetime.now(timezone.utc).isoformat(),
        "flow_id": flow_id,
        "event_id": event_id,
        "agent_id": result["agent_id"],
        "verification_methods": result["verification_methods"],
        "status": result["status"],  # "passed" | "failed" | "abandoned"
        "notes": result.get("notes", ""),
    }
    with (EVIDENCE_DIR / f"{event_id}.json").open("w", encoding="utf-8") as f:
        json.dump(record, f, indent=2)


def verify_otp(submitted: str, expected: str) -> bool:
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(submitted.encode(), expected.encode())


# Example usage inside your call-handling app.
# event is expected to carry "flow_id", "event_id", and "agent_id".
def handle_high_risk_request(event: Dict, expected_otp: str, submitted_otp: str) -> bool:
    ok = verify_otp(submitted_otp, expected_otp)
    status = "passed" if ok else "failed"
    result = {
        "agent_id": event["agent_id"],
        "verification_methods": ["out_of_band_otp"],
        "status": status,
        "notes": "OTP via SMS to registered billing contact",
    }
    log_verification_result(event["flow_id"], event["event_id"], result)
    return ok
```

Deliverables for Days 8–15

- Verification policies enforced in your telephony/CRM stack for all must_verify flows.

- Logs and JSON evidence showing which verification methods were used per high-risk event, ready to drop into PCI DSS 4.0, SOC 2, ISO 27001, and HIPAA audit binders.

- Exceptions process with explicit approvals for rare “break glass” scenarios.
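The "break glass" exceptions process in the last item could be backed by a small approval check. This is a sketch under stated assumptions: the `BreakGlassRequest` shape, the two-approver minimum, and the rule that the requester cannot approve their own exception are all illustrative choices, not requirements taken from any framework.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class BreakGlassRequest:
    flow_id: str
    requested_by: str
    approvers: List[str]
    justification: str


def review_break_glass(req: BreakGlassRequest, min_approvers: int = 2) -> Dict:
    """Approve only with enough distinct approvers and a written justification."""
    # The requester never counts as an approver of their own exception.
    distinct = {a for a in req.approvers if a != req.requested_by}
    reasons: List[str] = []
    if len(distinct) < min_approvers:
        reasons.append(
            f"needs {min_approvers} distinct approvers, got {len(distinct)}"
        )
    if not req.justification.strip():
        reasons.append("missing justification")
    return {"flow_id": req.flow_id, "approved": not reasons, "reasons": reasons}
```

The returned dictionary can be written out as evidence the same way verification results are, so every exception leaves a record of who approved it and why.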

Step 3 (Days 16–25): Train Staff and Run AI Vishing / Deepfake Simulations

Technology alone won’t stop AI voice fraud and deepfake payments. Your agents, pharmacists, and finance staff need muscle memory for saying “no” — even when the voice sounds exactly like an executive or physician.

Build Simple, Scripted Playbooks

For each high-risk flow, write two scripts:

- Attack script – what an AI voice fraudster might say.

- Defense script – how your agent should respond, including verification steps.

Example defense script (for vendor bank change):

Agent: "Thanks for calling. Because this is a bank detail change, I’m required to verify this request using the contact details we have on file, not the number you’re calling from. I’ll end this call now and call you back on the registered number in our vendor master. We’ll complete the change only after that verification and a second approval in our system."

Run Safe AI Voice Fraud Simulations (Red-Team Style)

You can run controlled simulations without teaching anyone how to abuse AI voice tools:

- Use your internal red-team or a trusted pentest partner to place scripted calls that mimic AI voice fraud patterns (urgent tone, spoofed caller ID, high-value requests).

- Use pre-recorded or synthetic voices approved by legal/compliance; keep scenarios focused on process, not on tricking staff with specific tools.

- Log how often agents:

  - Follow verification playbooks

  - Escalate suspicious requests

  - Bypass controls “just this once”

Example: capturing simulation outcomes as data:

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from pathlib import Path

SIM_DIR = Path("evidence/voice_fraud_sims")


@dataclass
class SimulationResult:
    sim_id: str
    flow_id: str
    agent_id: str
    outcome: str  # "blocked", "escalated", "bypassed"
    reasons: str
    ts: str


def record_simulation(sim_id: str, flow_id: str, agent_id: str,
                      outcome: str, reasons: str) -> None:
    SIM_DIR.mkdir(parents=True, exist_ok=True)
    result = SimulationResult(
        sim_id=sim_id,
        flow_id=flow_id,
        agent_id=agent_id,
        outcome=outcome,
        reasons=reasons,
        # Timezone-aware UTC timestamp (datetime.utcnow() is deprecated).
        ts=datetime.now(timezone.utc).isoformat(),
    )
    with (SIM_DIR / f"{sim_id}.json").open("w", encoding="utf-8") as f:
        json.dump(asdict(result), f, indent=2)
```

Deliverables for Days 16–25

- Documented playbooks and scripts for all high-risk flows.

- At least one simulation cycle per high-risk flow, with evidence of outcomes.

- Training updates and short refreshers for staff, based on where simulations revealed process gaps.
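To see where simulations revealed process gaps, the recorded outcomes can be rolled up per flow. The following is a rough sketch: `summarize_simulations` is a hypothetical helper, it assumes each record has the `flow_id` and `outcome` fields used above, and the rule "any bypass means the flow needs a retest" is an illustrative threshold rather than a recommendation from a standard.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable


def summarize_simulations(results: Iterable[Dict]) -> Dict[str, Dict]:
    """Aggregate simulation outcomes per flow and flag flows to retest."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for record in results:
        counts[record["flow_id"]][record["outcome"]] += 1

    summary: Dict[str, Dict] = {}
    for flow_id, outcome_counts in counts.items():
        total = sum(outcome_counts.values())
        bypassed = outcome_counts["bypassed"]
        summary[flow_id] = {
            "total": total,
            "bypassed": bypassed,
            "bypass_rate": bypassed / total,
            # Assumption: a single bypass is enough to schedule a retest.
            "needs_retest": bypassed > 0,
        }
    return summary
```

Feeding it the loaded JSON records from the simulation evidence directory gives a short table that training leads can use to prioritize refreshers.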

Step 4 (Days 26–30): Retest, Close Gaps, and Attach Evidence to Audits

The last week is about closing the loop and turning AI voice fraud and deepfake payments defenses into repeatable governance:

- Re-run simulations for flows that failed the first time; confirm that verification and escalation behaviors improved.

- Export evidence from your telephony, CRM, and verification systems:

  - Call-back logs

  - OTP verification logs

  - Dual-approval records

  - Simulation result JSON from your red-team drills

- Map each piece of evidence to your control framework(s):

  - HIPAA: risk analysis, workforce training, person/entity authentication

  - PCI DSS 4.0: authentication, anti-phishing training, incident response, logging

  - SOC 2: CC6 (access control), CC7 (system operations: monitoring and incident detection), CC9 (risk mitigation)

  - ISO 27001: Annex A access control, communications security, operations security

  - GDPR DPIAs: safeguards for fraud, identity, and payment-related processing

You can script a simple evidence index for your auditors:

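```python
import json
from pathlib import Path


def build_evidence_index(evidence_root: str = "evidence") -> None:
    root = Path(evidence_root)
    index_path = root / "index.json"
    index = []
    for path in sorted(root.rglob("*.json")):
        if path == index_path:
            continue  # skip the index written by a previous run
        rel = path.relative_to(root).as_posix()
        try:
            with path.open("r", encoding="utf-8") as f:
                data = json.load(f)
        except (OSError, json.JSONDecodeError):
            data = {}
        if not isinstance(data, dict):
            data = {}  # only JSON objects carry flow_id / evidence_tags
        index.append({
            "file": rel,
            "flow_id": data.get("flow_id"),
            # Empty unless the evidence record itself stores evidence_tags.
            "tags": data.get("evidence_tags", []),
        })
    with index_path.open("w", encoding="utf-8") as f:
        json.dump(index, f, indent=2)


if __name__ == "__main__":
    build_evidence_index()
```

Deliverables for Days 26–30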

- Documented before/after state of high-risk flows.

- Evidence index that can be reused across HIPAA, PCI DSS 4.0, SOC 2, ISO 27001, and GDPR DPIAs without re-running the sprint from scratch.

- A short executive summary explaining the 30-day AI voice fraud and deepfake payments sprint and its impact.

How This 30-Day Sprint Maps to HIPAA, PCI DSS 4.0, SOC 2, ISO 27001 & GDPR

Rather than invent new processes for each audit, reuse the same AI voice fraud sprint across frameworks:

- HIPAA (Security Rule)

  - Risk analysis & management: high-risk call flows documented in your risk register.

  - Person / entity authentication: multi-channel verification before acting on voice-only requests.

  - Workforce training: simulation results and updated playbooks as training evidence.

- PCI DSS 4.0

  - Social-engineering and phishing resilience for staff handling cardholder-related payments.

  - Strong authentication and approval flows for card-related refunds, charge-backs, and payouts.

  - Logging and monitoring of high-risk financial operations via telephony and portals.

- SOC 2

  - CC6 (Access control): dual-approval and verification rules for high-risk changes and payments.

  - CC7 (Monitoring): simulation logs and fraud detection events feeding monitoring processes.

  - CC9 (Risk): documented AI voice fraud and deepfake payments risks tied to mitigation actions.

- ISO 27001

  - Annex A controls for access control (A.5/A.8), operations security, and supplier relationships.

  - Risk treatment plans referencing your 30-day sprint as a concrete mitigation.

- GDPR & DPIAs

  - Article 32 security of processing: safeguards against impersonation and payment fraud involving personal data.

  - DPIA records including high-risk flows where synthetic voice could lead to identity theft or financial loss.
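The mapping above can also be kept as data so one evidence file answers questions from several auditors. The sketch below is illustrative only: the evidence-type names (`verification_log`, `simulation_result`, `risk_register_entry`) are hypothetical, and the control labels simply paraphrase the list above rather than quoting requirement numbers from any standard.

```python
from typing import Dict, List

# Hypothetical evidence types mapped to the framework areas named in this guide.
CONTROL_MAP: Dict[str, Dict[str, List[str]]] = {
    "verification_log": {
        "HIPAA": ["Person/entity authentication"],
        "PCI DSS 4.0": ["Strong authentication and approval flows",
                        "Logging and monitoring"],
        "SOC 2": ["CC6"],
    },
    "simulation_result": {
        "HIPAA": ["Workforce training"],
        "PCI DSS 4.0": ["Social-engineering and phishing resilience"],
        "SOC 2": ["CC7"],
    },
    "risk_register_entry": {
        "HIPAA": ["Risk analysis & management"],
        "SOC 2": ["CC9"],
        "ISO 27001": ["Risk treatment plan"],
        "GDPR": ["DPIA record", "Article 32 security of processing"],
    },
}


def controls_for(evidence_type: str) -> Dict[str, List[str]]:
    """Return the framework areas one evidence type supports (empty if unknown)."""
    return CONTROL_MAP.get(evidence_type, {})


def frameworks_covered(evidence_types: List[str]) -> List[str]:
    """List, alphabetically, every framework touched by the given evidence types."""
    covered = set()
    for evidence_type in evidence_types:
        covered.update(controls_for(evidence_type))
    return sorted(covered)
```

Running `frameworks_covered` over the evidence types you actually collected gives a quick view of which frameworks still lack supporting evidence from the sprint.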

Using Pentest Testing Corp’s Free Security Tools in Your Sprint

Even though this sprint is focused on AI voice fraud and deepfake payments, the same attackers often pair voice scams with web or API weaknesses (e.g., getting an agent to log into a vulnerable portal and push a payment).

That’s where our Free Website Vulnerability Scanner helps:

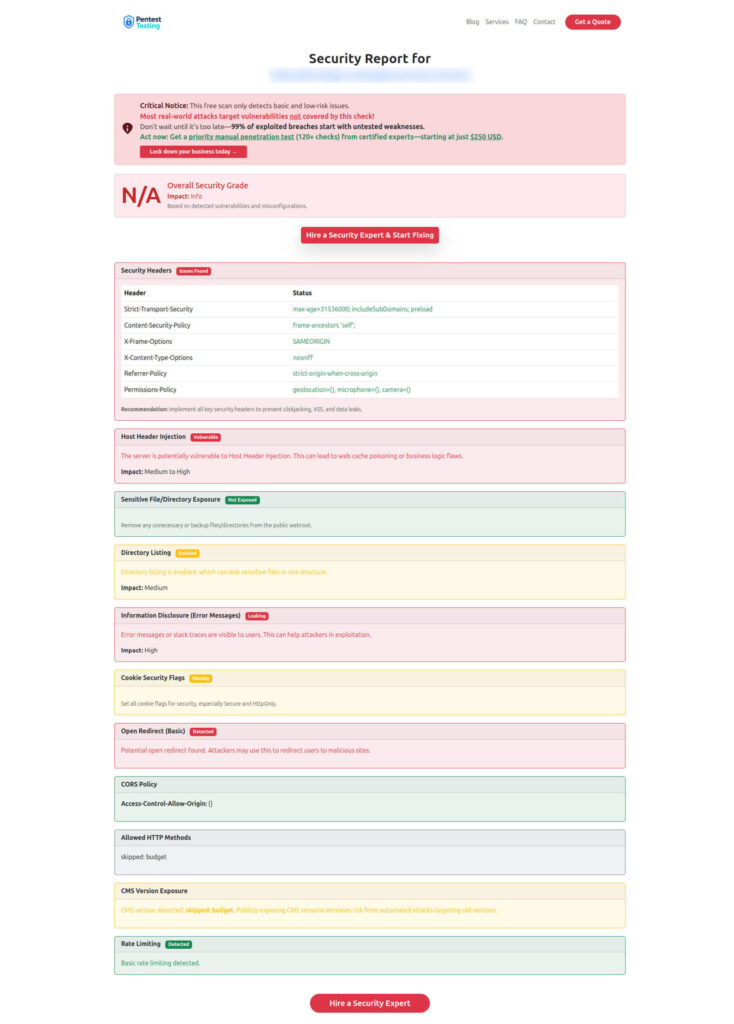

- Quickly scan your payment portals, vendor portals, and patient portals for missing HTTP security headers, exposed files, weak cookies, and other common issues.

- Use scan results to harden the web surface that agents rely on while handling high-risk calls.

- Attach before/after scan reports as technical evidence alongside your call-flow evidence.
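For teams that want a quick local check between scans, the missing-header part of that list can be approximated in a few lines. This is a simplified sketch and not how the scanner itself works: the header set below is an assumed baseline of commonly recommended response headers, and the function only inspects a header mapping you have already fetched.

```python
from typing import List, Mapping

# Assumed baseline of commonly recommended HTTP response security headers.
RECOMMENDED_HEADERS = (
    "Strict-Transport-Security",
    "Content-Security-Policy",
    "X-Content-Type-Options",
    "X-Frame-Options",
    "Referrer-Policy",
)


def missing_security_headers(headers: Mapping[str, str]) -> List[str]:
    """Return recommended headers absent from a response (case-insensitive)."""
    present = {name.lower() for name in headers}
    return [h for h in RECOMMENDED_HEADERS if h.lower() not in present]
```

A non-empty result for a payment, vendor, or patient portal is a prompt to look closer, not proof of a vulnerability on its own.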


Below: Free tools webpage where you can access our Website Vulnerability Scanner and other security assessment tools to support your AI voice fraud and deepfake payments defense sprint.

Below: Sample vulnerability assessment report generated with our free tool to check Website Vulnerability, highlighting missing security headers and exposed resources.

Where Pentest Testing Corp Fits in Your AI Voice Fraud Program

This 30-day AI voice fraud and deepfake payments defense sprint is designed so internal teams can start immediately. When you need outside help, Pentest Testing Corp can plug in at multiple layers:

- AI Application Cybersecurity – Assess AI-driven helpdesks, fraud engines, and decision systems that sit behind your contact centers.

- Web, API, and Cloud Penetration Testing – Validate real-world exploitability of the portals and APIs your agents use during high-risk calls.

- Risk Assessment Services (HIPAA, PCI DSS, SOC 2, ISO 27001, GDPR) – Frame AI voice fraud and deepfake payments explicitly in your risk register and DPIAs, with prioritized treatment plans.

- Remediation Services – Convert identified gaps into a time-boxed remediation sprint, then implement technical, policy, and training fixes with audit-ready documentation.

- PTaaS (Pentest Testing as a Service) – Keep verification flows, portals, and APIs under continuous, on-demand testing as attackers evolve.

Looking for a practical 30-day plan to tighten tenant isolation and reduce cross-tenant risk in your SaaS? Check out our latest guide, “30-Day Multi-Tenant SaaS Breach Containment Blueprint”: https://www.pentesttesting.com/multi-tenant-saas-breach-containment/ — we map real tenant boundaries, harden RBAC, and share code-level examples you can apply immediately.

If you’re ready to move from concern to action, this 30-day sprint gives you a practical, auditable path to defend against AI voice fraud and deepfake payments — and Pentest Testing Corp can help you execute it with real-world pentesting and compliance expertise.
