7 Proven Steps for a HIPAA AI Risk Assessment Sprint

HIPAA + AI in 2025: how to run a risk assessment and remediation sprint for clinical AI projects.

Clinical AI is now everywhere: triage chatbots, diagnostic support, ambient scribing, revenue cycle automation, virtual care. Most of these touch PHI or sit one API call away from it.

What hasn’t kept up is the HIPAA AI risk assessment process. Many security and compliance teams still treat AI like just another web app, even when:

- PHI is passed into external LLMs,

- models are trained on real patient data,

- or AI output is used for clinical decisions.

This guide is written for CISOs and risk leaders who want a 30–60 day, fix-first HIPAA AI risk assessment and remediation sprint that produces audit-ready evidence, not just a stack of findings.

We’ll show how to:

- Inventory AI use cases that touch PHI,

- Run a HIPAA AI risk assessment that maps to the Security Rule,

- Turn gaps into a time-boxed remediation sprint, and

- Plug directly into Pentest Testing Corp’s Risk Assessment and Remediation services when you need help closing the loop.

If you’re designing or reviewing your AI security program, don’t miss our deep dive on 7 Proven AI Red Teaming Steps Auditors Trust, where we turn real LLM attack scenarios into audit-ready evidence for NIS2, EU AI Act, SOC 2, and HIPAA.

TL;DR: Your 30–60 Day HIPAA AI Sprint

- Scope: Define what counts as AI, PHI, and “in scope” systems.

- Inventory: Build a living catalog of AI use cases and systems.

- Map Data Flows: Trace PHI from source to model to logs.

- Assess Risk: Run a HIPAA AI risk assessment per use case with simple, explainable scoring.

- Plan a Sprint: Turn risks into a 30–60 day remediation backlog.

- Implement Controls: Ship high-impact Security Rule safeguards (access, logging, encryption, vendor controls) with real code.

- Package Evidence: Build an audit-ready binder and plug into your broader risk assessment + remediation programs.

We’ll include practical snippets (Python, SQL, YAML, JavaScript, and Nginx config) you can adapt in real clinical AI environments.

1. Why HIPAA + AI in 2025 Needs Its Own Risk Lens

HIPAA is technology-neutral, but the Security Rule requires you to:

- Perform an accurate and thorough risk analysis of systems handling ePHI,

- Implement risk management,

- Control access, integrity, and transmission security, and

- Review information system activity (logs, monitoring).

In 2025, those requirements apply just as much to AI-enabled systems such as:

- AI-enabled clinical decision support

- AI-powered documentation tools integrated with EHRs

- Patient-facing chatbots that collect symptoms, insurance details, and demographics

- Background AI services for coding, billing, or fraud detection

A HIPAA AI risk assessment should answer:

- Where does PHI go before, during, and after AI processing?

- Which models and vendors can “see” PHI?

- What would an OCR investigator or auditor expect to see as evidence?

This guide plugs directly into your broader Risk Assessment Services and Remediation Services programs so AI isn’t a one-off project—it becomes part of your unified risk register and fix-first workflow.

2. Step 1 – Build an AI Inventory That Actually Touches PHI

Start by defining what “AI” means in your environment:

- Hosted LLM APIs (e.g., vendor GPT-style services),

- In-house ML models (imaging, NLP, risk scoring),

- Third-party SaaS with embedded AI features,

- RPA/automation that calls AI under the hood.

Create a single inventory of AI use cases that may touch PHI.

Example AI use-case inventory (YAML):

```yaml
- id: AI-CLN-001
  name: ED triage chatbot
  workflow: Emergency intake on patient portal
  phi_inputs: [symptoms, free_text_notes, date_of_birth, MRN]
  model_hosting: external_llm
  vendor: "LLM-Provider-X"
  baa_signed: true
  environments: [prod, staging]
  owner: "Director, Emergency Medicine"
  system_of_record: "Epic EHR"

- id: AI-DOC-002
  name: Ambient clinical scribe
  workflow: In-room note generation for outpatient visits
  phi_inputs: [audio, transcript, ICD_codes, medications]
  model_hosting: internal_ml
  vendor: null
  baa_signed: "n/a"   # internal model, no external vendor
  environments: [pilot-prod]
  owner: "CMIO"
```

You can generate a first pass by scanning config and repos for AI hints.

Quick Python helper to find AI usage in your repos:

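```python
import pathlib
import re

# Regex hints that suggest an AI/LLM call; extend with your own SDKs and endpoints.
AI_HINTS = [
    r"openai\.ChatCompletion",      # legacy OpenAI Python SDK (< 1.0)
    r"chat\.completions\.create",   # OpenAI Python SDK >= 1.0
    r"llm_client\.",
    r"vertexai\.generative",
    r"ai_endpoint",
    r"/v1/chat/completions",
]

SOURCE_SUFFIXES = {".py", ".js", ".ts", ".java"}


def find_ai_usage(root: str = "."):
    """Return (path, pattern) for each source file matching at least one AI hint."""
    hits = []
    for path in pathlib.Path(root).rglob("*"):
        if not path.is_file() or path.suffix not in SOURCE_SUFFIXES:
            continue
        text = path.read_text(encoding="utf-8", errors="ignore")
        for pattern in AI_HINTS:
            if re.search(pattern, text):
                hits.append((path, pattern))
                break  # one hit per file is enough for triage
    return hits


if __name__ == "__main__":
    for file_path, pattern in find_ai_usage("."):
        print(f"[AI] {file_path} (matched: {pattern})")
```

Review the output with engineering leads and clinical owners to confirm which AI features actually touch PHI.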
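Once owners confirm the list, a quick consistency check over the inventory can surface obvious gaps before the formal assessment. The sketch below assumes the inventory YAML has already been parsed into a list of dicts (with a YAML library of your choice) and uses only the field names shown in it. The rules are illustrative, not a complete HIPAA test, and `AI-RCM-003` is a hypothetical entry added to show a failing case.

```python
def inventory_gaps(use_cases):
    """Flag inventory entries that need attention before the risk assessment.

    Illustrative rules only: an external model receiving PHI needs a signed BAA
    and a named vendor, and every entry needs an accountable owner.
    """
    findings = []
    for uc in use_cases:
        uc_id = uc.get("id", "<missing id>")
        if uc.get("phi_inputs") and uc.get("model_hosting") == "external_llm":
            if uc.get("baa_signed") is not True:
                findings.append((uc_id, "external LLM receives PHI without a signed BAA"))
            if not uc.get("vendor"):
                findings.append((uc_id, "external LLM has no vendor recorded"))
        if not uc.get("owner"):
            findings.append((uc_id, "no accountable owner"))
    return findings


inventory = [
    {"id": "AI-CLN-001", "phi_inputs": ["symptoms", "MRN"], "model_hosting": "external_llm",
     "vendor": "LLM-Provider-X", "baa_signed": True, "owner": "Director, Emergency Medicine"},
    {"id": "AI-DOC-002", "phi_inputs": ["audio", "transcript"], "model_hosting": "internal_ml",
     "vendor": None, "baa_signed": "n/a", "owner": "CMIO"},
    # Hypothetical entry to illustrate findings
    {"id": "AI-RCM-003", "phi_inputs": ["ICD_codes"], "model_hosting": "external_llm",
     "vendor": "Hypothetical-Vendor", "baa_signed": False, "owner": ""},
]

for uc_id, issue in inventory_gaps(inventory):
    print(f"{uc_id}: {issue}")
```

Each finding is a candidate risk record for Step 3 rather than a verdict; a missing owner, for example, may simply mean the inventory is stale.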

3. Step 2 – Map PHI Data Flows for Each AI Use Case

Once you have the inventory, map end-to-end PHI flows for each AI use case:

- Source systems (EHR, PACS, LIS, CRM, portal)

- Pre-processing (ETL, de-identification, tokenization)

- AI engine (internal model vs vendor API)

- Post-processing (EHR updates, clinician UI, billing systems)

- Logging, observability, backups, and data lakes

Represent each AI PHI flow as code so you can keep it current.

Simple Python model for AI PHI data flows:

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PHINode:
    id: str
    type: str          # ehr_db, ai_api, data_lake, portal, log_store, etc.
    contains_phi: bool
    region: str        # e.g. "us-east-1"
    vendor: Optional[str] = None


@dataclass
class PHIDataFlow:
    use_case_id: str
    path: List[PHINode] = field(default_factory=list)

    def summary(self):
        chain = " -> ".join(n.id for n in self.path)
        phi_nodes = [n.id for n in self.path if n.contains_phi]
        return {
            "use_case": self.use_case_id,
            "path": chain,
            "phi_nodes": phi_nodes,
        }


ehr = PHINode(id="EHR-DB", type="ehr_db", contains_phi=True, region="us-east-1")
api = PHINode(id="AI-LLM-VENDOR", type="ai_api", contains_phi=True,
              region="us-east-1", vendor="LLM-Provider-X")
logs = PHINode(id="APP-LOGS", type="log_store", contains_phi=False, region="us-east-1")

flow = PHIDataFlow(use_case_id="AI-CLN-001", path=[ehr, api, logs])
print(flow.summary())
```

Use these flow summaries in your risk assessment interviews and, later, as audit evidence.
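The same model can also carry simple automated checks. The sketch below is self-contained (it redefines a trimmed `PHINode`) and assumes two hypothetical inputs you would maintain yourself: a set of approved regions and a set of vendors with a signed BAA. It flags PHI that reaches a vendor without a BAA on file or leaves the approved regions; the `VENDOR-LOGS` hop is invented for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class PHINode:
    id: str
    contains_phi: bool
    region: str
    vendor: Optional[str] = None


def check_flow(path: List[PHINode], allowed_regions: Set[str], baa_vendors: Set[str]) -> List[str]:
    """Return human-readable issues for PHI-bearing nodes in one data flow."""
    issues = []
    for node in path:
        if not node.contains_phi:
            continue  # relies on contains_phi being accurate for every hop
        if node.region not in allowed_regions:
            issues.append(f"{node.id}: PHI processed in unapproved region {node.region}")
        if node.vendor and node.vendor not in baa_vendors:
            issues.append(f"{node.id}: PHI sent to {node.vendor} without a BAA on file")
    return issues


path = [
    PHINode("EHR-DB", True, "us-east-1"),
    PHINode("AI-LLM-VENDOR", True, "us-east-1", vendor="LLM-Provider-X"),
    PHINode("VENDOR-LOGS", True, "eu-west-1", vendor="Log-Vendor-Y"),  # hypothetical hop
]
for issue in check_flow(path, allowed_regions={"us-east-1"}, baa_vendors={"LLM-Provider-X"}):
    print(issue)
```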

4. Step 3 – Run a HIPAA AI Risk Assessment Per Use Case

With inventory and data flows in hand, you can now run the HIPAA AI risk assessment itself.

For every AI use case, evaluate:

- Risk Analysis: threats to the confidentiality, integrity, and availability of PHI (model inversion, data leakage, prompt injection, vendor compromise).

- Access Control: who can run the AI, under what role, and from where.

- Integrity: how AI outputs that influence care are reviewed and documented.

- Transmission Security: TLS, certificate management, and endpoint exposure.

- BAAs & Vendors: scope of each BAA and subprocessor relationship.

Store your results in a machine-readable risk register.

Example HIPAA AI risk record (JSON):

```json
{
  "id": "RISK-AI-001",
  "use_case_id": "AI-CLN-001",
  "threat": "PHI leakage to external LLM vendor logs",
  "hipaa_refs": ["164.308(a)(1)", "164.312(e)", "164.502(e)"],
  "likelihood": "medium",
  "impact": "high",
  "inherent_score": 6,
  "controls_in_place": [
    "TLS 1.2+ to AI API",
    "BAA with vendor"
  ],
  "gaps": [
    "No PHI minimization layer before API",
    "Vendor log retention not documented"
  ],
  "proposed_treatment": "mitigate",
  "owner": "CISO",
  "target_date": "2025-02-15"
}
```

You can automate scoring so every record uses the same scale (here, likelihood × impact on a 1 to 3 scale, so medium × high = 6).

Python helper to compute risk scores and levels:

```python
# 3-point ordinal scales; score = likelihood x impact (range 1-9)
LIKELIHOOD = {"low": 1, "medium": 2, "high": 3}
IMPACT = {"low": 1, "medium": 2, "high": 3}


def compute_score(likelihood: str, impact: str) -> tuple[int, str]:
    score = LIKELIHOOD[likelihood] * IMPACT[impact]
    if score >= 7:
        level = "high"
    elif score >= 4:
        level = "medium"
    else:
        level = "low"
    return score, level


print(compute_score("medium", "high"))  # (6, 'medium')
print(compute_score("high", "high"))    # (9, 'high')
```

This gives you a defensible, explainable HIPAA AI risk assessment that can be reused across frameworks (HIPAA, SOC 2, ISO 27001, etc.), in line with your unified risk register approach.
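Because the register is machine-readable, the same scale can be re-applied to every record to catch scores that drift from their ratings, such as a hand-edited score of 16 on a 1 to 9 scale. A minimal, self-contained sketch that repeats the 3×3 scale and thresholds above; the `inherent_level` field it writes and the `RISK-AI-002` record are hypothetical additions, not part of the record format shown earlier.

```python
LIKELIHOOD = {"low": 1, "medium": 2, "high": 3}
IMPACT = {"low": 1, "medium": 2, "high": 3}


def level_for(score: int) -> str:
    # Same thresholds as compute_score(): 7-9 high, 4-6 medium, 1-3 low
    if score >= 7:
        return "high"
    if score >= 4:
        return "medium"
    return "low"


def rescore(risks):
    """Recompute inherent_score in place; return IDs whose stored score disagreed."""
    drifted = []
    for r in risks:
        expected = LIKELIHOOD[r["likelihood"]] * IMPACT[r["impact"]]
        if r.get("inherent_score") != expected:
            drifted.append(r["id"])
        r["inherent_score"] = expected
        r["inherent_level"] = level_for(expected)  # hypothetical extra field
    return drifted


register = [
    {"id": "RISK-AI-001", "likelihood": "medium", "impact": "high", "inherent_score": 16},
    {"id": "RISK-AI-002", "likelihood": "low", "impact": "high", "inherent_score": 3},
]
print(rescore(register))  # ['RISK-AI-001']
```

Running this in CI against the register file keeps sprint prioritization (which sorts on `inherent_score`) honest.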

5. Step 4 – Turn AI Findings into a 30–60 Day Remediation Sprint

A risk assessment without a remediation plan just produces findings that pile up in your inbox. Your goal is a time-boxed remediation sprint for AI-related HIPAA risks, similar to the 12-week fix-first model you may already use for broader compliance findings.

5.1 Normalize findings

Combine AI-specific risks with your existing compliance risks so you avoid parallel plans.

Example: convert findings to a sprint-ready format (Python):

```python
import math
from datetime import date, timedelta


def to_sprint_items(risks, sprint_weeks=8, start=None):
    """Spread risks across the sprint, highest inherent_score first."""
    start = start or date.today()
    # Ceiling division: every item lands in week 1..sprint_weeks, none pile into the last week
    per_week = max(1, math.ceil(len(risks) / sprint_weeks))
    items = []
    ranked = sorted(risks, key=lambda x: x["inherent_score"], reverse=True)
    for i, r in enumerate(ranked):
        week = min(i // per_week, sprint_weeks - 1) + 1
        due = start + timedelta(weeks=week)
        items.append({
            "key": r["id"],
            "summary": f"[AI] {r['threat']}",
            "owner": r["owner"],
            "due_date": due.isoformat(),
            "hipaa_refs": ",".join(r["hipaa_refs"]),
            "use_case_id": r["use_case_id"],
        })
    return items

# later: export to CSV for Jira/GitHub import
```

5.2 Export to your ticketing system

Sample CSV header and row for Jira import:

```csv
Summary,Description,Assignee,Due Date,Labels
"[AI] PHI leakage to LLM logs","Mitigate risk RISK-AI-001 for use case AI-CLN-001",ciso,2025-02-15,"hipaa,ai,security-rule"
```

This turns the HIPAA AI risk assessment into a prioritized backlog that engineering, data, and compliance teams can execute over 30–60 days.
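To bridge 5.1 and 5.2, sprint items can be written out under the column headers from the sample above. This is a sketch: the mapping (summary to Summary, owner to Assignee, and so on), lowercasing owners into usernames, and the fixed label set are assumptions. Jira's CSV importer asks you to map columns to fields at import time, so check how your instance expects assignees and multiple labels.

```python
import csv
import io

JIRA_COLUMNS = ["Summary", "Description", "Assignee", "Due Date", "Labels"]


def items_to_jira_csv(items, labels=("hipaa", "ai", "security-rule")):
    """Render sprint items as CSV text using the sample Jira column headers."""
    buf = io.StringIO()
    writer = csv.writer(buf)  # quotes only fields that need it (e.g. the label list)
    writer.writerow(JIRA_COLUMNS)
    for item in items:
        writer.writerow([
            item["summary"],
            f"Mitigate risk {item['key']} for use case {item['use_case_id']}",
            item["owner"].lower(),  # assumption: assignees are lowercase usernames
            item["due_date"],
            ",".join(labels),
        ])
    return buf.getvalue()


sprint_items = [{
    "key": "RISK-AI-001",
    "summary": "[AI] PHI leakage to external LLM vendor logs",
    "owner": "CISO",
    "due_date": "2025-02-15",
    "use_case_id": "AI-CLN-001",
}]
print(items_to_jira_csv(sprint_items))
```

To save the result, open the target file with `newline=""` so the CSV line endings are written unchanged.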

6. Step 5 – Implement High-Impact HIPAA AI Controls (With Code)

This is where most of the value sits: mapping Security Rule safeguards to AI-specific controls and actually shipping the changes.

6.1 Access controls & role-based AI usage

Restrict AI features that touch PHI to appropriate roles and contexts.

Example: Node.js middleware enforcing AI access by clinical role:

```javascript
function requireClinicalRole(allowedRoles) {
  return (req, res, next) => {
    const user = req.user; // populated by your auth layer
    if (!user || !allowedRoles.includes(user.role)) {
      return res.status(403).json({ error: "AI feature not permitted" });
    }
    if (!user.mfa_verified) {
      return res.status(403).json({ error: "MFA required for AI access" });
    }
    next();
  };
}

// usage
app.post("/api/ai/triage",
  requireClinicalRole(["clinician", "er_physician"]),
  handleTriageRequest
);
```

This ties directly into HIPAA’s technical safeguard for access control.

6.2 PHI minimization and redaction before AI APIs

Before sending any PHI to an external model, scrub what you can:

- Replace direct identifiers (name, MRN, address) with pseudonyms,

- Remove unnecessary demographics,

- Mask IDs where possible.

Example: simple PHI scrubbing in Python (NLP pipeline stub):

```python
import re
from typing import Dict, List, Tuple

# Illustrative patterns only; real de-identification needs far broader coverage
# (names, dates, addresses, phone numbers, and other identifiers).
PHI_PATTERNS = [
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "<SSN>"),
    (re.compile(r"\bMRN[:#]?\s*\d+\b", re.IGNORECASE), "<MRN>"),
    # Non-capturing group so findall() returns the whole match, not just "Dr"
    (re.compile(r"\b(?:Dr\.?|Doctor)\s+[A-Z][a-z]+\b"), "<PROVIDER>"),
]


def scrub_phi(text: str) -> Tuple[str, Dict[str, List[str]]]:
    """Replace matched identifiers with tokens; return scrubbed text and token -> originals."""
    replacements = {}
    for pattern, token in PHI_PATTERNS:
        for m in set(pattern.findall(text)):
            replacements.setdefault(token, set()).add(m)
        text = pattern.sub(token, text)
    return text, {k: sorted(v) for k, v in replacements.items()}


note = "Dr Smith saw MRN 123456 today; SSN 123-45-6789."
scrubbed, mapping = scrub_phi(note)
print(scrubbed)
# <PROVIDER> saw <MRN> today; SSN <SSN>.
```

Use this scrubbed text for AI prompts, but keep a secure mapping server-side (with access controls and audit logs).
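A token-to-list mapping cannot tell two MRNs in the same note apart, so it cannot reliably re-identify an AI response. One common alternative is numbered pseudonyms with a per-request lookup table, sketched below. The token format (`<MRN_1>`) and the `pseudonymize()`/`reidentify()` helpers are illustrative assumptions, the patterns are the same limited set as the stub above, and the lookup table is itself PHI that belongs in the same access-controlled, audited store.

```python
import re
from typing import Dict, Tuple

# Same illustrative patterns as the stub above (not a complete de-identification set)
PHI_PATTERNS = [
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "SSN"),
    (re.compile(r"\bMRN[:#]?\s*\d+\b", re.IGNORECASE), "MRN"),
    (re.compile(r"\b(?:Dr\.?|Doctor)\s+[A-Z][a-z]+\b"), "PROVIDER"),
]


def pseudonymize(text: str) -> Tuple[str, Dict[str, str]]:
    """Replace each distinct identifier with a numbered token; return text and token -> original."""
    lookup: Dict[str, str] = {}
    reverse: Dict[str, str] = {}   # original -> token, so repeated values reuse one token
    counters: Dict[str, int] = {}

    for pattern, label in PHI_PATTERNS:
        def _swap(m):
            original = m.group(0)
            if original not in reverse:
                counters[label] = counters.get(label, 0) + 1
                token = f"<{label}_{counters[label]}>"
                reverse[original] = token
                lookup[token] = original
            return reverse[original]
        text = pattern.sub(_swap, text)  # _swap is used immediately, so `label` is current
    return text, lookup


def reidentify(text: str, lookup: Dict[str, str]) -> str:
    """Swap tokens in an AI response back to the original values (server-side only)."""
    for token, original in lookup.items():
        text = text.replace(token, original)
    return text


note = "MRN 111 and MRN 222 were seen by Dr Smith; MRN 111 needs follow-up."
safe, table = pseudonymize(note)
print(safe)
# <MRN_1> and <MRN_2> were seen by <PROVIDER_1>; <MRN_1> needs follow-up.
```

Keeping one table per request (rather than a global one) limits how much can be re-identified if a single table leaks.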

6.3 Secure transmission and endpoint exposure

Ensure all AI traffic is encrypted and fronted by a hardened gateway.

Example: Nginx AI gateway enforcing TLS and limiting origins:

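```nginx
server {
    listen 443 ssl http2;
    server_name ai-gateway.example.org;

    # Placeholder paths: point these at your gateway's certificate and key
    ssl_certificate     /etc/nginx/tls/ai-gateway.crt;
    ssl_certificate_key /etc/nginx/tls/ai-gateway.key;
    ssl_protocols TLSv1.2 TLSv1.3;
    ssl_ciphers HIGH:!aNULL:!MD5:!3DES:!SHA1;

    add_header Strict-Transport-Security "max-age=31536000; includeSubDomains" always;
    add_header X-Content-Type-Options nosniff;
    add_header X-Frame-Options DENY;

    # Only allow browser traffic from your app frontends (anchored regex).
    # Origin is easy to forge outside a browser, so keep real authN/authZ upstream.
    if ($http_origin !~* "^https://(portal|ehr)\.example\.org$") {
        return 403;
    }

    location /v1/ai {
        proxy_pass https://ai-provider.internal;
        # Verify the upstream certificate; nginx does not do this by default
        proxy_ssl_verify on;
        proxy_ssl_server_name on;
        proxy_ssl_trusted_certificate /etc/nginx/tls/internal-ca.pem;  # placeholder path
        proxy_set_header X-Org-Id "your-org-id";
        proxy_set_header X-Request-Id $request_id;
    }
}
```

This aligns with transmission security requirements and reduces your exposed surface area.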
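The gateway covers the server side; application code that calls it should enforce the same floor. A minimal sketch using Python's standard `ssl` module, assuming your HTTP client accepts an `ssl.SSLContext` (many do, but check yours). The function name and the optional CA bundle argument are placeholders.

```python
import ssl
from typing import Optional


def ai_client_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Client-side TLS settings for calls to the AI gateway.

    create_default_context() already enables certificate and hostname checks;
    the TLS 1.2 floor is set explicitly to match the gateway's ssl_protocols.
    """
    ctx = ssl.create_default_context(cafile=ca_file)  # ca_file: optional internal CA bundle
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.verify_mode = ssl.CERT_REQUIRED
    ctx.check_hostname = True
    return ctx


ctx = ai_client_tls_context()
```

For example, `urllib.request.urlopen(url, context=ctx)` accepts the context directly; stating the settings explicitly also makes them easy to show an auditor.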

6.4 Logging and monitoring of AI activity

HIPAA requires information system activity review (164.308(a)(1)) and audit controls (164.312(b)), and both apply to AI usage.

Log:

- Who invoked AI (user ID, role),

- When, from where (IP/device),

- Which AI use case and patient context,

- High-level outcome (e.g., “drafted discharge summary”), not full PHI.
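To capture who, when, and what without storing full PHI, one option is to log keyed hashes of the prompt and response instead of their text. A plain SHA-256 of a short clinical prompt can be brute-forced by anyone who can guess its template, so the sketch below uses HMAC-SHA256 with a secret key. The environment variable `AI_AUDIT_HMAC_KEY` and the helper names are assumptions; the field names mirror a subset of the `ai_audit_log` table in this section.

```python
import hashlib
import hmac
import os
from datetime import datetime, timezone


def _keyed_hash(value: str, key: bytes) -> str:
    # HMAC-SHA256: equal prompts can be correlated without exposing their content
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def build_ai_audit_record(user_id, user_role, patient_id, use_case_id,
                          prompt, result, decision_label=None, ip_address=None,
                          key=None):
    """Build one audit entry; the raw prompt and result text are never stored."""
    key = key or os.environ["AI_AUDIT_HMAC_KEY"].encode("utf-8")  # hypothetical env var
    return {
        "ts": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "user_role": user_role,
        "patient_id": patient_id,
        "use_case_id": use_case_id,
        "prompt_hash": _keyed_hash(prompt, key),
        "result_hash": _keyed_hash(result, key),
        "decision_label": decision_label,  # e.g. "triage_suggestion"
        "ip_address": ip_address,
    }


record = build_ai_audit_record(
    "u-123", "er_physician", "p-456", "AI-CLN-001",
    prompt="chest pain, 2 hours", result="suggest ESI level 2",
    decision_label="triage_suggestion", key=b"demo-key-do-not-use",
)
```

Store the key in your secrets manager, not next to the logs; rotating it means hashes from before and after rotation no longer correlate.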

Example: AI audit log table for PostgreSQL:

```sql
CREATE TABLE ai_audit_log (
    id              BIGSERIAL PRIMARY KEY,
    ts              TIMESTAMPTZ NOT NULL DEFAULT now(),
    user_id         TEXT NOT NULL,
    user_role       TEXT NOT NULL,
    patient_id      TEXT NOT NULL,
    use_case_id     TEXT NOT NULL,
    prompt_hash     TEXT NOT NULL,
    result_hash     TEXT NOT NULL,
    decision_label  TEXT,          -- e.g. "triage_suggestion", "note_draft"
    ip_address      INET,
    user_agent      TEXT
);

CREATE INDEX idx_ai_audit_patient_ts
    ON ai_audit_log (patient_id, ts DESC);
```

Pair this with SIEM rules to detect anomalies (unusual AI usage patterns, mass exports, after-hours spikes).
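Most SIEMs express these as correlation rules, but the logic is simple enough to prototype in Python against exported audit rows first. The sketch below flags users who exceed a per-hour call threshold or invoke AI outside working hours. The function name, the threshold of 50, the 07:00-19:00 window, and treating timestamps as local time are all assumptions to tune for your shifts (an ED runs around the clock).

```python
from collections import Counter
from datetime import datetime


def flag_ai_anomalies(rows, max_per_hour=50, work_start=7, work_end=19):
    """rows: dicts with 'user_id' and 'ts' (datetime). Returns sorted (user_id, reason) pairs."""
    alerts = set()
    per_user_hour = Counter()
    for row in rows:
        ts: datetime = row["ts"]
        # Bucket by user and clock hour to spot bursts such as mass exports
        per_user_hour[(row["user_id"], ts.replace(minute=0, second=0, microsecond=0))] += 1
        if not (work_start <= ts.hour < work_end):
            alerts.add((row["user_id"], "after-hours AI use"))
    for (user_id, hour), count in per_user_hour.items():
        if count > max_per_hour:
            alerts.add((user_id, f"{count} AI calls in hour starting {hour:%Y-%m-%d %H:00}"))
    return sorted(alerts)


rows = [{"user_id": "u-1", "ts": datetime(2025, 1, 6, 10, m)} for m in range(55)]
rows.append({"user_id": "u-2", "ts": datetime(2025, 1, 6, 2, 30)})
for alert in flag_ai_anomalies(rows):
    print(alert)
```

Once the thresholds stop producing noise on historical data, port the same rules into the SIEM so alerts land in your normal incident workflow.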

6.5 Vendor and BAA governance for AI

Track AI vendors and their BAAs with the same rigor as any cloud PHI host.

Example: vendor register in YAML including AI-specific fields:

```yaml
- vendor: "LLM-Provider-X"
  service: "Hosted LLM for triage chatbot"
  phi_access: true
  baa_signed: true
  baa_expiry: "2026-06-30"
  data_location: ["us-east-1"]
  log_retention_days: 30
  sub_processors_reviewed: true

- vendor: "AI-Notes-SaaS"
  service: "Ambient scribe for outpatient clinics"
  phi_access: true
  baa_signed: pending
  data_location: ["us-central1"]
  log_retention_days: 365
  sub_processors_reviewed: false
```

During your HIPAA AI risk assessment, any AI vendor without a valid BAA or clear data handling story should be treated as high risk until proven otherwise.
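That rule is easy to automate against the vendor register. A minimal sketch, assuming the YAML has been parsed into dicts (the two records are repeated inline so the example runs on its own). What counts as a "clear data handling story" here (reviewed sub-processors and documented log retention at or under a 90-day threshold) is an illustrative assumption, as are the function name and the "standard" label.

```python
from datetime import date


def vendor_risk(vendor, as_of=None, max_retention_days=90):
    """Return ('high', reasons) or ('standard', []) for one AI vendor record."""
    as_of = as_of or date.today()
    if not vendor.get("phi_access"):
        return "standard", []  # no PHI access: outside this rule
    reasons = []
    if vendor.get("baa_signed") is not True:
        reasons.append(f"BAA not signed ({vendor.get('baa_signed')})")
    expiry = vendor.get("baa_expiry")
    if expiry is None or date.fromisoformat(expiry) < as_of:
        reasons.append("BAA expiry missing or past")
    if not vendor.get("sub_processors_reviewed"):
        reasons.append("sub-processors not reviewed")
    retention = vendor.get("log_retention_days")
    if retention is None:
        reasons.append("log retention undocumented")
    elif retention > max_retention_days:
        reasons.append(f"log retention {retention} days exceeds {max_retention_days}")
    return ("high", reasons) if reasons else ("standard", [])


vendors = [
    {"vendor": "LLM-Provider-X", "phi_access": True, "baa_signed": True,
     "baa_expiry": "2026-06-30", "log_retention_days": 30, "sub_processors_reviewed": True},
    {"vendor": "AI-Notes-SaaS", "phi_access": True, "baa_signed": "pending",
     "log_retention_days": 365, "sub_processors_reviewed": False},
]
for v in vendors:
    level, reasons = vendor_risk(v, as_of=date(2025, 6, 1))
    print(v["vendor"], level, reasons)
```

Passing `as_of` explicitly makes the check reproducible in evidence; leaving it out evaluates against today's date, which catches BAAs that expire mid-sprint.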

7. Step 6 – Package HIPAA + AI Evidence for Auditors

Once the sprint is underway, you need a repeatable evidence kit so future audits (HIPAA, SOC 2, ISO 27001, EU AI Act) don’t turn into a fresh manual effort every time.

Design a simple evidence structure, for example:

```text
evidence/
  01_scope/
    ai_scope_statement.pdf
    phi_systems_list.xlsx
  02_inventory/
    ai_use_case_inventory.yaml
    ai_system_diagrams.pdf
  03_risk_assessment/
    hipaa_ai_risk_register.json
  04_controls/
    access_control_configs/
    ai_gateway_configs/
    phi_scrubber_code/
  05_logs/
    ai_audit_log_samples.csv
    siem_alert_screenshots/
  06_vendor/
    baa_llm-provider-x.pdf
    vendor_risk_reviews/
```

You can automate hashing and indexing of these files for integrity:

Example: simple evidence indexer in Python:

```python
import hashlib
import json
from pathlib import Path


def index_evidence(root="evidence"):
    """Return [{path, sha256}] for every file under root, in a stable order."""
    index = []
    for path in sorted(Path(root).rglob("*")):
        if path.is_file():
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            index.append({"path": path.as_posix(), "sha256": digest})
    return index


if __name__ == "__main__":
    with open("evidence_index.json", "w", encoding="utf-8") as f:
        json.dump(index_evidence(), f, indent=2)
```

This evidence pack becomes part of your broader compliance risk assessment and remediation story, not a one-off AI binder.
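An index only helps if someone checks it. Before handing the pack to an auditor, you can re-hash every file and compare against the saved index to detect modified, missing, or unindexed files. A self-contained sketch, assuming the index format above (a JSON list of `{"path", "sha256"}` entries with POSIX-style paths); `verify_evidence()` and `sha256_file()` are hypothetical helper names.

```python
import hashlib
import json
from pathlib import Path


def sha256_file(path: Path) -> str:
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):  # stream large files
            h.update(chunk)
    return h.hexdigest()


def verify_evidence(index_file="evidence_index.json", root="evidence"):
    """Compare files on disk against the saved index; return lists of problem paths."""
    entries = json.loads(Path(index_file).read_text(encoding="utf-8"))
    expected = {e["path"]: e["sha256"] for e in entries}
    on_disk = {p.as_posix(): p for p in Path(root).rglob("*") if p.is_file()}
    return {
        "missing": sorted(set(expected) - set(on_disk)),
        "unindexed": sorted(set(on_disk) - set(expected)),
        "modified": sorted(p for p in set(expected) & set(on_disk)
                           if sha256_file(on_disk[p]) != expected[p]),
    }
```

Run `verify_evidence()` from the directory that holds `evidence/` and `evidence_index.json`; any non-empty list is worth explaining before the audit rather than during it.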

8. Step 7 – Where Pentest Testing Corp Fits In

You don’t have to run the entire HIPAA AI risk assessment sprint alone.

Pentest Testing Corp can help you:

- Run a HIPAA-aligned compliance risk assessment across AI, web, API, and cloud platforms, using the same Risk Assessment Services for HIPAA, PCI DSS, SOC 2, ISO 27001 & GDPR that you use for other systems.

- Design and execute a fix-first remediation sprint for AI findings via our Remediation Services, focusing on encryption, logging, access controls, and policy updates.

- Integrate AI-specific risks into a unified risk register and evidence pack that satisfies multiple frameworks at once.

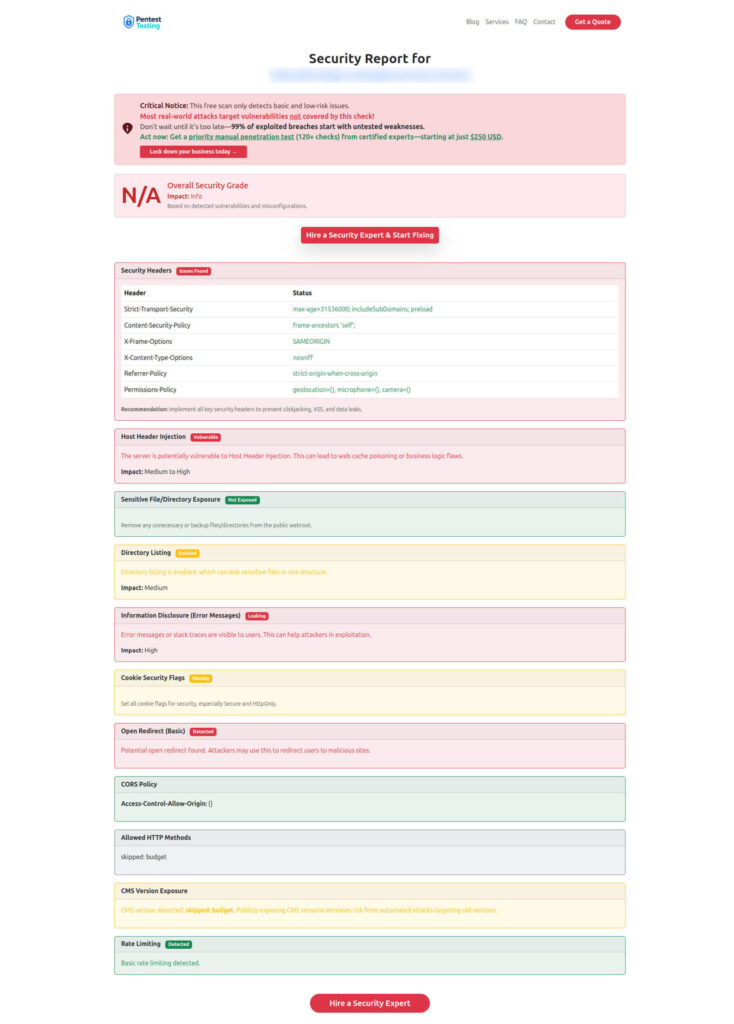

9. Using the Free Website Vulnerability Scanner in Your HIPAA AI Story

Many AI features are exposed via patient portals, clinician consoles, or APIs on the public internet. Before—or during—your sprint, you can use Pentest Testing Corp’s free Website Vulnerability Scanner for a quick hygiene check on those surfaces.

Screenshot of the free tools landing page

Sample report from the free Website Vulnerability Scanner

10. Related Reading on Pentest Testing Corp

- EU AI Act SOC 2: 7 Proven Steps to AI Governance – for teams juggling HIPAA with EU AI Act and SOC 2 obligations.
  https://www.pentesttesting.com/eu-ai-act-soc-2/

- 12-Week Fix-First Compliance Risk Assessment Remediation – how to turn large assessments into structured sprints (extend it with AI findings).
  https://www.pentesttesting.com/compliance-risk-assessment-remediation/

- 7 Proven Steps to a Unified Risk Register in 30 Days – build one register for HIPAA, PCI DSS, SOC 2, ISO 27001, GDPR, and now AI.
  https://www.pentesttesting.com/unified-risk-register-in-30-days/

- HIPAA Remediation 2025: 14-Day Proven Security Rule Sprint – deeper dive into Security Rule remediation patterns you can reuse for AI workloads.
  https://www.pentesttesting.com/hipaa-remediation-2025/
