12-Week Fix-First Compliance Risk Assessment Remediation

Why “Fix-First Security” After a Compliance Risk Assessment?

Your latest HIPAA, PCI DSS, SOC 2, ISO 27001, or GDPR compliance risk assessment lands in your inbox. It’s usually a spreadsheet: rows of risks, colours, and comments.

What you actually need is a 12-week, fix-first remediation sprint that:

- Reduces real risk across all five frameworks

- Produces audit-ready evidence as you go

- Improves future pentest outcomes instead of just passing this year’s check

This guide walks security and compliance leaders through a practical compliance risk assessment remediation approach:

- Normalize findings from your latest assessment

- Tag each item by framework + business impact

- Plan a 12-week remediation sprint

- Turn findings into tickets with owners and due dates

- Capture evidence automatically as fixes ship

Along the way, we’ll show code examples you can adapt in your environment, and how to plug in Pentest Testing Corp’s Risk Assessment Services and Remediation Services to keep the program moving.

TL;DR: 12-Week Fix-First Blueprint

- Input: Your latest compliance risk assessment (HIPAA/PCI/SOC 2/ISO 27001/GDPR)

- Output: A 12-week remediation sprint with:

  - Prioritized backlog

  - Clear ownership per finding

  - Evidence folders per framework

- Loop: Assess → Prioritize → Remediate → Verify, then repeat every 6–12 months

Step 1 – Normalize Your Compliance Risk Assessment Findings

Most organisations receive assessment output as an Excel sheet, a GRC export, and a few PDF reports. Before you can plan remediation, normalize everything into a single findings dataset.

1.1 Define a unified finding schema

Start with a JSON/YAML schema that works across HIPAA, PCI DSS, SOC 2, ISO 27001, and GDPR:

# findings.yml
- id: "F-0012"
  title: "Production S3 bucket allows public read"
  asset: "prod-logs-bucket"
  source: "ISO 27001 risk assessment Q4"
  frameworks: ["ISO 27001"]
  categories: ["Cloud", "Access Control"]
  likelihood: 4  # 1–5
  impact: 5  # 1–5
  business_impact: "Revenue, regulatory, brand"
  external_exposure: true
  status: "Open"
  owner: "cloud_sec_lead"
  evidence: []

1.2 Convert assessment CSV to normalized JSON

Assume your auditor exported a CSV with columns like Risk ID, Title, Framework, Asset, Likelihood, Impact, Business Impact.

# normalize_assessment.py
import csv
import json
from pathlib import Path


def to_int(value, default=3):
    # Blank or non-numeric cells fall back to the default rating
    try:
        return int(value)
    except (TypeError, ValueError):
        return default


def normalize(csv_path: str, json_path: str) -> None:
    findings = []
    # utf-8-sig also handles the byte-order mark that Excel exports often add
    with open(csv_path, newline="", encoding="utf-8-sig") as f:
        reader = csv.DictReader(f)
        for row in reader:
            # Accept both "PCI DSS; SOC 2" and "PCI DSS, SOC 2"
            frameworks = [
                fw.strip()
                for fw in (row.get("Framework") or "").replace(";", ",").split(",")
                if fw.strip()
            ] or ["UNMAPPED"]
            findings.append({
                "id": row["Risk ID"],
                "title": row["Title"],
                "asset": row.get("Asset") or "",
                "source": row.get("Source") or "Compliance risk assessment",
                "frameworks": frameworks,
                "categories": [c.strip() for c in (row.get("Category") or "").split(",") if c.strip()],
                "likelihood": to_int(row.get("Likelihood")),
                "impact": to_int(row.get("Impact")),
                "business_impact": (row.get("Business Impact") or "").strip(),
                "external_exposure": (row.get("External") or "No").strip().lower() in ("yes", "true", "1"),
                "status": row.get("Status") or "Open",
                "owner": row.get("Owner") or "unassigned",
                "evidence": [],
            })
    Path(json_path).write_text(json.dumps(findings, indent=2), encoding="utf-8")


if __name__ == "__main__":
    normalize("assessment_export.csv", "findings.json")

This gives you one canonical file (findings.json) as the input to your 12-week compliance risk assessment remediation sprint.
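Before moving on, it can help to sanity-check the normalized file against the schema from 1.1. The following is a minimal sketch rather than part of the original pipeline: validate_finding, validate_file, and REQUIRED_FIELDS are hypothetical names, and the rules (required keys, integer ratings from 1 to 5, at least one framework) are assumptions drawn from the example record in findings.yml.

```python
# validate_findings.py (hypothetical helper, not one of the original scripts)
import json
from pathlib import Path

# Keys taken from the example record in findings.yml
REQUIRED_FIELDS = [
    "id", "title", "asset", "source", "frameworks", "categories",
    "likelihood", "impact", "business_impact", "external_exposure",
    "status", "owner", "evidence",
]


def validate_finding(finding: dict) -> list[str]:
    """Return a list of human-readable problems; an empty list means OK."""
    problems = [f"missing field: {k}" for k in REQUIRED_FIELDS if k not in finding]
    for key in ("likelihood", "impact"):
        value = finding.get(key)
        # Ratings are assumed to be integers on the 1-5 scale
        if not isinstance(value, int) or isinstance(value, bool) or not 1 <= value <= 5:
            problems.append(f"{key} must be an integer from 1 to 5, got {value!r}")
    if not finding.get("frameworks"):
        problems.append("frameworks must list at least one entry")
    return problems


def validate_file(json_path: str) -> dict[str, list[str]]:
    """Map finding id -> problems for every invalid finding in the file."""
    findings = json.loads(Path(json_path).read_text(encoding="utf-8"))
    report = {}
    for finding in findings:
        problems = validate_finding(finding)
        if problems:
            report[finding.get("id", "<no id>")] = problems
    return report


if __name__ == "__main__":
    for finding_id, problems in validate_file("findings.json").items():
        print(finding_id, "->", "; ".join(problems))
```

Running a check like this right after normalization surfaces blank ratings and untagged rows while the assessor is still available to clarify them.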

Step 2 – Tag Findings by Framework & Business Impact

You now want each finding clearly mapped to the frameworks it affects (HIPAA, PCI DSS, SOC 2, ISO 27001, GDPR), plus a triage score that takes business impact into account.

2.1 Auto-tag frameworks based on description

Even if your assessor tagged frameworks, it’s useful to check and enrich them programmatically:

# tag_frameworks.py
import json
import re
from pathlib import Path

KEYWORDS = {
    "HIPAA": ["phi", "patient", "ephi", "clinic", "ehr"],
    "PCI DSS": ["cardholder", "cde", "pan", "pci"],
    "SOC 2": ["saas", "customer data", "trust services", "soc 2"],
    "ISO 27001": ["isms", "annex a", "iso 27001"],
    "GDPR": ["gdpr", "eu data", "data subject", "dpo", "article 32"],
}


def enrich_frameworks(finding):
    text = (finding["title"] + " " + finding.get("business_impact", "")).lower()
    # Drop the "UNMAPPED" placeholder written by normalize_assessment.py
    auto = set(finding.get("frameworks", [])) - {"UNMAPPED"}
    for fw, words in KEYWORDS.items():
        # Match whole words (optional plural "s") so "pan" does not fire
        # on "company" and "phi" does not fire on "phishing"
        if any(re.search(rf"\b{re.escape(w)}s?\b", text) for w in words):
            auto.add(fw)
    # Ensure at least ISO 27001 as baseline for general ISMS work
    if not auto:
        auto.add("ISO 27001")
    finding["frameworks"] = sorted(auto)


def main():
    data = json.loads(Path("findings.json").read_text(encoding="utf-8"))
    for f in data:
        enrich_frameworks(f)
    Path("findings_tagged.json").write_text(json.dumps(data, indent=2), encoding="utf-8")


if __name__ == "__main__":
    main()

2.2 Compute a simple risk score for prioritization

Use a consistent formula that avoids “spreadsheet politics”:

# score_findings.py
import json
from pathlib import Path

FRAMEWORK_WEIGHTS = {
    "HIPAA": 1.3,
    "PCI DSS": 1.4,
    "SOC 2": 1.2,
    "ISO 27001": 1.1,
    "GDPR": 1.4,
}


def score_finding(f):
    base = f["likelihood"] * f["impact"]
    max_weight = max((FRAMEWORK_WEIGHTS.get(fw, 1.0) for fw in f["frameworks"]), default=1.0)
    # Extra bump if internet-exposed
    exposure_boost = 2 if f.get("external_exposure") else 0
    return int(base * max_weight) + exposure_boost


def main():
    data = json.loads(Path("findings_tagged.json").read_text(encoding="utf-8"))
    for f in data:
        f["score"] = score_finding(f)
        if f["score"] >= 25:
            f["tier"] = "Critical"
        elif f["score"] >= 18:
            f["tier"] = "High"
        elif f["score"] >= 10:
            f["tier"] = "Medium"
        else:
            f["tier"] = "Low"
    Path("findings_scored.json").write_text(json.dumps(data, indent=2), encoding="utf-8")


if __name__ == "__main__":
    main()

2.3 SQL view for dashboard summaries

Once findings are in a database table, create a quick view grouped by framework and tier:

-- Table: findings(id, title, frameworks, tier, score, status)
-- Assumes frameworks is a comma-separated string (PostgreSQL);
-- for a text[] column, use UNNEST(frameworks) directly.
-- Note: tier sorts alphabetically here (Critical, High, Low, Medium).
CREATE VIEW framework_risk_summary AS
SELECT
    TRIM(fw) AS framework,
    tier,
    COUNT(*) AS total_findings
FROM (
    SELECT
        id,
        tier,
        UNNEST(STRING_TO_ARRAY(frameworks, ',')) AS fw
    FROM findings
) f
GROUP BY TRIM(fw), tier
ORDER BY framework, tier;

This gives leadership a simple dashboard of where compliance risk assessment remediation work is concentrated.
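If the findings are not in a database yet, roughly the same summary can be produced straight from findings_scored.json. This is a minimal sketch that assumes the JSON structure written in 2.2; summarize, print_summary, and TIER_ORDER are hypothetical names, not part of the original scripts.

```python
# summarize_findings.py (hypothetical helper)
import json
from collections import Counter
from pathlib import Path

# Display order for tiers; plain alphabetical sorting would put Low before Medium
TIER_ORDER = ["Critical", "High", "Medium", "Low"]


def summarize(findings: list[dict]) -> Counter:
    """Count findings per (framework, tier).

    A finding tagged with several frameworks is counted once per framework.
    """
    counts = Counter()
    for f in findings:
        for fw in f["frameworks"]:
            counts[(fw, f["tier"])] += 1
    return counts


def print_summary(counts: Counter) -> None:
    frameworks = sorted({fw for fw, _ in counts})
    for fw in frameworks:
        cells = ", ".join(f"{tier}: {counts[(fw, tier)]}" for tier in TIER_ORDER)
        print(f"{fw:<10} {cells}")


if __name__ == "__main__":
    data = json.loads(Path("findings_scored.json").read_text(encoding="utf-8"))
    print_summary(summarize(data))
```

Because multi-framework findings are counted once per framework, the per-framework totals can add up to more than the number of findings. The same holds for the SQL view.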

Step 3 – Design the 12-Week Remediation Sprint

With scored findings, you can design a 12-week remediation sprint that’s realistic but aggressive.

Think in four phases:

- Weeks 1–3 – Stabilise & quick wins

- Weeks 4–6 – Core control gaps

- Weeks 7–9 – Cross-framework hardening

- Weeks 10–12 – Verification & evidence

3.1 Map findings to weeks programmatically

You can auto-assign a “target week” using score, external exposure, and framework:

# plan_12_week_sprint.py
import json
from pathlib import Path


def assign_week(f):
    """Return the week in which work on a finding should start."""
    # Critical → Week 1 if externally exposed, otherwise Week 2
    if f["tier"] == "Critical":
        return 1 if f.get("external_exposure") else 2
    # High → starts in Week 3 (window: Weeks 3–5)
    if f["tier"] == "High":
        return 3
    # Medium → starts in Week 6 (window: Weeks 6–9)
    if f["tier"] == "Medium":
        return 6
    # Low → starts in Week 10 (window: Weeks 10–12)
    return 10


def main():
    data = json.loads(Path("findings_scored.json").read_text(encoding="utf-8"))
    for f in data:
        f["target_week"] = assign_week(f)
    Path("remediation_plan.json").write_text(json.dumps(data, indent=2), encoding="utf-8")


if __name__ == "__main__":
    main()

You can then slice by week when planning each sprint:

import json

with open("remediation_plan.json", encoding="utf-8") as fh:
    findings = json.load(fh)

week = 1
week_backlog = [f for f in findings if f["target_week"] == week and f["status"] == "Open"]

print(f"Week {week} backlog:")
for item in week_backlog:
    print(f"- [{item['tier']}] {item['id']} – {item['title']} ({', '.join(item['frameworks'])})")

3.2 What to tackle in each phase

- Weeks 1–3 – Stabilise & quick wins

  - External-facing misconfigurations (TLS, WAF, open buckets, exposed admin panels)

  - High-impact identity and access issues (MFA gaps, excess admin accounts)

  - Anything blocking a clean external pentest

- Weeks 4–6 – Core control gaps

  - Logging, monitoring, and retention across in-scope systems

  - Change management and deployment controls

  - Baseline encryption at rest and in transit

- Weeks 7–9 – Cross-framework hardening

  - Policy updates, joiner–mover–leaver flows, vendor due diligence

  - Data lifecycle controls (retention, purging, export)

  - Mapping each major fix to HIPAA, PCI DSS, SOC 2, ISO 27001, GDPR requirements

- Weeks 10–12 – Verify & package evidence

  - Internal checks + targeted pentests

  - Evidence collection and mapping per framework

  - Final walkthrough with your assessor/auditor
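The assign_week function in 3.1 places every finding in a single week per tier, which can overload Weeks 3 and 6. One way to spread the load is sketched below. It assumes a fixed per-week capacity and the tier windows named in the 3.1 code comments (Weeks 1–2, 3–5, 6–9, 10–12). WINDOWS, spread_over_weeks, and the capacity value are hypothetical, not part of the original scripts.

```python
# spread_backlog.py (hypothetical helper)
from collections import defaultdict

# Tier -> (first week, last week), assumed from the comments in 3.1
WINDOWS = {
    "Critical": (1, 2),
    "High": (3, 5),
    "Medium": (6, 9),
    "Low": (10, 12),
}


def spread_over_weeks(findings: list[dict], capacity: int = 5) -> dict[int, list[dict]]:
    """Assign each finding to the earliest week in its tier window with room.

    Findings are processed highest score first. If every week in the window
    is full, the finding goes into the window's last week (over capacity)
    so nothing silently drops out of the plan.
    """
    plan: dict[int, list[dict]] = defaultdict(list)
    for f in sorted(findings, key=lambda x: x["score"], reverse=True):
        first, last = WINDOWS[f["tier"]]
        week = next(
            (w for w in range(first, last + 1) if len(plan[w]) < capacity),
            last,
        )
        f["target_week"] = week
        plan[week].append(f)
    return dict(plan)
```

Unlike assign_week, this sketch does not treat externally exposed Critical items specially; they simply land in Week 1 while it has capacity. An overfull final week is a signal to renegotiate scope or add people rather than a scheduling error.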

For deeper detail on structuring your risk data, you can pair this article with “7 Proven Steps to a Unified Risk Register in 30 Days”:

https://www.pentesttesting.com/unified-risk-register-in-30-days/

Step 4 – Turn the Plan Into Tickets With Owners and Deadlines

Your 12-week compliance risk assessment remediation sprint lives and dies by ownership.

4.1 Represent remediation items as ticket payloads

{
  "risk_id": "F-0012",
  "summary": "[F-0012] Lock down public S3 bucket",
  "description": "Public read access is enabled on prod-logs-bucket.\n\nFrameworks: PCI DSS, SOC 2, ISO 27001\nTier: High\nTarget week: 3\n\nAcceptance criteria:\n- Public access disabled\n- SSE-KMS enabled\n- Evidence (CLI output + screenshot) attached.",
  "owner": "cloud_sec_lead",
  "target_week": 3,
  "status": "Planned",
  "frameworks": ["PCI DSS", "SOC 2", "ISO 27001"]
}

4.2 Simple Python exporter → CSV for Jira/GitHub

# export_backlog.py
import csv
import json
from pathlib import Path

FIELDS = ["Key", "Summary", "Description", "Assignee", "Labels"]


def main():
    data = json.loads(Path("remediation_plan.json").read_text(encoding="utf-8"))
    rows = []
    for f in data:
        if f["status"] != "Open":
            continue
        # Jira labels cannot contain spaces, so "PCI DSS" becomes "PCI-DSS"
        fw_labels = [fw.replace(" ", "-") for fw in f["frameworks"]]
        labels = ["ComplianceRemediation", f"Week-{f['target_week']}"] + fw_labels
        rows.append({
            "Key": "",
            "Summary": f"[{f['id']}] {f['title']}",
            "Description": f"Tier: {f['tier']}\nFrameworks: {', '.join(f['frameworks'])}\nTarget week: {f['target_week']}",
            "Assignee": f.get("owner", "unassigned"),
            # Adjust the separator if your importer expects something other than commas
            "Labels": ",".join(labels),
        })
    with open("backlog_import.csv", "w", newline="", encoding="utf-8") as out:
        writer = csv.DictWriter(out, fieldnames=FIELDS)
        writer.writeheader()
        writer.writerows(rows)


if __name__ == "__main__":
    main()

4.3 Example JQL to drive the board

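project = SEC
AND labels in (ComplianceRemediation)
ORDER BY "Target week" ASC, priority DESC

This creates a single remediation board for your entire compliance scope. The query assumes a custom field named "Target week" exists in your Jira project; the CSV export above carries the week only as a Week-N label, so either add that field or filter on the label instead. For a 90-day risk-register–centric variant, see “5 Proven Steps for a Risk Register Remediation Plan”: https://www.pentesttesting.com/risk-register-remediation-plan/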
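Step 4 calls for owners and due dates, but the plan so far stores only a target week. A minimal sketch of the conversion follows, assuming a known sprint start date and that each item is due on the last day of its target week. The names due_date and unowned, and the start date used in the example, are hypothetical.

```python
# due_dates.py (hypothetical helper)
from datetime import date, timedelta


def due_date(sprint_start: date, target_week: int) -> date:
    """Last day of the target week, counting Week 1 as the first 7 days."""
    if target_week < 1:
        raise ValueError("target_week starts at 1")
    return sprint_start + timedelta(days=7 * target_week - 1)


def unowned(findings: list[dict]) -> list[str]:
    """IDs of open findings that still have no real owner."""
    return [
        f["id"]
        for f in findings
        if f["status"] == "Open" and f.get("owner", "unassigned") in ("", "unassigned")
    ]


if __name__ == "__main__":
    start = date(2025, 1, 6)  # assumed sprint start (a Monday)
    print(due_date(start, 1))   # 2025-01-12
    print(due_date(start, 12))  # 2025-03-30
```

Running unowned against remediation_plan.json before the export is a cheap gate: if it returns anything, those items will otherwise reach the board assigned to "unassigned".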

Step 5 – Generate Audit-Ready Evidence as You Fix

You don’t want to do the hard remediation work and then scramble for evidence at the end of the 12 weeks.

5.1 Standard evidence folder structure

mkdir -p evidence/{10-scope,20-controls,30-logs,40-policies,50-tickets,60-screenshots}

5.2 Lightweight evidence indexer with hashes

# build_evidence_index.py
import hashlib
import time
from pathlib import Path

ROOT = Path("evidence")
INDEX = ROOT / "00-index.md"
ALLOWED_EXT = {".pdf", ".png", ".jpg", ".txt", ".csv", ".json", ".yaml", ".yml", ".md"}

lines = ["# Evidence Index\n"]
for path in sorted(ROOT.rglob("*")):
    # Skip the index itself so it is not listed with a stale hash
    if path == INDEX:
        continue
    if path.is_file() and path.suffix.lower() in ALLOWED_EXT:
        rel = path.relative_to(ROOT)
        # Full SHA-256 digest so each file can be verified independently
        digest = hashlib.sha256(path.read_bytes()).hexdigest()
        mtime = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime(path.stat().st_mtime))
        lines.append(f"- {rel}\n  - sha256: `{digest}`\n  - mtime: {mtime}\n")

INDEX.write_text("\n".join(lines), encoding="utf-8")

Run this as part of a nightly CI job so you always have an up-to-date evidence index for auditors.
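The schema in 1.1 reserves an evidence list on each finding, while the indexer only catalogs files. To tie the two together, something like the following could record each artifact against its finding as the fix ships. This is a sketch: attach_evidence and the record layout (path, sha256, recorded_at) are assumptions, not part of the original scripts.

```python
# attach_evidence.py (hypothetical helper)
import hashlib
from datetime import datetime, timezone
from pathlib import Path


def attach_evidence(finding: dict, artifact: Path) -> dict:
    """Add a hashed reference to `artifact` to finding["evidence"].

    Returns the record that describes the artifact.
    """
    record = {
        "path": artifact.as_posix(),
        "sha256": hashlib.sha256(artifact.read_bytes()).hexdigest(),
        # UTC timestamps avoid ambiguity when records are compared later
        "recorded_at": datetime.now(timezone.utc).isoformat(timespec="seconds"),
    }
    evidence = finding.setdefault("evidence", [])
    # Skip exact duplicates so re-running the job does not grow the list
    already_recorded = any(
        e["path"] == record["path"] and e["sha256"] == record["sha256"]
        for e in evidence
    )
    if not already_recorded:
        evidence.append(record)
    return record
```

A changed file produces a new hash and therefore a new entry, which leaves a simple trail of how an artifact evolved during the sprint.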

5.3 SQL view for weekly progress

-- Table remediation_items(risk_id, framework, tier, target_week, status, closed_at)
CREATE VIEW weekly_progress AS
SELECT
    target_week,
    framework,
    COUNT(*) FILTER (WHERE status <> 'Closed') AS open_items,
    COUNT(*) FILTER (WHERE status = 'Closed') AS closed_items
FROM remediation_items
GROUP BY target_week, framework
ORDER BY target_week, framework;

This provides leadership with a simple weekly view of how the 12-week remediation sprint is progressing per framework.

Where Pentest Testing Corp Fits Into the 12-Week Sprint

A 12-week compliance risk assessment remediation sprint is achievable, but many teams lack time or specialised staff.

At Pentest Testing Corp, we offer:

- Risk Assessment Services for HIPAA, PCI, SOC 2, ISO 27001, GDPR – to run or refresh your formal risk assessment and produce a clean, normalized findings set: https://www.pentesttesting.com/risk-assessment-services/

- Remediation Services for HIPAA, PCI, SOC 2, ISO 27001, GDPR – to co-own the 12-week remediation sprint, implement controls, and package evidence aligned to each framework: https://www.pentesttesting.com/remediation-services/

- Deep manual web, API, mobile, network, and cloud penetration testing with a free retest, so you can verify that controls put in place during the sprint are effective and exploitable gaps are closed.

How the Free Website Vulnerability Scanner Supports Your Sprint

Alongside formal assessments, quick external snapshots are invaluable.

Screenshot: Free tool Landing page

(Visitors can access the live tool at https://free.pentesttesting.com/ for an instant vulnerability snapshot without creating an account.)

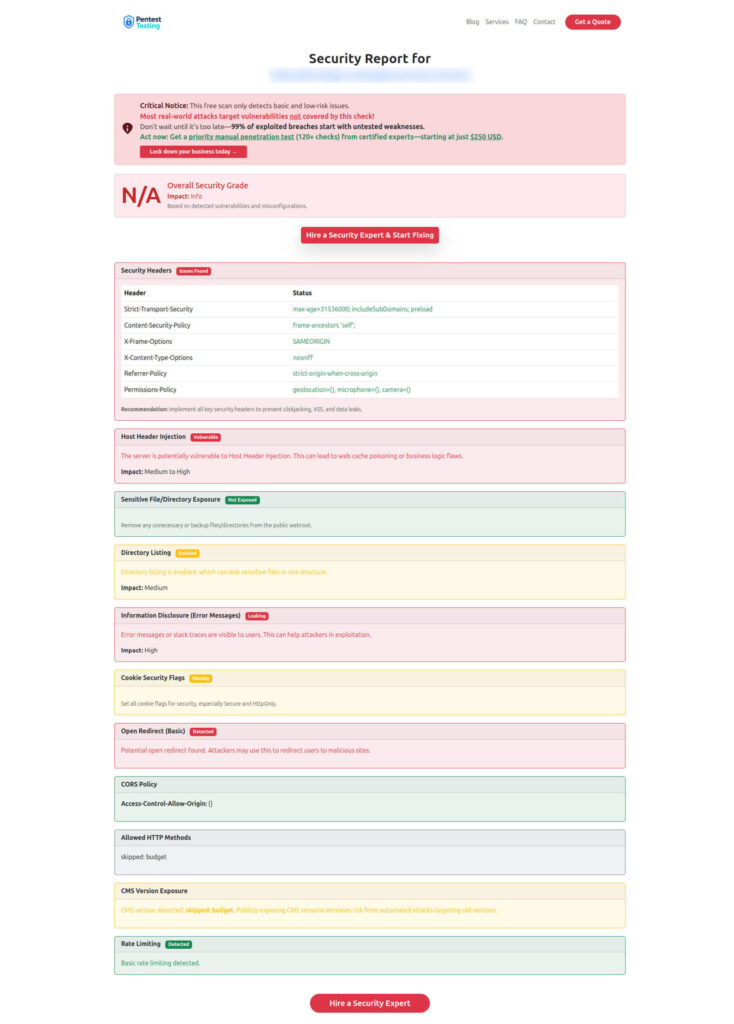

Sample report from the free tool to check Website Vulnerability

These visuals clearly demonstrate to stakeholders how lightweight scans and structured 12-week remediation sprints work together.

How This 12-Week Loop Improves Future Pentests

When you run this assess → prioritize → remediate → verify loop every 6–12 months:

- Pentest findings trend downward for repeat issues (e.g., TLS, missing headers, open ports)

- Auditors see a mature remediation process instead of a one-off clean-up

- Leadership gets a simple risk reduction narrative: scores by framework and week, mapped directly to real fixes

For a HIPAA-specific variation, you can pair this plan with “HIPAA Remediation 2025: 14-Day Proven Security Rule Sprint”:

https://www.pentesttesting.com/hipaa-remediation-2025/

For a deeper dive into risk registers and unified sprints, see:

- 7 Proven Steps to a Unified Risk Register in 30 Days

https://www.pentesttesting.com/unified-risk-register-in-30-days/

If you’re also working on AI governance, don’t miss our new guide on how to align EU AI Act and SOC 2 requirements and prove AI governance to auditors in 60 days: EU AI Act SOC 2: 7 Proven Steps to AI Governance.

🔐 Frequently Asked Questions (FAQs)

Find answers to commonly asked questions about Compliance Risk Assessment Remediation & 12-Week Sprints.