7 Essential SEC Cyber Disclosure Steps for 8-K

Investor and regulator expectations have changed: a breach is no longer “just” an incident response (IR) problem. It becomes an SEC cyber disclosure problem, a board governance problem, and sometimes (especially for public or IPO-bound companies) a litigation problem.

This playbook (inspired by patterns seen in high-profile e-commerce incidents like the Coupang case) shows how to produce Form 8-K Item 1.05-ready evidence without slowing containment. It is not legal advice; treat it as an operational blueprint your legal team can plug into.

If you’re working through edge exposure risks, don’t miss our step-by-step sprint guide on misconfigured edge devices—covering inventory, pentest validation, hardening, monitoring, and audit-ready evidence: https://www.pentesttesting.com/misconfigured-edge-devices-hardening-sprint/

Why cyber incidents now create disclosure and lawsuit risk

When a public-company incident hits headlines, three things happen fast:

- Materiality pressure: leadership must decide whether the incident is “material” and, if it is, file a Form 8-K under Item 1.05, generally within four business days of that determination.

- Narrative risk: inconsistent statements across security, legal, and investor relations get compared line-by-line.

- Proof demand: stakeholders want evidence that claims (scope, impact, containment, fixes) are supported by logs, tickets, and control artifacts.

Where teams usually fail

- They “decide materiality” verbally, but don’t document inputs (impact, scope, duration, customer harm, financial exposure).

- They preserve some logs, but lack a repeatable evidence pack (hashes, chain-of-custody, timeline).

- They communicate quickly, but don’t anchor statements to verifiable facts.

If you want a disclosure-ready assessment of your current incident readiness, start with a targeted risk review:

- Risk assessment services: https://www.pentesttesting.com/risk-assessment-services/

- Remediation services: https://www.pentesttesting.com/remediation-services/

Step 1) Build a materiality decision workflow (and document it)

A reliable SEC cyber disclosure workflow is less about “the perfect framework” and more about a repeatable meeting + documented rationale.

Minimum roles (RACI-style)

- Decision owner: CEO/CFO (or delegated exec) + legal counsel

- Inputs: CISO/IR lead, finance, privacy, product/ops, customer support

- Evidence steward: IR lead + GRC (keeps the evidence pack consistent)

- Approver for public statements: legal + comms + investor relations

Practical inputs to capture (the “materiality checklist”)

- Business impact (revenue interruption, churn, refunds, SLA penalties)

- Data impact (type of data, customer count, jurisdictions, contract exposure)

- Operational impact (downtime, degraded service, supply chain)

- Threat actor behavior (extortion, data publication, repeat access)

- Control failure context (what broke, how detected, whether persistent)
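One way to make the checklist enforceable is to check, before the meeting closes, that every input category was actually recorded. The sketch below is a minimal illustration under stated assumptions: the field names are hypothetical, chosen to mirror the checklist, and `control_failure` in particular is an invented key rather than part of any standard.

```python
# Hypothetical field names mirroring the checklist; adjust to your own template.
REQUIRED_INPUTS = (
    "financial_exposure",
    "data_impact",
    "operational_impact",
    "threat_actor",
    "control_failure",
)

# Values that mean "nobody has filled this in yet"
PLACEHOLDERS = {"", "unknown", "tbd"}


def missing_inputs(record: dict) -> list[str]:
    """Return checklist fields that are absent, empty, or still a placeholder."""
    missing = []
    for field in REQUIRED_INPUTS:
        value = str(record.get(field, "")).strip().lower()
        if value in PLACEHOLDERS:
            missing.append(field)
    return missing


if __name__ == "__main__":
    draft = {
        "financial_exposure": "Refund exposure being estimated",
        "data_impact": "Customer contact data (suspected)",
        "operational_impact": "TBD",
        "threat_actor": "Credential misuse",
    }
    print("Still undocumented:", missing_inputs(draft))
```

An empty result does not mean the incident is understood; it only shows that each category has a written entry the decision owner can point to.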

Template: materiality meeting notes (Markdown)

# Materiality Notes - Incident INC-2025-XXXX

Date/time:

Attendees:

Decision: Material / Not material / Pending

Reasoning (plain language):

- Impact summary:

- Known unknowns:

- Next decision checkpoint:

Evidence references (links to pack):

- Timeline: evidence/timeline/timeline.csv

- Scope notes: evidence/scope/scope.md

- Control proof: evidence/control-proof/

Code example: generate a hashed “materiality memo” from structured inputs (Python)

Run only in authorized environments. Store outputs in your legal hold location.

# materiality_memo.py
import datetime
import hashlib
import json
from pathlib import Path


def sha256(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in 1 MiB chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1024 * 1024), b""):
            h.update(chunk)
    return h.hexdigest()


def build_memo(inp: dict) -> str:
    """Render the memo text from the structured input."""
    # Timezone-aware UTC timestamp (datetime.utcnow() is deprecated in recent Python)
    now = datetime.datetime.now(datetime.timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    lines = [
        f"Materiality Memo - {inp['incident_id']}",
        f"Generated (UTC): {now}",
        "",
        f"Decision: {inp['decision']}",
        "",
        "Rationale:",
        inp["rationale"].strip(),
        "",
        "Inputs:",
        f"- Estimated financial exposure: {inp.get('financial_exposure', 'unknown')}",
        f"- Customer/data impact: {inp.get('data_impact', 'unknown')}",
        f"- Operational impact: {inp.get('operational_impact', 'unknown')}",
        f"- Threat actor behavior: {inp.get('threat_actor', 'unknown')}",
        "",
        "Next checkpoint:",
        inp.get("next_checkpoint", "TBD"),
        "",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    inp_path = Path("materiality_input.json")
    inp = json.loads(inp_path.read_text(encoding="utf-8"))

    out_dir = Path("evidence/materiality")
    out_dir.mkdir(parents=True, exist_ok=True)

    memo_path = out_dir / f"{inp['incident_id']}_materiality_memo.txt"
    memo_path.write_text(build_memo(inp), encoding="utf-8")

    # “Proof of integrity” (hash). This is a hash, not a cryptographic signature.
    # Two spaces between digest and name match the sha256sum output format.
    memo_path.with_suffix(".sha256").write_text(
        f"{sha256(memo_path)}  {memo_path.name}\n", encoding="utf-8"
    )

    print("Wrote:", memo_path)

Example materiality_input.json:

{
  "incident_id": "INC-2025-0042",
  "decision": "Pending",
  "rationale": "We have confirmed unauthorized access to a production admin portal. Impact is still being scoped.",
  "financial_exposure": "TBD (refunds + response costs)",
  "data_impact": "Potential exposure of customer contact data; no payment data confirmed.",
  "operational_impact": "No downtime; increased fraud monitoring costs.",
  "threat_actor": "Credential misuse; no extortion received yet.",
  "next_checkpoint": "24 hours after scope confirmation"
}

Step 2) Create the “8-K evidence pack” (minimum artifacts to preserve)

Think of the evidence pack as a single source of truth that supports what you tell regulators, investors, customers, and auditors.
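A single source of truth is only useful if you can show it has not changed since it was recorded. The sketch below is one possible integrity check, assuming the pack keeps a `manifest.csv` with `path,sha256` columns and paths relative to the working directory. The function names and the manifest location are illustrative assumptions.

```python
import csv
import hashlib
from pathlib import Path


def sha256_file(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in 1 MiB chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1024 * 1024), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_manifest(manifest: Path) -> list[tuple[str, str]]:
    """Return (path, problem) pairs for files that are missing or whose hash changed."""
    problems = []
    with manifest.open(newline="", encoding="utf-8") as f:
        for row in csv.DictReader(f):
            target = Path(row["path"])
            if not target.is_file():
                problems.append((row["path"], "missing"))
            elif sha256_file(target) != row["sha256"]:
                problems.append((row["path"], "hash mismatch"))
    return problems


if __name__ == "__main__":
    manifest_path = Path("evidence/manifest.csv")  # assumed location
    if manifest_path.is_file():
        for path, problem in verify_manifest(manifest_path):
            print(f"{problem}: {path}")
    else:
        print("No manifest found at", manifest_path)
```

Running a check like this before each materiality checkpoint gives you a dated record that the artifacts behind a statement were unchanged when the statement was made.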

Evidence pack folder structure (starter)

evidence/
  README.md
  manifest.csv
  chain_of_custody.md
  timeline/
    timeline.csv
    timeline_sources.md
  scope/
    scope.md
    ioc_list.txt
    affected_assets.csv
  impact/
    customer_counts.csv
    downtime_metrics.csv
    financial_estimates.md
  containment/
    actions_taken.md
    tickets/
  third_parties/
    vendor_notifications.md
    forensics_reports/
  control-proof/
    logging/
    access/
    backups/
    segmentation/

Code example: initialize the pack + generate a manifest with hashes

#!/usr/bin/env bash
# evidence_pack_init.sh
set -euo pipefail

# Brace expansion requires bash (not plain sh)
mkdir -p evidence/{timeline,scope,impact,containment,third_parties,control-proof}
mkdir -p evidence/control-proof/{logging,access,backups,segmentation}
touch evidence/README.md evidence/chain_of_custody.md
printf "path,sha256\n" > evidence/manifest.csv

# manifest_hash.py
import csv
import hashlib
from pathlib import Path


def sha256_file(p: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in 1 MiB chunks."""
    h = hashlib.sha256()
    with p.open("rb") as f:
        for chunk in iter(lambda: f.read(1024 * 1024), b""):
            h.update(chunk)
    return h.hexdigest()


root = Path("evidence")
rows = []
for p in root.rglob("*"):
    # Skip the manifest itself so its own hash does not change on every run
    if p.is_file() and p.name != "manifest.csv":
        rows.append((p.as_posix(), sha256_file(p)))

# Rewrite the manifest (header included) so reruns do not append duplicate rows
with (root / "manifest.csv").open("w", newline="", encoding="utf-8") as f:
    w = csv.writer(f)
    w.writerow(["path", "sha256"])
    w.writerows(sorted(rows))

print("Updated evidence/manifest.csv")

Step 3) Build a defensible timeline without slowing containment

The goal is not “perfect chronology.” The goal is good-enough, provable chronology that can be iterated as facts change.

What to include in the timeline

- First detection (alert, user report, vendor report)

- Earliest confirmed malicious action (from logs)

- Containment actions (token resets, blocks, segmentation, credential rotation)

- Scope expansion events (new affected system discovered)

- Third-party handoffs (forensics engaged, law enforcement contact if any)
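Events like these usually come from tools that record time in different zones, which makes ordering unreliable. A minimal sketch of one way to normalize them, assuming each source emits ISO 8601 timestamps with an explicit offset; the function name `to_utc_iso` is illustrative:

```python
from datetime import datetime, timezone


def to_utc_iso(ts: str) -> str:
    """Convert an ISO 8601 timestamp with an explicit offset to UTC in ...Z form."""
    # Older Python versions reject a trailing "Z" in fromisoformat, so swap it first.
    if ts.endswith("Z"):
        ts = ts[:-1] + "+00:00"
    parsed = datetime.fromisoformat(ts)
    if parsed.tzinfo is None:
        # Guessing a timezone would silently corrupt the chronology.
        raise ValueError(f"timestamp has no timezone, refusing to guess: {ts}")
    return parsed.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")


if __name__ == "__main__":
    for raw in ("2025-12-01T12:12:54+09:00", "2025-12-01T03:20:10Z"):
        print(raw, "->", to_utc_iso(raw))
```

Rejecting timestamps without a timezone is a deliberate choice in this sketch: an event you cannot place in UTC is better recorded with low confidence and a note than silently assigned a guessed offset.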

Template: timeline CSV

utc_time,source,actor,event,asset,confidence,ref
2025-12-01T03:12:54Z,SIEM,unknown,Admin login from new ASN,admin-portal,high,logs/splunk_event_123
2025-12-01T03:20:10Z,IR,tier1,Disabled suspected account,IdP,high,ticket/INC-42

Code example: merge events from JSON sources into a timeline (Python)

# build_timeline.py
import csv
import json
from pathlib import Path

FIELDS = ["utc_time", "source", "actor", "event", "asset", "confidence", "ref"]


def load_events(path: Path):
    """Yield event dicts from a JSON file containing a list of events."""
    data = json.loads(path.read_text(encoding="utf-8"))
    for e in data:
        # expects utc_time as an ISO string in a consistent UTC format
        yield e


sources = list(Path("timeline_sources").glob("*.json"))
events = []
for s in sources:
    events.extend(load_events(s))

# String sort is chronological only when every utc_time uses the same ISO/UTC format;
# events without utc_time sort first instead of raising KeyError
events.sort(key=lambda e: e.get("utc_time", ""))

out = Path("evidence/timeline/timeline.csv")
out.parent.mkdir(parents=True, exist_ok=True)

with out.open("w", newline="", encoding="utf-8") as f:
    w = csv.writer(f)
    w.writerow(FIELDS)
    for e in events:
        w.writerow([e.get(name, "") for name in FIELDS])

print("Wrote", out)

Step 4) Insider or credential misuse: investigation steps + control proof auditors expect

Credential misuse (stolen session, reused password, leaked API key, rogue admin) is where SEC cyber disclosure claims can get fragile because you need to prove both (1) what happened and (2) what controls were in place.

Investigation steps (practical order)

- Freeze: preserve IdP logs, admin audit logs, endpoint telemetry, VPN logs.

- Constrain: revoke sessions/refresh tokens, rotate keys, disable suspect accounts.

- Prove access path: phishing? token theft? password reuse? insider?

- Map blast radius: what was accessed, downloaded, modified.

- Control proof: show access reviews, MFA enforcement, logging retention, SoD.
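For the blast-radius step, it can help to reduce exported audit events to a per-action list of what the suspect account touched. The sketch below assumes audit events have already been exported as dictionaries with `actor`, `action`, and `resource` keys; those key names are hypothetical and will differ by log source.

```python
from collections import defaultdict


def blast_radius(events: list[dict], suspect: str) -> dict[str, set[str]]:
    """Group the resources a suspect account touched by action type."""
    touched = defaultdict(set)
    for e in events:
        if e.get("actor") == suspect:
            touched[e.get("action", "unknown")].add(e.get("resource", "unknown"))
    return dict(touched)


if __name__ == "__main__":
    sample = [
        {"actor": "svc-admin", "action": "download", "resource": "customers.csv"},
        {"actor": "svc-admin", "action": "modify", "resource": "role:admin"},
        {"actor": "alice", "action": "read", "resource": "dashboard"},
    ]
    for action, resources in sorted(blast_radius(sample, "svc-admin").items()):
        print(action, sorted(resources))
```

The output is a starting point for scope notes, not proof of completeness: it only reflects the log sources you exported.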

Real-time queries you can run (examples)

Splunk (SPL): new admin logins + impossible travel (generic pattern)

index=auth (action=success) (user=*admin* OR role=admin)
| eval geo=coalesce(src_country,"unknown")
| stats earliest(_time) as first latest(_time) as last values(src_ip) as ips values(geo) as countries by user
| where mvcount(ips) > 2 OR mvcount(countries) > 1

Microsoft Sentinel / Log Analytics (KQL): risky sign-ins + privilege role changes

SigninLogs
| where ResultType == 0
| where RiskLevelDuringSignIn in ("medium","high")
| project TimeGenerated, UserPrincipalName, IPAddress, Location, AppDisplayName, RiskLevelDuringSignIn
| order by TimeGenerated desc

AuditLogs
| where OperationName has "Add member to role" or OperationName has "Update user"
| project TimeGenerated, InitiatedBy, OperationName, TargetResources
| order by TimeGenerated desc

AWS CloudTrail (Athena SQL): suspicious credential activity (starter)

SELECT eventtime, eventname, useridentity.arn, sourceipaddress, useragent
FROM cloudtrail_logs
WHERE eventname IN ('ConsoleLogin','AssumeRole','CreateAccessKey','PutUserPolicy','AttachRolePolicy')
ORDER BY eventtime DESC
LIMIT 200;

Controls auditors (and investors) expect to see evidence for

- Access review evidence (who had admin, when reviewed, what changed)

- MFA enforcement policy evidence (especially for privileged roles)

- Logging retention and immutability for security logs

- Separation of duties (no single admin can approve + deploy + audit)

- Privileged access management or at least break-glass controls
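For access review evidence in particular, reviewers tend to ask what changed between two points in time. The sketch below compares two privileged-user exports. It assumes each export is a CSV with a `user` column, which is a hypothetical layout; real IdP exports will use different column names.

```python
import csv
from pathlib import Path


def load_admins(path: Path, user_col: str = "user") -> set[str]:
    """Read the set of privileged usernames from a CSV export."""
    with path.open(newline="", encoding="utf-8") as f:
        return {
            row[user_col].strip().lower()
            for row in csv.DictReader(f)
            if row.get(user_col)
        }


def diff_snapshots(before: Path, after: Path) -> dict[str, list[str]]:
    """Report which privileged users were added or removed between two snapshots."""
    old, new = load_admins(before), load_admins(after)
    return {"added": sorted(new - old), "removed": sorted(old - new)}
```

Storing the diff next to both hashed snapshots lets you show when a privileged account appeared, not just that it existed.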

Code example: quick privileged access snapshot (CSV) from a simple source

If your IAM/IdP exports users to CSV, keep a point-in-time snapshot with a hash.

# create a snapshot and hash it (example)
cp exports/privileged_users.csv evidence/control-proof/access/privileged_users_2025-12-01.csv
sha256sum evidence/control-proof/access/privileged_users_2025-12-01.csv > evidence/control-proof/access/privileged_users_2025-12-01.sha256

Step 5) Align comms: security + legal + comms + investor relations

A strong SEC cyber disclosure posture uses one operational rule:

One incident record. Many outputs.

Practical “single source of truth” fields

- Incident ID, dates (detected, contained, eradicated)

- What systems/data were involved (confirmed vs suspected)

- What actions were taken (containment + remediation)

- What remains unknown (and next checkpoint)

- Evidence references (pack paths)
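Before any output is rendered from the incident record, a quick consistency check can catch the most common contradictions. This is a minimal sketch; the field names (`incident_id`, `detected_utc`, `systems`, `actions_taken`, `next_checkpoint_utc`) are assumptions chosen for illustration, not a required schema.

```python
# Assumed field names for the incident record; adapt to your own schema.
REQUIRED_FIELDS = (
    "incident_id",
    "detected_utc",
    "systems",
    "actions_taken",
    "next_checkpoint_utc",
)


def check_facts(facts: dict) -> list[str]:
    """Return human-readable problems found in an incident facts record."""
    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if name not in facts]
    systems = facts.get("systems", {})
    # A system cannot be both confirmed and merely suspected at the same time.
    both = set(systems.get("confirmed", [])) & set(systems.get("suspected", []))
    problems += [f"listed as both confirmed and suspected: {name}" for name in sorted(both)]
    return problems


if __name__ == "__main__":
    record = {
        "incident_id": "INC-2025-0042",
        "detected_utc": "2025-12-01T03:12:54Z",
        "systems": {"confirmed": ["idp"], "suspected": ["idp", "support-dashboard"]},
        "actions_taken": ["Revoked sessions"],
    }
    for problem in check_facts(record):
        print(problem)
```

Failing the render step when this list is non-empty keeps a contradictory record from reaching customers or investors in two different forms.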

Code example: keep incident facts in YAML (then render to comms)

# incident_facts.yaml
# Timestamps are quoted so YAML loaders keep them as strings
# instead of parsing them into datetime objects.
incident_id: INC-2025-0042
status: Containment in progress
detected_utc: "2025-12-01T03:12:54Z"
systems:
  confirmed: ["admin-portal", "idp"]
  suspected: ["support-dashboard"]
data_impact:
  confirmed: "None confirmed"
  suspected: "Customer contact data"
actions_taken:
  - "Disabled suspect account"
  - "Revoked sessions"
  - "Rotated API keys"
next_checkpoint_utc: "2025-12-02T03:00:00Z"

# render_status_update.py

# Requires PyYAML (pip install pyyaml)
import yaml
from pathlib import Path

# Load the single incident record that every output is rendered from
facts = yaml.safe_load(Path("incident_facts.yaml").read_text(encoding="utf-8"))

msg = f"""INCIDENT UPDATE [{facts['incident_id']}]
Status: {facts['status']}
Detected (UTC): {facts['detected_utc']}
Confirmed systems: {', '.join(facts['systems']['confirmed'])}
Suspected systems: {', '.join(facts['systems']['suspected'])}
Data impact (confirmed): {facts['data_impact']['confirmed']}
Data impact (suspected): {facts['data_impact']['suspected']}
Actions taken: {', '.join(facts['actions_taken'])}
Next checkpoint (UTC): {facts['next_checkpoint_utc']}
"""

print(msg)

Step 6) Validate statements with independent testing

The safest way to avoid over-claiming is to validate the fixes and controls you reference.

Validation examples:

- Retest the exploited path (in a safe, authorized test environment)

- Confirm logging is capturing the relevant events (and stored immutably)

- Verify least privilege changes actually reduced blast radius

- Run a focused penetration test or control validation sprint
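The logging check in the list above can be made concrete: after a retest, export a sample of log records and confirm that every event type you rely on in a statement actually appears. The sketch assumes records are dictionaries with an `event_type` key; that key and the event names in the demo are hypothetical.

```python
def missing_event_types(
    expected: set[str], log_records: list[dict], key: str = "event_type"
) -> set[str]:
    """Return expected event types that never appear in the sampled log records."""
    seen = {r.get(key) for r in log_records}
    return expected - seen


if __name__ == "__main__":
    expected = {"admin_login", "role_change", "api_key_created"}
    sample = [
        {"event_type": "admin_login", "user": "test-admin"},
        {"event_type": "role_change", "user": "test-admin"},
    ]
    print("Not captured:", sorted(missing_event_types(expected, sample)))
```

A gap here is worth resolving before a statement claims that monitoring "would detect" a repeat of the incident.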

If you need independent validation to support stakeholder statements, start here:

- Disclosure-ready risk assessment: https://www.pentesttesting.com/risk-assessment-services/

- Hands-on remediation support: https://www.pentesttesting.com/remediation-services/

Step 7) Post-incident closure: remediation plan, board cadence, and re-testing

A closed incident should leave behind:

- A prioritized remediation plan (owners, dates, dependencies)

- A board-ready control improvement summary

- A re-test schedule and evidence of fixes
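To keep the re-test schedule honest between board meetings, a small script can flag open items that are past due. This sketch assumes tracker rows are dictionaries with `due_date` (ISO `YYYY-MM-DD`) and `status` columns, and that closed items use the status `Done`; the closed-status value is an assumption.

```python
from datetime import date


def overdue_items(rows: list[dict], today: date, closed_status: str = "done") -> list[dict]:
    """Return open tracker rows whose due date is before today."""
    late = []
    for r in rows:
        is_open = r["status"].strip().lower() != closed_status
        if is_open and date.fromisoformat(r["due_date"]) < today:
            late.append(r)
    return late


if __name__ == "__main__":
    tracker = [
        {"finding": "Legacy auth allowed", "due_date": "2026-01-10", "status": "In progress"},
        {"finding": "Stale admin account", "due_date": "2025-12-15", "status": "Done"},
    ]
    for row in overdue_items(tracker, date(2026, 2, 1)):
        print("OVERDUE:", row["finding"], "was due", row["due_date"])
```

Passing `today` explicitly, rather than reading the clock inside the function, makes the report reproducible when it is attached to board materials.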

Template: remediation tracker row (CSV)

finding,root_cause,fix_owner,due_date,control,validation_method,status
Admin portal allowed legacy auth,Policy drift,Platform Eng,2026-01-10,Access control,Pentest retest + log proof,In progress

Code example: generate a board summary table from the remediation tracker (Python)

# board_summary.py

import csv

from collections import Counter

from pathlib import Path

rows = []

with Path("remediation_tracker.csv").open(newline="", encoding="utf-8") as f:

for r in csv.DictReader(f):

rows.append(r)

status_counts = Counter(r["status"] for r in rows)

print("Remediation status summary:")

for k, v in status_counts.items():

print(f"- {k}: {v}")

print("\nTop controls impacted:")

controls = Counter(r["control"] for r in rows)

for k, v in controls.most_common(5):

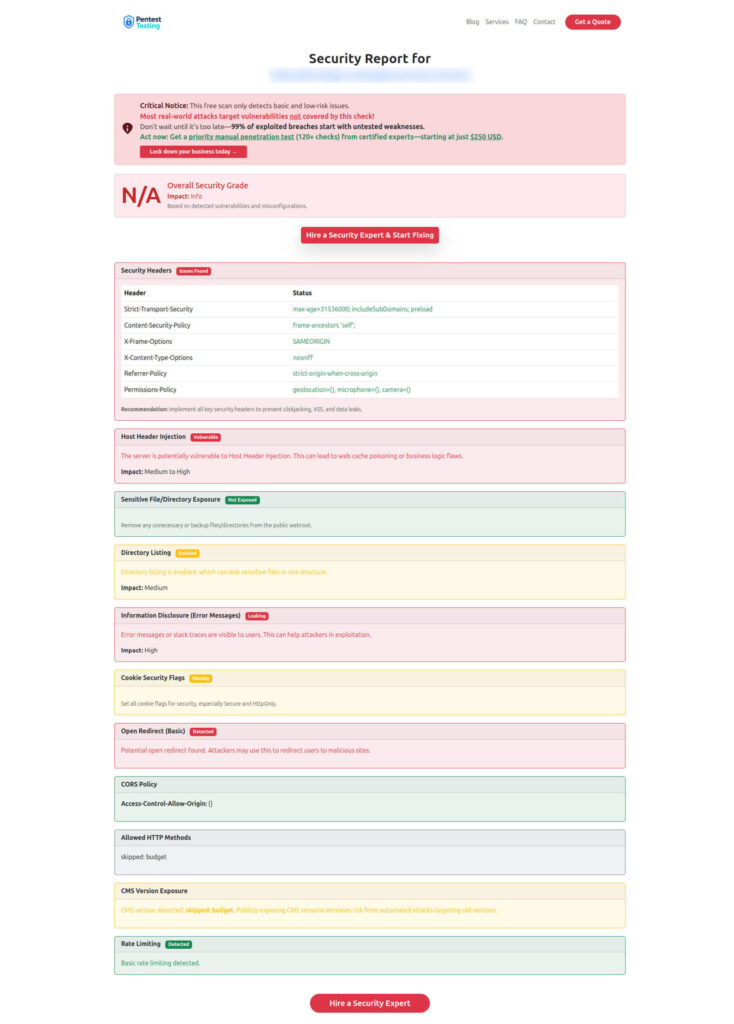

print(f"- {k}: {v}")Add-on: Use our free scanner for quick baseline evidence

Free Website Vulnerability Scanner Dashboard Screenshot

Sample Report Screenshot to check Website Vulnerability

Related reading from our blog

- AI cloud security risks: https://www.pentesttesting.com/ai-cloud-security-risks-modern-pentest/

- Extortion breach response playbook: https://www.pentesttesting.com/extortion-breach-playbook/

- React2Shell CVE rapid fix steps: https://www.pentesttesting.com/react2shell-cve-2025-55182-fix-steps/

- Browse all posts: https://www.pentesttesting.com/blog/

🔐 Frequently Asked Questions (FAQs)

Find answers to commonly asked questions about the SEC Cyber Disclosure Playbook for 8-K.