7 Powerful Risk-Driven API Throttling Tactics

Traditional rate limiting answers one question: “How many requests per minute?”

Attackers are asking a different question: “How do I look normal while I drain value?”

That’s why risk-driven API throttling matters. Instead of punishing every client equally, you adapt control strength to risk—based on identity confidence, behavior, endpoint sensitivity, and real-time signals. The goal is simple:

- Protect production APIs from abuse

- Avoid breaking legitimate customers

- Generate usable evidence for detection and forensics

If you want an expert review of your current controls, start with a structured gap assessment:

https://www.pentesttesting.com/risk-assessment-services/

Or validate your API security end-to-end with:

https://www.pentesttesting.com/api-pentest-testing-services/

Why traditional rate limiting isn’t enough

A flat “100 req/min per IP” policy fails in production because:

- Bots rotate IPs (residential proxies, cloud fleets, NAT pools)

- Credential stuffing is “low and slow” (many accounts, low volume per IP)

- Scraper fleets distribute load (thousands of identities, each “within limits”)

- Logic abuse isn’t volumetric (e.g., cart manipulation, promo validation, OTP spam)

Risk-driven API throttling fixes this by throttling based on risk, not just volume.
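To make the gap concrete, here is a small sketch with entirely synthetic traffic. The IP ranges, counts, and variable names are made up for illustration. It compares what a flat per-IP counter sees during a distributed credential-stuffing run with what a simple behavioral signal (the login failure ratio) sees over the same window.

```python
from collections import Counter

PER_IP_LIMIT = 100  # the flat "100 req/min per IP" policy from the text

# Synthetic one-minute window of login attempts (all values are made up).
# 50 attacker IPs try 2 stolen credential pairs each; 20 real users log in once.
attack = [{"ip": f"198.51.100.{i}", "ok": False} for i in range(50) for _ in range(2)]
legit = [{"ip": f"203.0.113.{i}", "ok": True} for i in range(20)]
events = attack + legit

# View 1: flat per-IP counting. Nobody comes close to the limit.
per_ip = Counter(e["ip"] for e in events)
ips_over_limit = {ip for ip, n in per_ip.items() if n > PER_IP_LIMIT}

# View 2: a behavioral signal. The failure ratio for the window is far from normal.
fail_ratio = sum(not e["ok"] for e in events) / len(events)

print(f"IPs over limit: {len(ips_over_limit)}")              # 0
print(f"busiest IP sent {max(per_ip.values())} requests")    # 2
print(f"login failure ratio: {fail_ratio:.2f}")              # 0.83
```

The per-IP view reports nothing unusual, while the failure ratio is well above what a healthy login endpoint would normally show.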

Abuse taxonomy: what you’re actually defending

Think in two categories:

1) Volumetric abuse

- brute traffic floods at the API edge

- endpoint hammering (search, export, list APIs)

- queue exhaustion

2) Logic abuse (often more damaging)

- credential reuse + session cycling

- automated mutation attacks (parameter variations to bypass caching or detection)

- enumeration (usernames, IDs, invoices, promo codes, OTPs)

- scrapers copying priced content, inventory, listings, or proprietary data

Your throttling strategy should treat login, OTP, password reset, search, export, and payments as high-risk surfaces even at low request rates.

Designing risk-driven throttling: signals that actually work

A good risk model doesn’t need “perfect device fingerprinting.” It needs stable, explainable signals you can log and tune.

Practical signals (mix-and-match)

Identity confidence

- authenticated user ID vs anonymous

- API key reputation / age

- session age and continuity (new session every request is suspicious)

Behavior

- auth failure ratios (failed logins / total)

- endpoint sequence anomalies (login → export → export → export)

- systematic parameter mutation (sequentially incrementing IDs, coupon guessing)

- concurrency spikes per user/token

Network + client

- ASN / geo anomalies relative to account history

- user-agent churn patterns

- “headless-ish” header patterns (missing Accept-Language, odd TLS/HTTP2 fingerprints if available)

Endpoint sensitivity

- cost to serve (DB-heavy search vs cached GET)

- business risk (OTP, checkout, account recovery)

Key idea: Risk-driven API throttling is strongest when you can compute risk per (principal, session, endpoint class).
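As one way to picture a per-key signal, the sketch below tracks an auth failure ratio over a sliding window, keyed by (principal, session, endpoint class). The class name `AuthFailTracker`, the 300-second window, and the in-memory storage are assumptions for illustration. A multi-instance deployment would need shared storage instead.

```python
import time
from collections import defaultdict, deque
from typing import Optional


class AuthFailTracker:
    """Sliding-window auth failure ratio per (principal, session, endpoint_class) key."""

    def __init__(self, window_seconds: float = 300.0):
        self.window = window_seconds
        self.events = defaultdict(deque)  # key -> deque of (timestamp, success)

    def record(self, key: tuple, success: bool, now: Optional[float] = None) -> None:
        now = time.time() if now is None else now
        self.events[key].append((now, success))
        self._evict(key, now)

    def fail_ratio(self, key: tuple, now: Optional[float] = None) -> float:
        now = time.time() if now is None else now
        self._evict(key, now)
        q = self.events.get(key)
        if not q:
            return 0.0  # no recent attempts: nothing to hold against this key
        failures = sum(1 for _, ok in q if not ok)
        return failures / len(q)

    def _evict(self, key: tuple, now: float) -> None:
        # Drop events that have aged out of the window.
        q = self.events.get(key)
        while q and q[0][0] <= now - self.window:
            q.popleft()


tracker = AuthFailTracker(window_seconds=300)
key = ("u:12345", "s:ab12", "login")
for ts, ok in [(0, False), (10, False), (20, True), (30, False)]:
    tracker.record(key, ok, now=ts)

print(tracker.fail_ratio(key, now=40))    # 0.75 (3 failures out of 4 attempts)
print(tracker.fail_ratio(key, now=1000))  # 0.0  (everything has aged out)
```

The resulting ratio can feed the `auth_fail_ratio` input used by the scoring logic later in this article.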

Core pattern: translate risk → decision

Most teams need four outcomes, not one:

- Allow (normal)

- Throttle (soft control: 429 + Retry-After)

- Challenge (step-up: CAPTCHA/OTP/mTLS/proof token)

- Block (hard deny, temporary or permanent)

Risk scoring (simple, explainable)

Here’s a minimal risk scoring approach you can implement today:

    # risk_score.py (reference logic)

    def risk_score(ctx: dict) -> int:
        score = 0

        # Identity confidence
        if not ctx.get("authenticated"):
            score += 15
        if ctx.get("api_key_age_days", 999) < 3:
            score += 10

        # Auth behavior
        if ctx.get("auth_fail_ratio", 0.0) > 0.4:
            score += 25

        # Session continuity
        if ctx.get("session_age_seconds", 999999) < 30:
            score += 10

        # Endpoint sensitivity
        score += ctx.get("endpoint_risk", 0)  # e.g. login=25, otp=30, export=20, search=10

        # Concurrency / burstiness
        if ctx.get("concurrent_requests", 0) > 8:
            score += 20

        return min(score, 100)

Decision thresholds

    def decision(score: int) -> str:
        if score < 25:
            return "allow"
        if score < 55:
            return "throttle"
        if score < 80:
            return "challenge"
        return "block"

This is the foundation of risk-driven API throttling: risk determines friction.
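To see how the weights and thresholds interact, the sketch below restates the same rules as a table and runs a few made-up request contexts through them. The rule names and the `explain` helper are hypothetical additions. The point values and thresholds are the ones from the reference logic above.

```python
# Same rules as the reference logic above, restated as a table so each
# triggered rule can be reported alongside the total.
RULES = [
    ("anonymous",        lambda c: not c.get("authenticated"),                15),
    ("new_api_key",      lambda c: c.get("api_key_age_days", 999) < 3,        10),
    ("auth_failures",    lambda c: c.get("auth_fail_ratio", 0.0) > 0.4,       25),
    ("fresh_session",    lambda c: c.get("session_age_seconds", 999999) < 30, 10),
    ("high_concurrency", lambda c: c.get("concurrent_requests", 0) > 8,       20),
]


def explain(ctx: dict) -> tuple:
    """Return (score, triggered rule names, decision) for one request context."""
    hits = [(name, pts) for name, test, pts in RULES if test(ctx)]
    score = min(sum(pts for _, pts in hits) + ctx.get("endpoint_risk", 0), 100)
    if score < 25:
        outcome = "allow"
    elif score < 55:
        outcome = "throttle"
    elif score < 80:
        outcome = "challenge"
    else:
        outcome = "block"
    return score, [name for name, _ in hits], outcome


# Logged-in user running a search
print(explain({"authenticated": True, "endpoint_risk": 10}))
# (10, [], 'allow')

# Anonymous login attempt on a brand-new session
print(explain({"authenticated": False, "session_age_seconds": 5, "endpoint_risk": 25}))
# (50, ['anonymous', 'fresh_session'], 'throttle')

# Same, but most recent login attempts from this key have failed
print(explain({"authenticated": False, "session_age_seconds": 5,
               "auth_fail_ratio": 0.6, "endpoint_risk": 25}))
# (75, ['anonymous', 'auth_failures', 'fresh_session'], 'challenge')

# Same again, with 12 requests in flight at once
print(explain({"authenticated": False, "session_age_seconds": 5, "auth_fail_ratio": 0.6,
               "concurrent_requests": 12, "endpoint_risk": 25}))
# (95, ['anonymous', 'auth_failures', 'fresh_session', 'high_concurrency'], 'block')
```

Returning the triggered rule names with the score keeps each decision explainable when it shows up in logs.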

Abuse mitigations you can deploy without breaking services

1) Dynamic backoff (adaptive throttling)

Instead of a fixed “429,” increase backoff as risk increases:

    // dynamic-backoff.js (Express-style)

    function retryAfterSeconds(score) {
      // 0..100 -> 1..60 seconds
      const s = Math.max(0, Math.min(score, 100));
      return Math.ceil(1 + (s / 100) * 59);
    }

    function throttleResponse(res, score, reason = "throttled") {
      const retryAfter = retryAfterSeconds(score);
      res.set("Retry-After", String(retryAfter));
      return res.status(429).json({
        error: "Too Many Requests",
        reason,
        retry_after_seconds: retryAfter,
      });
    }

Why it works: Legit users naturally slow down; bots often don’t.

2) “Cost-based” throttling (charge more for risky requests)

Instead of counting every request as 1, assign a token cost:

    // cost-based-tokens.js

    function requestCost({ endpointClass, score }) {
      const base = {
        public_read: 1,
        search: 2,
        login: 5,
        otp: 6,
        export: 8,
        payment: 10,
      }[endpointClass] ?? 2;

      // score adds 0..5 extra cost
      const extra = Math.floor(score / 20);
      return base + extra;
    }

This makes abuse expensive while keeping normal usage smooth.
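A quick way to see the effect is to count how many back-to-back requests fit in one full bucket at different costs. The sketch below is an in-memory Python mirror of the cost table above plus a minimal token bucket. The 60-token capacity, the 2 tokens/second refill, and the `burst_allowed` helper are illustrative assumptions, not recommended values.

```python
BASE_COST = {"public_read": 1, "search": 2, "login": 5, "otp": 6, "export": 8, "payment": 10}


def request_cost(endpoint_class: str, score: int) -> int:
    """Python mirror of requestCost above: base cost plus 0..5 extra for risk."""
    return BASE_COST.get(endpoint_class, 2) + score // 20


class TokenBucket:
    """Minimal single-process token bucket that charges a variable cost per request."""

    def __init__(self, capacity: float, refill_per_sec: float, now: float = 0.0):
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.tokens = float(capacity)
        self.ts = now

    def try_consume(self, cost: float, now: float) -> bool:
        # Refill for the elapsed time, capped at capacity, then try to pay the cost.
        elapsed = max(0.0, now - self.ts)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.ts = now
        if self.tokens < cost:
            return False
        self.tokens -= cost
        return True


def burst_allowed(endpoint_class: str, score: int, capacity: int = 60) -> int:
    """Count back-to-back requests that fit in a full bucket (no time passes, so no refill)."""
    bucket = TokenBucket(capacity, refill_per_sec=2.0)
    cost = request_cost(endpoint_class, score)
    n = 0
    while bucket.try_consume(cost, now=0.0):
        n += 1
    return n


print(burst_allowed("public_read", 0))  # 60
print(burst_allowed("login", 10))       # 12
print(burst_allowed("login", 80))       # 6
print(burst_allowed("export", 100))     # 4
```

With the same bucket, a low-risk reader gets 60 requests in a burst while a high-risk export client gets 4, without any per-endpoint special casing in the limiter itself.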

3) CAPTCHA / step-up at risk thresholds (and the “challenge farm” reality)

CAPTCHAs are useful only when:

- you gate them behind risk thresholds (don’t CAPTCHA everyone)

- you accept that challenge farms exist (humans can solve challenges)

So: treat CAPTCHA as a delay + signal, not your only defense. Pair it with:

- session continuity requirements

- proof tokens bound to session/device

- stricter throttles after challenge success if behavior stays abnormal

A simple “proof token” pattern:

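    // challenge-token.js (concept)
    import crypto from "crypto";

    const SECRET = process.env.CHALLENGE_SIGNING_SECRET;
    if (!SECRET) throw new Error("CHALLENGE_SIGNING_SECRET is not set");

    function sign(payload) {
      return crypto.createHmac("sha256", SECRET).update(payload).digest("hex");
    }

    export function mintProofToken({ sub, ttlSeconds = 600 }) {
      const exp = Math.floor(Date.now() / 1000) + ttlSeconds;
      const payload = JSON.stringify({ sub, exp });
      return Buffer.from(`${payload}.${sign(payload)}`).toString("base64url");
    }

    export function verifyProofToken(token) {
      const raw = Buffer.from(token, "base64url").toString("utf8");
      // Split on the LAST dot: the JSON payload may contain dots (e.g. an email in sub),
      // while the hex signature never does.
      const idx = raw.lastIndexOf(".");
      if (idx < 0) return null;
      const payload = raw.slice(0, idx);
      const sig = Buffer.from(raw.slice(idx + 1), "utf8");
      const expected = Buffer.from(sign(payload), "utf8");
      // Constant-time comparison; timingSafeEqual throws on length mismatch, so check first.
      if (sig.length !== expected.length || !crypto.timingSafeEqual(sig, expected)) return null;
      const obj = JSON.parse(payload);
      if (obj.exp < Math.floor(Date.now() / 1000)) return null;
      return obj;
    }

Integrating with API gateways + WAF for layered protection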

Risk-driven API throttling works best as layers:

- Edge/Gateway: coarse traffic shaping + burst control

- App: identity-aware throttling + logic abuse controls

- WAF: known-bad patterns, reputation controls, and cheap blocking

NGINX: baseline per-IP throttling + sensitive route zones

    # nginx.conf (reference)

    # limit_req_zone must be declared in the http {} context
    limit_req_zone $binary_remote_addr zone=ip_global:10m rate=30r/s;
    limit_req_zone $binary_remote_addr zone=ip_login:10m rate=5r/s;
    limit_req_zone $binary_remote_addr zone=ip_search:10m rate=10r/s;

    server {
        # Return 429 instead of the default 503 when a limit is hit
        limit_req_status 429;

        location /api/ {
            limit_req zone=ip_global burst=60 nodelay;
        }

        location = /api/login {
            limit_req zone=ip_login burst=10 nodelay;
        }

        location /api/search {
            limit_req zone=ip_search burst=20 nodelay;
        }
    }

App layer: identity-aware throttling with Redis token bucket (atomic)

For real production reliability, avoid in-memory counters. Use Redis + Lua for atomic token bucket updates:

    -- redis_token_bucket.lua
    -- KEYS[1] = bucket key
    -- ARGV[1] = capacity
    -- ARGV[2] = refill_per_sec
    -- ARGV[3] = now_ms
    -- ARGV[4] = cost

    local key = KEYS[1]
    local capacity = tonumber(ARGV[1])
    local refill = tonumber(ARGV[2])
    local now = tonumber(ARGV[3])
    local cost = tonumber(ARGV[4])

    local data = redis.call("HMGET", key, "tokens", "ts")
    local tokens = tonumber(data[1])
    local ts = tonumber(data[2])

    if tokens == nil then tokens = capacity end
    if ts == nil then ts = now end

    local elapsed = math.max(0, now - ts) / 1000.0
    local new_tokens = math.min(capacity, tokens + elapsed * refill)

    if new_tokens < cost then
      redis.call("HMSET", key, "tokens", new_tokens, "ts", now)
      redis.call("EXPIRE", key, 3600)
      return {0, new_tokens}
    end

    new_tokens = new_tokens - cost
    redis.call("HMSET", key, "tokens", new_tokens, "ts", now)
    redis.call("EXPIRE", key, 3600)
    return {1, new_tokens}

Node.js wrapper (Express middleware style):

    // risk_throttle_mw.js
    import { createClient } from "redis";
    import fs from "fs";

    const redis = createClient({ url: process.env.REDIS_URL });
    await redis.connect();

    const LUA = fs.readFileSync("./redis_token_bucket.lua", "utf8");
    const sha = await redis.scriptLoad(LUA);

    function identityKey(req) {
      // Prefer stable principal: api key -> user id -> session id -> IP fallback
      const apiKey = req.headers["x-api-key"];
      const userId = req.user?.id;
      const sessionId = req.headers["x-session-id"];
      return apiKey ? `k:${apiKey}` : userId ? `u:${userId}` : sessionId ? `s:${sessionId}` : `ip:${req.ip}`;
    }

    export async function riskDrivenThrottle(req, res, next) {
      const endpointClass = req.endpointClass || "public_read";

      const ctx = {
        authenticated: Boolean(req.user),
        api_key_age_days: req.apiKeyAgeDays ?? 999,
        auth_fail_ratio: req.authFailRatio ?? 0,
        session_age_seconds: req.sessionAgeSeconds ?? 999999,
        endpoint_risk: req.endpointRisk ?? 0,
        concurrent_requests: req.concurrentRequests ?? 0,
      };

      const score = req.riskScore(ctx); // plug in your risk scorer
      const dec = req.riskDecision(score); // allow/throttle/challenge/block
      const cost = req.requestCost({ endpointClass, score });

      if (dec === "block") return res.status(403).json({ error: "Forbidden" });
      if (dec === "challenge") return res.status(401).json({ error: "Challenge required" });

      // Token bucket parameters per endpoint class
      const capacity = endpointClass === "login" ? 20 : 60;
      const refillPerSec = endpointClass === "login" ? 0.5 : 2.0;

      const key = `rt:${endpointClass}:${identityKey(req)}`;
      const nowMs = Date.now();

      const result = await redis.evalSha(sha, {
        keys: [key],
        arguments: [String(capacity), String(refillPerSec), String(nowMs), String(cost)],
      });

      const allowed = Number(result[0]) === 1;

      if (!allowed) {
        res.set("X-Risk-Score", String(score));
        res.set("Retry-After", "5");
        return res.status(429).json({ error: "Too Many Requests" });
      }

      res.set("X-Risk-Score", String(score));
      return next();
    }

This gives you real-time, identity-aware throttling that scales beyond IP-based controls.
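The middleware above sends a fixed Retry-After of 5 seconds. One possible refinement, sketched here in Python for brevity, is to derive the wait from the bucket deficit: how long the refill needs to cover the gap between the tokens left and the cost of the rejected request. The function name and the 60-second cap are assumptions. Note that Redis truncates the fractional part of the token count the Lua script returns, so the estimate errs slightly on the long side.

```python
import math


def retry_after_seconds(tokens_left: float, cost: float, refill_per_sec: float,
                        max_wait: int = 60) -> int:
    """Seconds until the bucket holds enough tokens for a request of this cost."""
    deficit = max(0.0, cost - tokens_left)
    if deficit == 0:
        return 0
    if refill_per_sec <= 0:
        return max_wait  # bucket never refills: fall back to the cap
    return min(max_wait, math.ceil(deficit / refill_per_sec))


print(retry_after_seconds(tokens_left=1, cost=9, refill_per_sec=0.5))  # 16
print(retry_after_seconds(tokens_left=0, cost=5, refill_per_sec=2.0))  # 3
```

A client that honors this value should succeed on its next attempt, which keeps retries from legitimate users to a minimum.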

Logging for detection and forensic context (don’t skip this)

Without forensics-ready logging, throttling becomes guesswork.

At minimum, log:

- request_id / trace_id

- principal (user/api key), session id

- endpoint class, risk score, decision

- auth success/fail (where relevant)

- response code + latency

- key headers (user-agent, accept-language), without storing secrets
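The checklist above can be sketched as a small record builder. Everything here is illustrative: the function name, the field names, and the header allowlist are assumptions. The one idea worth keeping is the allowlist itself, which keeps credentials such as Authorization or API key headers out of the log by construction.

```python
import json
from datetime import datetime, timezone

# Assumption: only these request headers are worth keeping, under these field names.
HEADER_ALLOWLIST = {"user-agent": "ua", "accept-language": "accept_language"}


def build_log_record(*, request_id, principal, session_id, ip, method, path,
                     endpoint_class, risk_score, decision, status, latency_ms,
                     headers, auth_ok=None):
    """Build one structured log record; principal should be an identifier, not a raw secret."""
    ts = datetime.now(timezone.utc).isoformat(timespec="milliseconds").replace("+00:00", "Z")
    record = {
        "ts": ts,
        "request_id": request_id,
        "principal": principal,
        "session_id": session_id,
        "ip": ip,
        "method": method,
        "path": path,
        "endpoint_class": endpoint_class,
        "risk_score": risk_score,
        "decision": decision,
        "status": status,
        "latency_ms": latency_ms,
    }
    if auth_ok is not None:  # only present on endpoints where auth is relevant
        record["auth_ok"] = auth_ok
    for name, value in headers.items():
        field = HEADER_ALLOWLIST.get(name.lower())
        if field:
            record[field] = value[:256]  # cap length so hostile headers cannot bloat logs
    return record


record = build_log_record(
    request_id="8f3c2a", principal="u:12345", session_id="s:ab12", ip="203.0.113.10",
    method="POST", path="/api/login", endpoint_class="login", risk_score=48,
    decision="throttle", status=429, latency_ms=34, auth_ok=False,
    headers={"User-Agent": "Mozilla/5.0", "Authorization": "Bearer not-logged"},
)
print(json.dumps(record))
```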

Example structured JSON log record

    {
      "ts": "2026-02-19T12:34:56.789Z",
      "request_id": "8f3c2a...",
      "principal": "u:12345",
      "session_id": "s:ab12...",
      "ip": "203.0.113.10",
      "method": "POST",
      "path": "/api/login",
      "endpoint_class": "login",
      "risk_score": 48,
      "decision": "throttle",
      "status": 429,
      "latency_ms": 34,
      "ua": "Mozilla/5.0 ..."
    }

If you’re building an evidence-first posture (recommended), align your logging to DFIR needs:

- https://www.pentesttesting.com/forensic-analysis-services/

- https://www.pentesttesting.com/digital-forensic-analysis-services/

And if you need help fixing the gaps found during assessment/testing:

https://www.pentesttesting.com/remediation-services/

Validation + rollback playbook (safe deployments)

Roll out risk-driven API throttling like a production feature—because it is one.

1) Deploy in “observe” mode first

- compute risk score

- log decision

- don’t enforce yet

2) Add enforcement by endpoint class

Start with:

login, otp, password-reset, export, search

3) Protect with feature flags

- global kill switch

- per-route enforcement toggle

- per-tenant override
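Observe mode and the three flags can share one small gate. The sketch below is one possible shape, not a prescribed design: the `ThrottleFlags` fields and the precedence order (kill switch, then tenant override, then per-route toggle) are assumptions. When enforcement is off, the computed decision is still returned so it can be logged.

```python
from dataclasses import dataclass, field


@dataclass
class ThrottleFlags:
    kill_switch: bool = False                             # True disables all enforcement
    enforced_routes: set = field(default_factory=set)     # endpoint classes with enforcement on
    tenant_overrides: dict = field(default_factory=dict)  # tenant_id -> True/False


def effective_decision(decision: str, endpoint_class: str, tenant_id: str,
                       flags: ThrottleFlags) -> tuple:
    """Return (action, enforced). In observe mode the action is always "allow"."""
    if flags.kill_switch:
        enforced = False
    elif tenant_id in flags.tenant_overrides:
        enforced = flags.tenant_overrides[tenant_id]
    else:
        enforced = endpoint_class in flags.enforced_routes
    action = decision if enforced else "allow"
    return action, enforced


flags = ThrottleFlags(enforced_routes={"login", "otp"}, tenant_overrides={"t-big": False})

print(effective_decision("block", "login", "t-1", flags))       # ('block', True)
print(effective_decision("block", "search", "t-1", flags))      # ('allow', False)
print(effective_decision("throttle", "login", "t-big", flags))  # ('allow', False)

flags.kill_switch = True
print(effective_decision("block", "login", "t-1", flags))       # ('allow', False)
```

Logging both the computed decision and the `enforced` flag lets you measure what enforcement would have done before you turn it on.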

4) Monitor the right metrics

- 2xx/4xx/5xx rates per endpoint

- 429 rates split by (tenant, endpoint, auth status)

- login success rate & conversion

- latency (p50/p95/p99)

- support tickets / customer complaints

5) Rollback rules

Rollback immediately if:

- 429s spike for authenticated users

- error budgets burn due to throttling logic

- revenue-critical flows degrade
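These rules are easier to act on when they are written down as checks against a metrics window. The sketch below is a rough illustration. The metric names, the choice of login success rate as the revenue-critical flow, and every threshold are placeholders to be replaced with values from your own baselines.

```python
def rollback_reasons(window: dict, baseline: dict, *,
                     max_auth_429_rate: float = 0.02,
                     max_throttle_error_rate: float = 0.001,
                     max_success_drop: float = 0.05) -> list:
    """Return the rollback rules that fired for this metrics window (empty list = healthy)."""
    reasons = []

    # Rule 1: 429s spike for authenticated users
    auth_total = window["auth_requests"]
    if auth_total and window["auth_429"] / auth_total > max_auth_429_rate:
        reasons.append("auth_429_spike")

    # Rule 2: errors raised by the throttling path itself eat into the error budget
    total = window["requests"]
    if total and window["throttle_errors"] / total > max_throttle_error_rate:
        reasons.append("throttle_errors")

    # Rule 3: a revenue-critical flow degrades (login success rate used as the example)
    if baseline["login_success_rate"] - window["login_success_rate"] > max_success_drop:
        reasons.append("login_success_drop")

    return reasons


baseline = {"login_success_rate": 0.93}
healthy = {"requests": 5000, "auth_requests": 1000, "auth_429": 5,
           "throttle_errors": 0, "login_success_rate": 0.92}
degraded = {"requests": 5000, "auth_requests": 1000, "auth_429": 50,
            "throttle_errors": 25, "login_success_rate": 0.80}

print(rollback_reasons(healthy, baseline))   # []
print(rollback_reasons(degraded, baseline))  # ['auth_429_spike', 'throttle_errors', 'login_success_drop']
```

Any non-empty result would be the trigger to flip the kill switch first and investigate afterwards.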

Free Website Vulnerability Scanner tool Dashboard

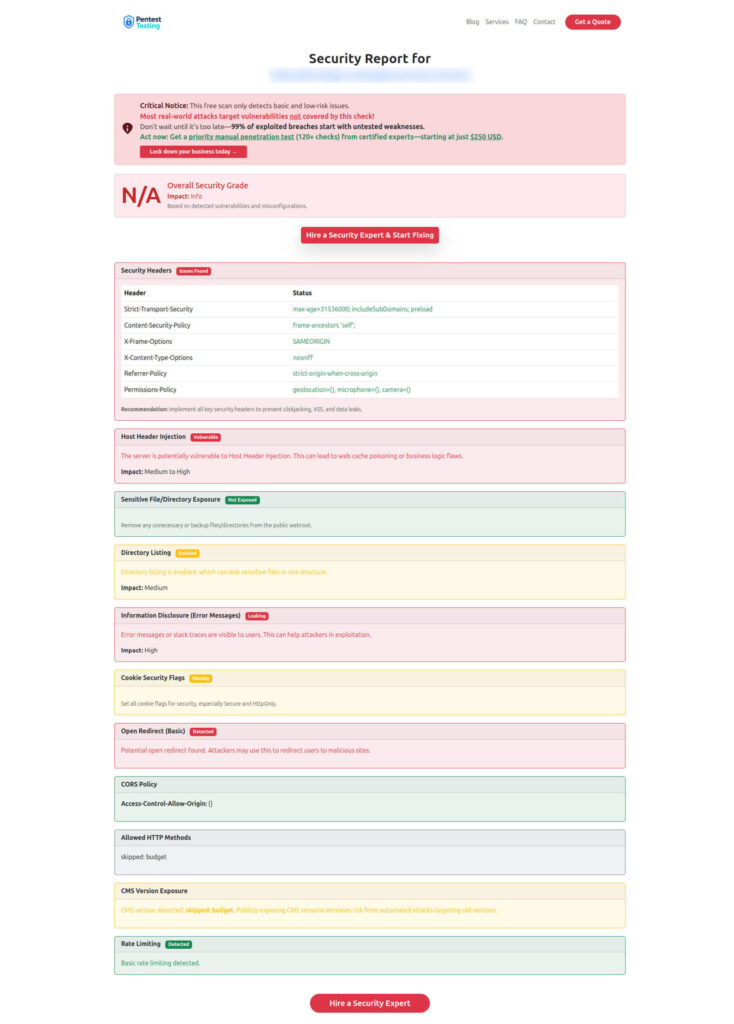

Sample report from the tool to check Website Vulnerability

Related recent reads from our blog

- Webhook hardening patterns (highly relevant to API abuse): https://www.pentesttesting.com/webhook-security-best-practices/

- Forensics-first logging and retention: https://www.pentesttesting.com/forensic-readiness-smb-log-retention/

- Rapid DFIR checklist for high-tempo incidents: https://www.pentesttesting.com/rapid-dfir-checklist-patch-to-proof/

- Post-patch “prove you’re clean” playbook: https://www.pentesttesting.com/post-patch-forensics-playbook-2026/

- Deception strategies that create high-signal alerts: https://www.pentesttesting.com/endpoint-deception-strategies/

Practical next steps

If you want to implement risk-driven API throttling without breaking production, do this in order:

1) Classify endpoints by sensitivity (login/otp/export/search/payment).

2) Add observe-mode risk scoring + structured logs.

3) Enforce token bucket throttling per identity (Redis-based).

4) Add step-up challenges for mid-risk.

5) Review outcomes weekly and tune thresholds based on evidence.

For a professional review/testing of your current API controls:

https://www.pentesttesting.com/api-pentest-testing-services/

For prioritized fixes and hardening support:

https://www.pentesttesting.com/remediation-services/

For a program-level gap roadmap:

https://www.pentesttesting.com/risk-assessment-services/
