7 Proven Steps for CMMC Level 2 Remediation (2025)

Why this matters now

CMMC Level 2 is entering its phased rollout in 2025. The teams that come out ahead will fix fast, collect evidence as they go, and make their configurations ODP-ready, so an assessor can see that your technical settings actually match the values your policies define. This guide takes a hands-on, code-heavy approach through an 800-171r3 + ODP lens and builds an audit-grade evidence trail your C3PAO reviewer can follow. The CMMC program rule still assesses Level 2 against SP 800-171 Rev. 2, so keep your SSP mapped to Rev. 2 requirement numbers while you adopt r3-style ODPs.

Looking for a fast path to board reporting? Read our NIST CSF 2.0 14-Day Board-Ready Metrics Plan!

Quick start: Run an external exposure sweep with our Website Vulnerability Scanner Online Free, then convert exploitable items into Level-2 backlog tickets.

What “ODP-ready” really means (in practice)

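Organization-defined parameters (ODPs) are the values your organization assigns wherever a requirement leaves a parameter open (e.g., session timeout = 15 minutes; log retention = 365 days). NIST SP 800-171r3 introduced them to the 800-171 requirements. “ODP-ready” means: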

- You’ve chosen concrete values that fit your risk profile.

- Your configs/code enforce those values.

- You’ve captured artifacts—configs, PRs, deployment logs, SIEM settings, and retest screenshots—to prove it.

Below are 7 proven steps to apply ODPs, map to 800-171r3, and produce C3PAO-friendly evidence.

Step 1 — Declare your ODPs (source of truth)

Create a single, version-controlled file to anchor your parameters.

```yaml
# odps.yaml (NIST 800-171r3 flavored)
session:
  idle_timeout_seconds: 900      # AC-12-ish parameter (example)
  absolute_timeout_minutes: 480
auth:
  jwt_exp_minutes: 15
  mfa_required: true
logging:
  retention_days: 365
  time_sync: 'NTP: pool.ntp.org'
network:
  tls_min_version: '1.2'
  hsts_max_age_seconds: 31536000
backups:
  frequency_hours: 24
  retention_days: 30
user_access:
  review_frequency_days: 90
  disable_inactive_days: 30
```

Tip: Reference this file from policy, IaC, pipelines, and acceptance tests so a single change updates everything consistently.
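One way to act on that tip is to generate the enforcement values from the ODP file instead of retyping them. The Python sketch below assumes odps.yaml has already been loaded into a dict (for example with PyYAML's yaml.safe_load). render_settings and the 300-second SSH keepalive interval are illustrative names and choices, not part of any tool.

```python
# Minimal sketch: derive enforcement settings from the ODP source of truth.
# Assumes odps.yaml was already parsed into a dict (e.g. with yaml.safe_load).
# render_settings and the 300 s keepalive interval are illustrative choices.

def render_settings(odps: dict, ssh_keepalive_secs: int = 300) -> dict:
    idle = odps["session"]["idle_timeout_seconds"]
    if idle % ssh_keepalive_secs:
        raise ValueError("idle timeout must be a multiple of the keepalive interval")
    tls_min = odps["network"]["tls_min_version"]
    # Allowed protocol list starts at the ODP minimum (only 1.2 and 1.3 handled here).
    protocols = [v for v in ("1.2", "1.3") if float(v) >= float(tls_min)]
    return {
        "shell_profile": f"export TMOUT={idle}\nreadonly TMOUT",
        "sshd_config": (
            f"ClientAliveInterval {ssh_keepalive_secs}\n"
            f"ClientAliveCountMax {idle // ssh_keepalive_secs}"
        ),
        "windows_inactivity_secs": idle,
        "jwt_expires_in": f"{odps['auth']['jwt_exp_minutes']}m",
        "nginx_ssl_protocols": " ".join(f"TLSv{v}" for v in protocols) + ";",
        "hsts_header": f"max-age={odps['network']['hsts_max_age_seconds']}; includeSubDomains",
        "terraform_retention_days": odps["logging"]["retention_days"],
    }

if __name__ == "__main__":
    example = {
        "session": {"idle_timeout_seconds": 900, "absolute_timeout_minutes": 480},
        "auth": {"jwt_exp_minutes": 15, "mfa_required": True},
        "logging": {"retention_days": 365},
        "network": {"tls_min_version": "1.2", "hsts_max_age_seconds": 31536000},
    }
    for name, value in render_settings(example).items():
        print(f"--- {name}\n{value}")
```

You can template these strings into your Ansible, Terraform, or GPO inputs, or assert them in an acceptance test. Either way, the settings in Steps 2 to 4 stay tied to one file.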

Step 2 — Enforce session timeouts everywhere

Windows (machine inactivity limit)

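```powershell
# Run as admin: 15-minute machine inactivity limit (ODP session.idle_timeout_seconds = 900).
# InactivityTimeoutSecs is "Interactive logon: Machine inactivity limit"; it locks the session.
$key = "HKLM:\SOFTWARE\Microsoft\Windows\CurrentVersion\Policies\System"
# Create the key only if missing: New-Item -Force on an existing key can wipe its other values.
if (-not (Test-Path $key)) { New-Item -Path $key -Force | Out-Null }
New-ItemProperty -Path $key -Name "InactivityTimeoutSecs" -PropertyType DWord -Value 900 -Force | Out-Null
gpupdate /force
```

Linux shells (auto-logout on idle)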

```bash
# /etc/profile.d/odps.sh: idle interactive shells exit after 900 s
export TMOUT=900
readonly TMOUT
```

SSH idle timeout

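```
# /etc/ssh/sshd_config
# Drops clients that stop answering keepalives after 300 s * 3 = 15 min.
# An idle but responsive client is NOT disconnected by these two lines; TMOUT
# ends idle shells, and OpenSSH 9.2+ can also close idle session channels
# with: ChannelTimeout session=15m
ClientAliveInterval 300
ClientAliveCountMax 3
```

```bash
# Validate before restarting; the unit is named "ssh" on Debian/Ubuntu
sshd -t && systemctl restart sshd
```

Web app (JWT expiry, Node.js/Express)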

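```javascript
// ODP: auth.jwt_exp_minutes = 15 (jsonwebtoken derives `exp` from expiresIn)
const jwt = require("jsonwebtoken");
const token = jwt.sign({ sub: String(user.id) }, process.env.JWT_SECRET, { algorithm: "HS256", expiresIn: "15m" });
// On every request: pin the algorithm; an expired token throws TokenExpiredError.
const claims = jwt.verify(token, process.env.JWT_SECRET, { algorithms: ["HS256"] });
```

Step 3 — Make log retention & time sync provable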

AWS CloudTrail + S3 lifecycle (Terraform)

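```hcl
variable "retention_days" {
  default = 365 # ODP: logging.retention_days
}

# Assumes aws_s3_bucket.trail_logs is the CloudTrail log bucket defined elsewhere.
resource "aws_s3_bucket_lifecycle_configuration" "logs" {
  bucket = aws_s3_bucket.trail_logs.id

  rule {
    id     = "trail-retention"
    status = "Enabled"
    filter {} # empty filter: apply the rule to every object in the bucket

    expiration {
      days = var.retention_days
    }
  }
}

resource "aws_cloudwatch_log_group" "trail" {
  name              = "/aws/cloudtrail/org"
  retention_in_days = var.retention_days
}
```

Azure Log Analytics (CLI)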

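```bash
# Set workspace retention to 365 days (ODP: logging.retention_days)
az monitor log-analytics workspace update \
  -g MyRG -n MyLAW --retention-time 365
```

NTP on Linux

```bash
# Enables systemd-timesyncd; to match the ODP, set NTP=pool.ntp.org under [Time]
# in /etc/systemd/timesyncd.conf
timedatectl set-ntp true
timedatectl status | grep "System clock synchronized"
```

Evidence to capture: Terraform plan/apply output, Azure CLI command logs, timedatectl status output, and screenshots of the console settings.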
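A small script can compare the exported settings with the ODP, which makes the retention and time-sync evidence self-checking. This is a sketch. It assumes you saved the JSON from az monitor log-analytics workspace show and aws logs describe-log-groups, plus the key=value output of timedatectl show. The retentionInDays and NTPSynchronized field names come from those tools' usual output, so confirm them against your own exports.

```python
import json

def check_retention(workspace_json: str, log_groups_json: str, required_days: int) -> list[str]:
    """Compare exported retention settings against the logging.retention_days ODP."""
    findings = []
    workspace = json.loads(workspace_json)
    days = workspace.get("retentionInDays", 0)
    if days < required_days:
        findings.append(f"Log Analytics workspace {workspace.get('name')}: {days} < {required_days} days")
    for group in json.loads(log_groups_json).get("logGroups", []):
        # No retentionInDays key means the CloudWatch group never expires events.
        days = group.get("retentionInDays")
        if days is not None and days < required_days:
            findings.append(f"CloudWatch {group.get('logGroupName')}: {days} < {required_days} days")
    return findings

def check_time_sync(timedatectl_show: str) -> list[str]:
    """Parse `timedatectl show` key=value output and require NTPSynchronized=yes."""
    props = dict(line.split("=", 1) for line in timedatectl_show.splitlines() if "=" in line)
    return [] if props.get("NTPSynchronized") == "yes" else ["clock not NTP-synchronized"]
```

A typical run saves az monitor log-analytics workspace show -g MyRG -n MyLAW, aws logs describe-log-groups --log-group-name-prefix /aws/cloudtrail, and timedatectl show to files, then passes their contents in. An empty findings list, stored next to the raw exports, is a compact proof for the evidence bundle.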

Step 4 — Lock down TLS & headers (network ODPs)

Nginx TLS and HSTS

```nginx
# Inside the TLS server {} block (ODPs: network.tls_min_version, network.hsts_max_age_seconds)
ssl_protocols TLSv1.2 TLSv1.3;
add_header Strict-Transport-Security "max-age=31536000; includeSubDomains" always;
add_header X-Content-Type-Options "nosniff" always;
add_header X-Frame-Options "DENY" always;
```

Apache

```apache
# In the TLS VirtualHost; the Header directives require mod_headers
SSLProtocol all -SSLv3 -TLSv1 -TLSv1.1
Header always set Strict-Transport-Security "max-age=31536000; includeSubDomains"
Header always set X-Content-Type-Options "nosniff"
Header always set X-Frame-Options "DENY"
```

Evidence: vhost files, a PR with diffs, and a post-deployment scan result attached to the ticket.
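You can complement the post-deployment scan with a header check that runs from CI. This is a minimal sketch. It assumes the ODP minimum HSTS max-age of 31536000 seconds and lower-cased header names. fetch_headers and check_headers are hypothetical helpers. TLS protocol versions still need a separate probe; for example, openssl s_client with -tls1_1 against the host should fail.

```python
import re
from urllib.request import urlopen

def fetch_headers(url: str) -> dict:
    """Live request; returns response headers with lower-cased names."""
    with urlopen(url, timeout=10) as resp:
        return {name.lower(): value for name, value in resp.headers.items()}

def check_headers(headers: dict, hsts_min_age: int) -> list[str]:
    """Compare lower-cased response headers against the network ODPs."""
    findings = []
    hsts = headers.get("strict-transport-security", "")
    match = re.search(r"max-age=(\d+)", hsts)
    if not match or int(match.group(1)) < hsts_min_age:
        findings.append(f"HSTS missing or max-age below {hsts_min_age}: {hsts!r}")
    if headers.get("x-content-type-options", "").lower() != "nosniff":
        findings.append("X-Content-Type-Options is not nosniff")
    if headers.get("x-frame-options", "").upper() != "DENY":
        findings.append("X-Frame-Options is not DENY")
    return findings
```

For example, check_headers(fetch_headers("https://example.com/"), 31536000) returns an empty list when all three headers meet the ODP. Save that output with the ticket as the retest artifact.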

Step 5 — Enforce access reviews & deprovisioning

Disable inactive users (example: Linux + SSO directory export)
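The Linux script that follows covers local accounts. For the SSO directory export named in this heading, you can apply the same 30-day rule to a CSV export. This sketch assumes hypothetical column names user and last_sign_in, with ISO 8601 timestamps that carry a UTC offset and are blank when the account never signed in. Rename them to match what your identity provider actually exports.

```python
import csv
import io
from datetime import datetime, timedelta, timezone
from typing import Optional

def inactive_users(csv_text: str, days: int = 30, now: Optional[datetime] = None) -> list[str]:
    """Users whose last sign-in is older than `days`, or who never signed in."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=days)
    stale = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        raw = (row.get("last_sign_in") or "").strip()
        # fromisoformat needs an offset such as +00:00 to compare with the aware cutoff
        if not raw or datetime.fromisoformat(raw) < cutoff:
            stale.append(row["user"])
    return stale
```

Feed the returned names into your IdP's disable workflow. Keep the CSV and the result list together as the deprovisioning evidence.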

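```bash
#!/usr/bin/env bash
# Lock local accounts (UID >= 1000) with no login in the last 30 days
# (ODP: user_access.disable_inactive_days). Run as root.
# `lastlog -b 30` lists records older than 30 days, which should include never-logged-in
# users; some newer distros ship lastlog2 instead, so check which tool you have.
DAYS=30
lastlog -b "$DAYS" | awk 'NR > 1 {print $1}' | while read -r user; do
  uid=$(id -u "$user") || continue
  { [ "$uid" -ge 1000 ] && [ "$uid" -ne 65534 ]; } || continue   # skip system accounts and nobody
  # --lock disables the password; --expiredate 1 also blocks SSH-key logins
  usermod --lock --expiredate 1 "$user" && echo "locked: $user"
done
```

Quarterly access review reminder (GitHub Actions)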

```yaml
name: Quarterly Access Review
on:
  schedule:
    - cron: "0 9 1 */3 *" # 09:00 UTC on the 1st of Jan, Apr, Jul, and Oct
jobs:
  notify:
    runs-on: ubuntu-latest
    steps:
      - name: Post Slack reminder
        env:
          # Assumes the Slack incoming-webhook URL is stored as a repository secret named SLACK_WEBHOOK
          SLACK_WEBHOOK: ${{ secrets.SLACK_WEBHOOK }}
        run: |
          curl -sS -X POST -H 'Content-type: application/json' \
            --data '{"text":"ODP: Access review due this week. Export and attest."}' \
            "$SLACK_WEBHOOK"
```

Step 6 — Turn every fix into C3PAO-ready evidence

Automated “evidence bundle” collector

```bash
#!/usr/bin/env bash
# Run with sudo: sshd_config is root-readable only on some distros. Requires zip.
set -euo pipefail

STAMP=$(date +%Y%m%d-%H%M%S)
DEST="evidence/$STAMP"
mkdir -p "$DEST"

# Capture configs
cp /etc/ssh/sshd_config "$DEST/"
cp /etc/nginx/nginx.conf "$DEST/" 2>/dev/null || true
timedatectl status > "$DEST/timedatectl.txt"

# Save command proofs
echo "az monitor log-analytics workspace show ..." > "$DEST/commands.txt"

# Hash everything
( cd "$DEST" && sha256sum * > SHA256SUMS.txt )

zip -r "evidence-bundle-$STAMP.zip" "$DEST"
echo "Evidence bundle created: evidence-bundle-$STAMP.zip"
```

Redact PII in logs before sharing (Python)

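```python
import re
import sys

# Heuristic patterns: SSN-like (123-45-6789), 16-digit card numbers, email addresses.
# Review the output; spaced or dashed card numbers are not caught.
PII = re.compile(r"(\b\d{3}-\d{2}-\d{4}\b|\b\d{16}\b|[\w.-]+@[\w.-]+)")

# Usage: python redact.py < app.log > app.redacted.log
for line in sys.stdin:
    print(PII.sub("[REDACTED]", line), end="")
```

Attach the ZIP and sanitized logs to your ticket, and link the PR and the deployment job run. That chain of change, deployment, configuration, and retest artifacts lets an assessor trace each fix end to end.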
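Re-verify SHA256SUMS.txt before the bundle leaves your hands, and again on the reviewer's side. On Linux, running sha256sum -c SHA256SUMS.txt inside the evidence folder does this; the Python sketch below does the same on any OS. verify_sums and verify_bundle are illustrative names. The parser assumes the standard sha256sum line format: hash, whitespace, an optional * for binary mode, then the file name.

```python
import hashlib
from pathlib import Path

def verify_sums(sums_text: str, read_file) -> list[str]:
    """Return names whose content is missing or no longer matches SHA256SUMS.txt.

    read_file(name) must return the file's bytes, or None if it does not exist.
    """
    failures = []
    for line in sums_text.splitlines():
        if not line.strip():
            continue
        expected, name = line.split(maxsplit=1)
        name = name.lstrip("*")  # sha256sum prefixes binary-mode entries with '*'
        data = read_file(name)
        if data is None or hashlib.sha256(data).hexdigest() != expected:
            failures.append(name)
    return failures

def verify_bundle(bundle_dir: str) -> list[str]:
    """Verify an unzipped evidence folder such as evidence/<STAMP>."""
    root = Path(bundle_dir)

    def read_file(name: str):
        path = root / name
        return path.read_bytes() if path.is_file() else None

    return verify_sums((root / "SHA256SUMS.txt").read_text(), read_file)
```

An empty list from verify_bundle means every captured file is byte-for-byte what was hashed at collection time.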

Step 7 — Validate, retest, and show “before/after”

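Use a short CI/CD job to document a post-change web exposure check and store artifacts. As written, the scan itself is a manual browser step; the job records that step and uploads whatever lands in artifacts/.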

```yaml
name: Post-Change Exposure Check
on: [workflow_dispatch]
jobs:
  scan:
    runs-on: ubuntu-latest
    steps:
      - name: Run external sweep
        run: |
          # Document the check; store raw results + HTML report artifact
          curl -s https://free.pentesttesting.com/ > scan-landing.html
          echo "Manual step: run site scan in browser & upload report to artifacts/"
      - uses: actions/upload-artifact@v4
        with:
          name: exposure-check
          path: |
            scan-landing.html
            artifacts/**
```

Screenshot: Free Website Vulnerability Scanner landing page
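To show a before/after, reduce the two scan results to fixed, still-open, and new findings. This sketch assumes each scan was exported as a list of findings carrying a stable id field. That shape is hypothetical, so map it from whatever report format your scanner produces.

```python
def compare_scans(before: list[dict], after: list[dict]) -> dict:
    """Split findings into fixed / still_open / new using an assumed stable 'id' field."""
    before_ids = {finding["id"] for finding in before}
    after_ids = {finding["id"] for finding in after}
    return {
        "fixed": sorted(before_ids - after_ids),
        "still_open": sorted(before_ids & after_ids),
        "new": sorted(after_ids - before_ids),
    }

def summary(result: dict) -> str:
    """One-line summary suitable for a ticket comment."""
    return ", ".join(f"{key}={len(ids)}" for key, ids in result.items())
```

Attaching the full result to the remediation ticket, alongside both raw reports, gives the "before/after" in one artifact. Treat any entries under new as their own backlog items.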

30/60/90-day CMMC L2 remediation plan (assign an owner and SLA to each row)

| Window | Focus | Examples | Evidence you’ll keep |
|---|---|---|---|
| Day 0–30 | High-impact ODPs | Session/JWT timeouts; TLS/HSTS; SSH/Windows idle | Configs, PRs, deploy logs, header scans |
| Day 31–60 | Logging & monitoring | 365-day retention; time sync; alerting rules | IaC plans, SIEM screenshots, alert tests |
| Day 61–90 | Access & resilience | Quarterly reviews; deprovisioning; backup tests | Review sign-offs, disablement logs, restore proof |
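If the plan starts on a fixed kickoff date, each window's due date is simple arithmetic, which makes overdue rows easy to flag in a weekly report. This is a minimal sketch. The kickoff date, window labels, and done set are illustrative inputs, and owners would come from your ticketing system.

```python
from datetime import date, timedelta

# Window label, focus, and the day offset (from kickoff) at which the window is due.
WINDOWS = [
    ("Day 0-30", "High-impact ODPs", 30),
    ("Day 31-60", "Logging & monitoring", 60),
    ("Day 61-90", "Access & resilience", 90),
]

def plan_due_dates(kickoff: date) -> list[tuple[str, str, date]]:
    """Return (label, focus, due_date) for each window."""
    return [(label, focus, kickoff + timedelta(days=end)) for label, focus, end in WINDOWS]

def overdue(kickoff: date, today: date, done: set[str]) -> list[str]:
    """Labels whose due date has passed and that are not marked done."""
    return [label for label, _, due in plan_due_dates(kickoff) if due < today and label not in done]

if __name__ == "__main__":
    for label, focus, due in plan_due_dates(date(2025, 1, 6)):
        print(f"{label:<10} {focus:<22} due {due.isoformat()}")
```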

Map findings to 800-171r3 controls (working model)

```yaml
# controls-map.yaml (excerpt)
# Keys use SP 800-53 control IDs as a working cross-reference;
# map each one to its 800-171r3 requirement number in your SSP.
AC-12:  # Session termination
  odp: session.idle_timeout_seconds
  evidence:
    - /etc/ssh/sshd_config
    - group-policy/registry-exports
    - web/jwt-config.md
AU-11:  # Retention
  odp: logging.retention_days
  evidence:
    - terraform/cloudtrail.tf
    - az/log-analytics-retention.txt
SC-13:  # Cryptographic protection (TLS in transit also maps to SC-8)
  odp: network.tls_min_version
  evidence:
    - nginx/nginx.conf
    - apache/ssl.conf
IA-2:   # MFA
  odp: auth.mfa_required
  evidence:
    - idp/policy-export.json
```

Add “proof of fix” checks your assessors will love
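A cheap first proof-of-fix check is structural. Every control in controls-map.yaml should point at an ODP that exists in odps.yaml and at evidence files that exist. This sketch assumes both YAML files are already loaded into dicts (for example with PyYAML). check_controls_map is a hypothetical helper, and its file-existence test defaults to os.path.exists.

```python
import os

_MISSING = object()

def resolve(odps: dict, dotted: str):
    """Walk a dotted ODP path such as 'session.idle_timeout_seconds'."""
    node = odps
    for part in dotted.split("."):
        if not isinstance(node, dict) or part not in node:
            return _MISSING
        node = node[part]
    return node

def check_controls_map(controls: dict, odps: dict, exists=os.path.exists) -> list[str]:
    """Return human-readable problems; an empty list means the map is consistent."""
    problems = []
    for control, entry in controls.items():
        if resolve(odps, entry["odp"]) is _MISSING:
            problems.append(f"{control}: ODP {entry['odp']} not defined in odps.yaml")
            continue
        for path in entry.get("evidence", []):
            if not exists(path):
                problems.append(f"{control}: missing evidence {path}")
    return problems
```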

KQL (Azure/M365) — suspicious admin actions in the last 24h

```kusto
AuditLogs
| where TimeGenerated > ago(24h)
| where Result =~ "success"
| where OperationName has_any ("Add service principal", "Update app role assignment", "Reset user password")
| project TimeGenerated, OperationName, InitiatedBy, TargetResources, ResultDescription
```

SQL — Who changed access last quarter?

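```sql
-- PostgreSQL; access_change_audit stands in for your own audit table
SELECT actor, action, target, ts
FROM access_change_audit
WHERE ts >= DATE_TRUNC('quarter', NOW()) - INTERVAL '3 months'
  AND ts <  DATE_TRUNC('quarter', NOW())
ORDER BY ts;
```

CI guardrail — block merges if ODPs drift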

- name: Verify ODP drift

run: |

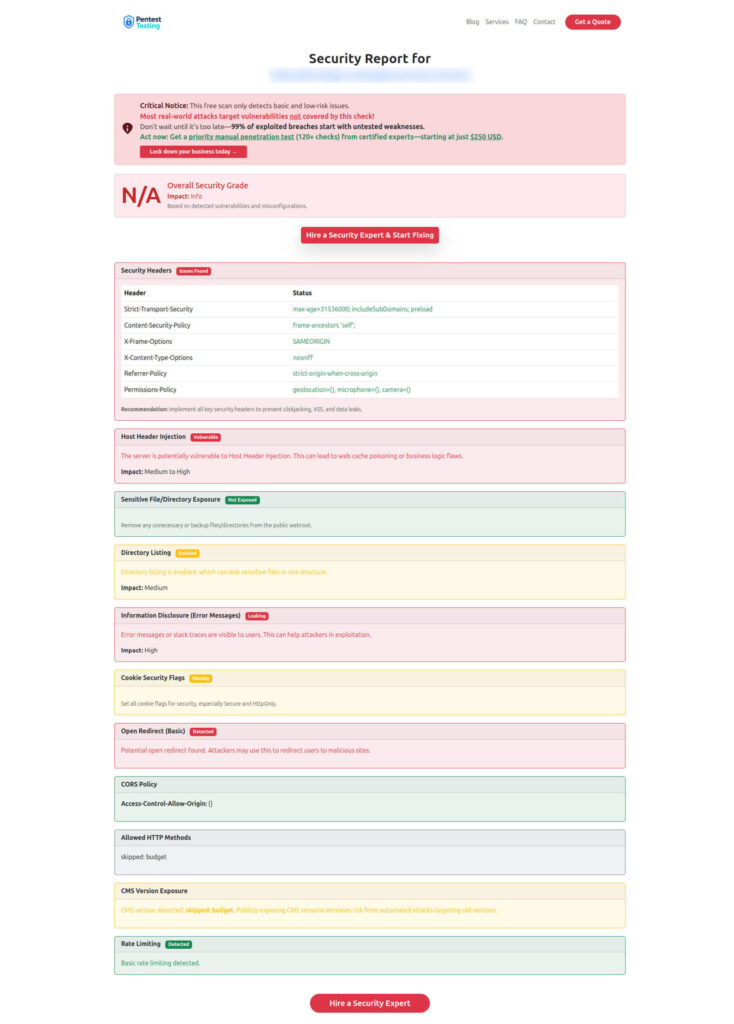

jq -r '.session.idle_timeout_seconds' odps.json | grep -qx '900'Sample Report Screenshot to check Website Vulnerability — After a scan
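The one-line guardrail above only pins the value inside the ODP file. To catch drift between the ODP and what is actually configured, a CI step can parse the rendered configs and fail when the list of findings is non-empty. This sketch assumes sshd values in plain seconds and the /etc/profile.d/odps.sh format from Step 2. sshd_values and drift_findings are illustrative names.

```python
import re

def sshd_values(text: str) -> dict:
    """Global sshd_config keywords (lower-cased) -> first value seen before any Match block."""
    values = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        parts = re.split(r"[\s=]+", line, maxsplit=1)
        key = parts[0].lower()
        if key == "match":
            break  # settings after Match apply only to matching connections
        # sshd generally uses the first value it reads for a keyword
        values.setdefault(key, parts[1] if len(parts) > 1 else "")
    return values

def drift_findings(odps: dict, sshd_text: str, profile_text: str) -> list[str]:
    """Compare deployed sshd and shell settings with session.idle_timeout_seconds."""
    idle = odps["session"]["idle_timeout_seconds"]
    findings = []
    ssh = sshd_values(sshd_text)
    interval = int(ssh.get("clientaliveinterval", "0"))  # sshd default: 0 (disabled)
    count = int(ssh.get("clientalivecountmax", "3"))     # sshd default: 3
    if interval == 0 or interval * count > idle:
        findings.append(f"sshd keepalive window {interval * count}s exceeds ODP {idle}s or is disabled")
    tmout = re.search(r"^\s*(?:export\s+)?TMOUT=(\d+)", profile_text, re.MULTILINE)
    if not tmout or int(tmout.group(1)) > idle:
        findings.append(f"TMOUT missing or above ODP {idle}s")
    return findings
```

In a pipeline, read the rendered files, print the findings, and exit with a non-zero status when the list is not empty so the merge is blocked.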

Related services (fastest path to done)

- Risk Assessment Services — scope, triage, and prioritize your Level-2 backlog. https://www.pentesttesting.com/risk-assessment-services/

- Remediation Services — hands-on fixes, IaC/PRs, and evidence packaging for audits. https://www.pentesttesting.com/remediation-services/

Recently on our blog (keep learning)

- DORA TLPT 2025: 7 Powerful Moves to Fix First — a practical, fix-first approach that aligns well with evidence-driven remediation. https://www.pentesttesting.com/dora-tlpt-2025/

- ASVS 5.0 Remediation: 12 Battle-Tested Fixes — great patterns you can reuse in CMMC workstreams. https://www.pentesttesting.com/asvs-5-0-remediation/

- Android Security Bulletin October 2025: Fleet Triage — monthly patterns for triage and artifact capture. https://www.pentesttesting.com/android-security-bulletin-october-2025/

- Explore more posts on the Pentest Testing Corp Blog. https://www.pentesttesting.com/blog/

Final Note

Need a CMMC Level 2 remediation partner who ships fixes and artifacts?

👉 Start with a quick consult via our Risk Assessment or Remediation pages, or email [email protected].

P.S. If you want a “done-for-you” plan, we’ll lift your ODPs into IaC, wire the pipelines, and hand you a clean CMMC Level 2 remediation evidence bundle—ready for review.

Next up: Harden your API/JSON endpoints against XSSI in OpenCart with our 5-step playbook →

https://www.pentesttesting.com/prevent-xssi-attack-in-opencart/

🔐 Frequently Asked Questions (FAQs)

Find answers to commonly asked questions about CMMC Level 2 Remediation.