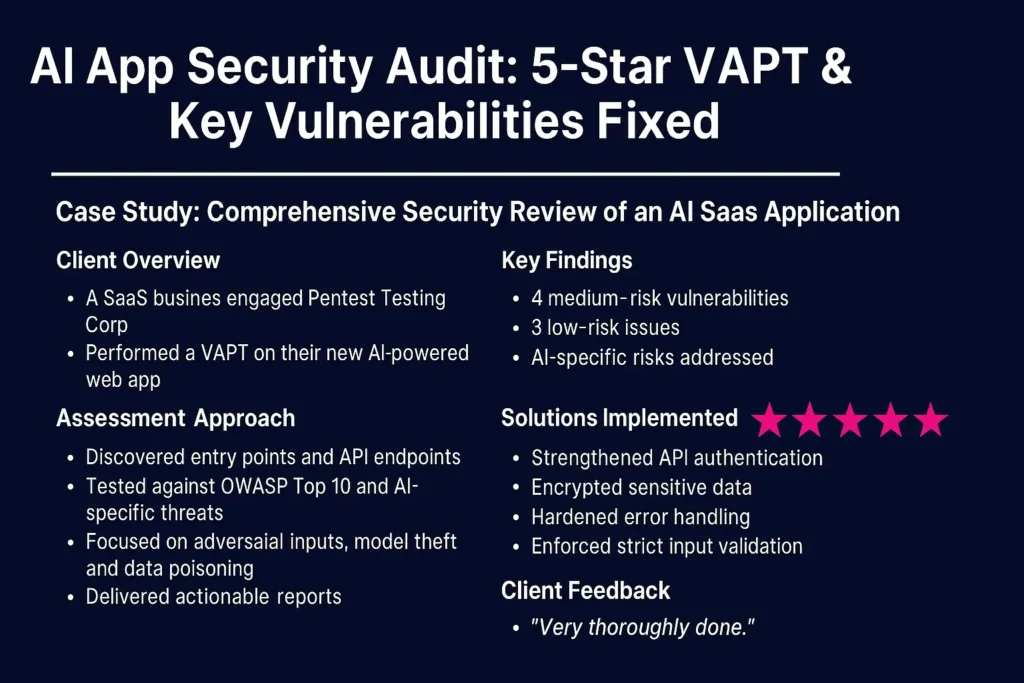

Case Study: AI App Security Audit—Risks Found & Remediated

Client Background

A SaaS company in Marion, United States, partnered with Pentest Testing Corp to perform a comprehensive security review of its new AI-driven web application. The primary objective was to identify and resolve vulnerabilities before launch.

Assessment Methodology

- Reconnaissance & Enumeration: Mapped all app endpoints, APIs, and model-serving interfaces for risk exposure.

- Vulnerability Scanning & Penetration Testing: Targeted OWASP Top 10 risks, insecure authentication, prompt injection, adversarial attacks, and API flaws.

- AI-Specific Security Checks: Tested for AI-related issues including model theft, data poisoning, input manipulation, and privacy risks.

- Reporting & Remediation: Delivered a prioritized, actionable report with remediation guidance.

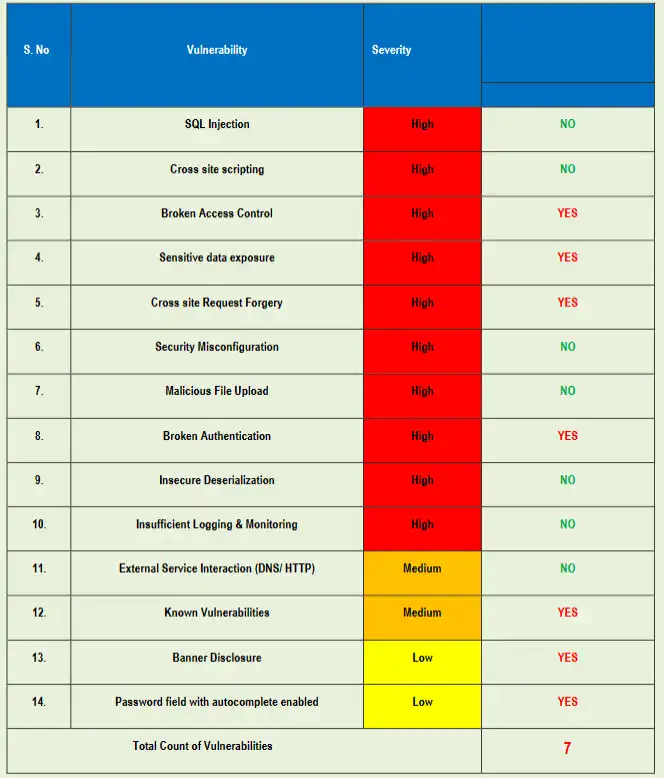

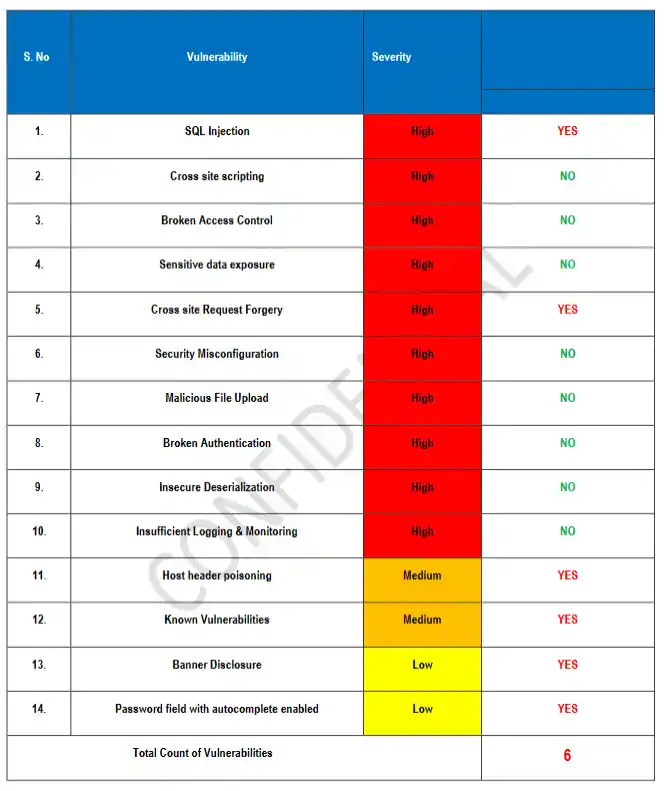

Key Security Issues Identified

1. Weak API Authentication

APIs for model inference and data exchange lacked strong authentication, risking unauthorized access.

Solution:

Deployed secure token-based API authentication, enabled rate limiting, and implemented detailed access logging.
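The three controls named above can work together in one request check. The following is a minimal sketch that assumes a single-process service with an in-memory token store. It compares tokens in constant time, gives each client a token bucket for rate limiting, and writes each decision to an access log. `API_TOKENS`, `TokenBucket`, and `authorize_request` are hypothetical names for illustration, not the client's actual code.

```python
import hmac
import logging
import time

logging.basicConfig(level=logging.INFO)
access_log = logging.getLogger("api.access")

# Hypothetical token store: client ID -> secret token (in practice, load from a secrets manager)
API_TOKENS = {"client-a": "s3cr3t-token-a"}


class TokenBucket:
    """Allows `capacity` requests in a burst, refilled at `rate` tokens per second."""

    def __init__(self, capacity: int, rate: float) -> None:
        self.capacity = capacity
        self.rate = rate
        self.tokens = float(capacity)
        self.updated = time.monotonic()

    def allow(self) -> bool:
        now = time.monotonic()
        # Refill in proportion to elapsed time, capped at capacity
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


buckets: dict[str, TokenBucket] = {}


def authorize_request(client_id: str, presented_token: str) -> int:
    """Return an HTTP-style status code: 200 (ok), 401 (bad token), or 429 (rate limited)."""
    expected = API_TOKENS.get(client_id)
    # compare_digest avoids leaking token contents through timing differences
    if expected is None or not hmac.compare_digest(expected.encode(), presented_token.encode()):
        access_log.warning("auth_failed client=%s", client_id)
        return 401
    bucket = buckets.setdefault(client_id, TokenBucket(capacity=5, rate=1.0))
    if not bucket.allow():
        access_log.warning("rate_limited client=%s", client_id)
        return 429
    access_log.info("auth_ok client=%s", client_id)
    return 200
```

With a burst capacity of 5 and a refill rate of one token per second, a sixth back-to-back request from the same client receives 429. Failed authentications are logged but do not consume the client's bucket.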

2. Information Disclosure

Error messages and debug output revealed sensitive app internals.

Solution:

Configured generic error handling to prevent information leaks.
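One common way to apply generic error handling is a wrapper around each request handler. The wrapper logs the full exception server-side and returns only a generic message plus a correlation ID to the caller. The sketch below assumes plain Python handlers that return dicts. `safe_errors` and the sample `predict` handler are hypothetical names used only for illustration.

```python
import functools
import logging
import uuid

logger = logging.getLogger("app.errors")


def safe_errors(handler):
    """Decorator: log full exception details server-side, return a generic message to the client."""

    @functools.wraps(handler)
    def wrapper(*args, **kwargs):
        try:
            return handler(*args, **kwargs)
        except Exception:
            # The correlation ID lets support staff find the full traceback in server logs
            error_id = uuid.uuid4().hex
            logger.exception("unhandled error id=%s", error_id)
            return {"status": 500, "error": "An internal error occurred.", "error_id": error_id}

    return wrapper


@safe_errors
def predict(payload: dict) -> dict:
    # Hypothetical handler that would otherwise surface a raw KeyError to the client
    model_name = payload["model"]
    return {"status": 200, "model": model_name}
```

Calling `predict({})` returns a 500 response with no exception type, stack trace, or file path in it. The details remain available in the server log under the same `error_id`.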

3. Insufficient Input Validation / Prompt Injection

AI endpoints were susceptible to prompt injection and malformed input, which could let attackers manipulate model outputs.

Solution:

Applied strict input validation, output encoding, and detection of suspicious queries.
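The sketch below covers the three measures described above. It validates and normalizes the prompt, flags suspicious phrasing, and HTML-escapes model output before display. It assumes a 2,000-character limit and a small pattern list. Both are hypothetical, as are the function names. A pattern list is only a heuristic, because attackers can rephrase, so a match should be treated as a signal for logging or review rather than as proof of an attack.

```python
import html
import re
import unicodedata

MAX_PROMPT_CHARS = 2000  # hypothetical limit; tune to the application's needs

# Heuristic patterns only: matches are signals, not a complete defense
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (your|the) (system prompt|instructions)", re.IGNORECASE),
    re.compile(r"you are now (in )?developer mode", re.IGNORECASE),
]


def validate_prompt(text: str) -> tuple[str, bool]:
    """Return (cleaned_text, is_suspicious); raise ValueError on malformed input."""
    if not isinstance(text, str) or not text.strip():
        raise ValueError("prompt must be a non-empty string")
    if len(text) > MAX_PROMPT_CHARS:
        raise ValueError("prompt too long")
    # Normalize Unicode so compatibility variants compare consistently
    text = unicodedata.normalize("NFKC", text)
    # Drop control characters except newline and tab
    cleaned = "".join(ch for ch in text if ch in "\n\t" or unicodedata.category(ch) != "Cc")
    suspicious = any(p.search(cleaned) for p in SUSPICIOUS_PATTERNS)
    return cleaned, suspicious


def encode_output(model_output: str) -> str:
    """HTML-escape model output before rendering it in a web page."""
    return html.escape(model_output)
```

Escaping output matters because a successful injection can make the model emit markup. Encoding the output means that markup is displayed as text instead of being executed in the user's browser.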

4. Adversarial Input Risks

Model responses could be manipulated using crafted adversarial queries.

Solution:

Integrated adversarial input filters, enhanced model robustness, and enabled monitoring for anomalous activity.
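An adversarial input filter can start with cheap structural checks that run before a request reaches the model. The sketch below flags three traits that often appear in crafted inputs: invisible Unicode format characters, very long single-character runs, and symbol-heavy gibberish. The thresholds (20-character runs, a 40% symbol ratio) and the names `screen_adversarial` and `FilterResult` are hypothetical assumptions, not values from the engagement. Such heuristics reduce noise but do not replace model-level robustness measures.

```python
import re
import unicodedata
from dataclasses import dataclass, field

REPEAT_RUN = re.compile(r"(.)\1{19,}")  # the same character 20 or more times in a row


@dataclass
class FilterResult:
    allowed: bool
    reasons: list[str] = field(default_factory=list)


def screen_adversarial(text: str, max_symbol_ratio: float = 0.4) -> FilterResult:
    """Flag inputs with traits common in crafted adversarial text (heuristic thresholds)."""
    reasons = []
    # Invisible format characters (zero-width spaces, bidi overrides) can hide content from reviewers
    if any(unicodedata.category(ch) == "Cf" for ch in text):
        reasons.append("invisible_format_chars")
    # Long runs of one character can be used to pad or flood the input
    if REPEAT_RUN.search(text):
        reasons.append("long_repeated_run")
    # Optimized attack strings often look like dense punctuation and symbols
    visible = [ch for ch in text if not ch.isspace()]
    if visible:
        symbols = sum(1 for ch in visible if not ch.isalnum())
        if symbols / len(visible) > max_symbol_ratio:
            reasons.append("high_symbol_ratio")
    return FilterResult(allowed=not reasons, reasons=reasons)
```

Returning the list of reasons, rather than a bare yes or no, also feeds the anomaly monitoring mentioned above, because each rejected request can be logged with the rule that caught it.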

5. Data Poisoning Threats

The model could be compromised by tampered or malicious training data.

Solution:

Recommended secure data pipelines, integrity checks, and regular model validation.
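A simple integrity check records a SHA-256 hash for every file when a dataset is approved. It then verifies those hashes before each training run. The sketch below assumes the training data is a directory of files. `build_manifest` and `verify_manifest` are hypothetical helper names.

```python
import hashlib
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 so large datasets don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict[str, str]:
    """Record a hash for every file when the dataset is approved for training."""
    return {
        str(p.relative_to(data_dir)): sha256_file(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def verify_manifest(data_dir: Path, manifest: dict[str, str]) -> list[str]:
    """Return files that were modified, added, or removed since the manifest was built."""
    current = build_manifest(data_dir)
    # Modified files have a different hash; removed files are missing from `current`
    changed = [name for name, h in manifest.items() if current.get(name) != h]
    added = [name for name in current if name not in manifest]
    return sorted(changed + added)
```

A training job would refuse to start if `verify_manifest` returns a non-empty list. Store or sign the manifest somewhere the data pipeline cannot write to. Otherwise, an attacker who can alter the data can also alter the hashes.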

6. Insecure Data Storage

Sensitive data lacked adequate encryption and access controls.

Solution:

Implemented encryption at rest and in transit, with strict permission controls.
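Encryption at rest and in transit is usually provided by the database, the storage service, and the TLS configuration rather than by application code, so this sketch covers only the permission side. It is a deny-by-default role check, in which any role, resource, or action not explicitly granted is refused. The roles, resource names, and `POLICY` table are hypothetical examples.

```python
from enum import Enum


class Action(Enum):
    READ = "read"
    WRITE = "write"
    DELETE = "delete"


# Hypothetical policy: role -> resource type -> allowed actions. Anything not listed is denied.
POLICY: dict[str, dict[str, set[Action]]] = {
    "support": {"customer_profile": {Action.READ}},
    "ml_engineer": {"training_data": {Action.READ, Action.WRITE}},
    "admin": {
        "customer_profile": {Action.READ, Action.WRITE, Action.DELETE},
        "training_data": {Action.READ, Action.WRITE, Action.DELETE},
    },
}


def is_allowed(role: str, resource: str, action: Action) -> bool:
    """Deny by default: unknown roles, resources, or actions are all refused."""
    return action in POLICY.get(role, {}).get(resource, set())
```

Defaulting to an empty set means that a missing or mistyped policy entry fails closed rather than open.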

7. Lack of Security Monitoring

No monitoring or alerting for suspicious or unauthorized actions.

Solution:

Established real-time security monitoring and defined incident response procedures.
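Real-time monitoring usually runs in a SIEM or log pipeline, but the core of a detection rule fits in a few lines. The sketch below raises an alert when a single source exceeds a threshold of failed logins within a sliding time window. `FailedLoginMonitor`, the threshold, and the window are hypothetical. The `alert` callback is where a notification or incident-response ticket would be triggered. The clock is injectable so the rule can be tested deterministically.

```python
import time
from collections import defaultdict, deque
from typing import Callable


class FailedLoginMonitor:
    """Alert when one source exceeds `threshold` failed logins within `window` seconds."""

    def __init__(self, threshold: int, window: float, alert: Callable[[str, int], None],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.threshold = threshold
        self.window = window
        self.alert = alert
        self.clock = clock
        self.events = defaultdict(deque)  # source -> timestamps of recent failures

    def record_failure(self, source: str) -> None:
        now = self.clock()
        q = self.events[source]
        q.append(now)
        # Drop events that have aged out of the sliding window
        while q and now - q[0] > self.window:
            q.popleft()
        if len(q) > self.threshold:
            self.alert(source, len(q))
```

As written, the alert fires on every failure past the threshold. A production rule would typically deduplicate alerts per source and forward them to the on-call channel defined in the incident response procedures.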

Results & Client Feedback

The client received a detailed, prioritized remediation roadmap and implemented fixes with our guidance. They gave a 5-star review, praising the depth and clarity of the testing:

“Very thoroughly done.”

Why AI Applications Need Specialized Security

- Prompt injection and manipulation can leak data or control outputs

- Model theft threatens intellectual property

- Adversarial and poisoned inputs degrade trust and reliability

- Sensitive data exposure increases privacy and compliance risks

Proactive security assessment and remediation are vital.

Explore our AI Application Cybersecurity Services

Did you know? We now offer Managed IT Services—a comprehensive monthly solution for hosting, helpdesk, and IT security, all in one package.

Take Action Now

Want to secure your app? Get a free security check, or partner with us to offer cybersecurity to your clients.

Discover More Case Studies

Explore our latest success stories and insights in application security. Check out our recent case study on a real-world security engagement on our blog for more details on how we help businesses stay secure.

Visit Our Partner Site: Cybersrely.com

For even more cybersecurity resources, industry news, and advanced solutions, visit our sister site Cybersrely.com and stay ahead of cyber threats in today’s digital landscape.

Stay informed:

Read our recent blog post on Weak API Authentication in Laravel for up-to-date security trends, technical guidance, and expert case studies.

Contact Pentest Testing Corp today to protect your AI-powered business from evolving cyber threats.