7 Powerful AI Cloud Security Risks Pentests Miss

AI features in the cloud (LLM copilots, agent workflows, RAG pipelines, managed AI services) are expanding attack surfaces faster than most security programs can scope. The problem isn’t that penetration testing is outdated—it’s that many “traditional” pentests stop at app endpoints and miss AI cloud security risks rooted in cloud identity, control-plane authorization, and agent tool execution.

This guide shows how penetration testing must evolve to assess AI-augmented cloud threat vectors—without turning the engagement into an endless cloud audit. It includes practical, real-world checks and code examples you can run in authorized environments.

For a step-by-step approach to SEC cyber disclosure, including a ready-to-use 8-K evidence pack structure and materiality workflow, see our SEC cyber disclosure 8-K playbook.

The 6 most common AI cloud security risks we see

| Risk vector | What to validate in a pentest | Practical fix |

|---|---|---|

| Over-privileged non-human identities (NHI) | Effective permissions, role chaining, token exposure, workload identity paths | Least privilege, scoped trust, deny wildcards, rotate/limit tokens |

| Misconfigured AI service APIs | AuthZ, network exposure, endpoint permissions, logging, rate controls | Private endpoints, IAM conditions, per-tenant AuthZ, audit trails |

| Agent tool/function abuse | Allowlist enforcement, per-action AuthZ, schema validation | Deny-by-default tool gate, strict schemas, safe parameterization |

| RAG/vector-store data leakage | Index access controls, object storage policies, tenant isolation | Prefix allowlists, namespaces, encryption, query controls |

| Secrets in prompts/logs/traces | Data flows, retention, export paths, console access | Redaction, tight retention, least-priv console roles, DLP rules |

| CI/CD + IaC misconfigs powering AI | Unsafe defaults, permissive modules, missing policy gates | Policy-as-code in CI, secure modules, mandatory reviews |

What changed with AI in the cloud

AI workloads introduce new risk vectors that classic scopes often under-test:

- Non-human identity sprawl: service accounts, IAM roles, managed identities, workload identities, API keys, and short-lived tokens powering AI pipelines.

- New control-plane APIs: managed AI services, model endpoints, vector databases, and data connectors—each with its own permissions and logging.

- Agent toolchains: LLMs calling tools (functions) to query systems, deploy infra, run workflows, or touch sensitive business processes.

- RAG data exposure: embeddings + retrieval corpora + object storage misconfigs can leak “private context.”

- Prompt injection meets permissions: indirect prompt injection + confused-deputy behavior becomes dangerous when agents have write access.

- Telemetry/training data risk: prompts, outputs, and traces stored in logs/data lakes/vendor consoles—often outside expected retention/compliance controls.

Why traditional pentests miss AI cloud security risks

Traditional penetration testing is usually optimized for web endpoints and classic vulnerability classes. It can miss AI cloud security risks when:

- Scope is limited to “what’s on the internet” and ignores cloud control-plane permissions, IaC, and identity graphs.

- Findings stop at “vulnerability exists” without validating cloud blast radius (what a compromised identity can access).

- Non-human identities are treated as implementation details instead of prime attack targets.

- Agentic AI is tested as a chatbot UI, not as a privileged automation layer with tools + secrets + data connectors.

- RAG pipelines are tested for availability/accuracy, not confidentiality boundaries and tenant isolation.

Modernize the scope: what to add to your next engagement

To reliably identify AI cloud security risks, expand scope to include:

- AI asset inventory: model endpoints, vector stores, prompt logs, connectors, orchestration.

- Non-human identity (NHI) inventory: service accounts, IAM roles, managed identities, workload identity bindings.

- Permission graph testing: assume-role/impersonation chains, secret read paths, decrypt paths, cross-env pivots.

- AI-specific abuse paths: direct + indirect prompt injection, tool/function misuse, RAG data exfiltration.

- Exploit-proof impact: safe validation that maps misconfigs to real outcomes (then stop at minimal evidence).

- Compliance mapping: convert findings into audit-ready evidence + remediation plan.

If you need help scoping and executing these engagements:

- Cloud Pentest Testing: https://www.pentesttesting.com/cloud-pentest-testing/

- Cybersecurity for AI Application: https://www.pentesttesting.com/ai-application-cybersecurity/

- API Pentest Testing: https://www.pentesttesting.com/api-pentest-testing-services/

- Risk Assessment Services: https://www.pentesttesting.com/risk-assessment-services/

- Remediation Services: https://www.pentesttesting.com/remediation-services/

A practical pentest workflow for AI cloud security risks

Step 1) Build an AI + non-human identity inventory

Start with an inventory that’s testable and repeatable. You don’t need a perfect CMDB—you need a living list of what can be attacked.

# ai_cloud_inventory.yaml

- id: AI-CLOUD-APP-001

name: Support Copilot

environments: [prod, staging]

ai_services: ["model-endpoint", "vector-store", "tool-runner"]

data_domains: ["tickets", "customer-notes", "PII-light"]

non_human_identities:

- type: iam_role

name: support-copilot-prod-role

- type: service_account

name: [email protected]

owners: ["Security", "Engineering"]Auto-discover AI usage in repos (quick helper)

# discover_ai_usage.py

from pathlib import Path

import re

PATTERNS = [

r"/v1/chat/completions",

r"openai\.",

r"vertexai\.generative",

r"bedrock",

r"azure\.openai",

r"vector",

r"embedding",

r"tool_call",

]

def scan(root="."):

hits = []

for p in Path(root).rglob("*.*"):

if p.suffix.lower() not in {".py",".js",".ts",".java",".go",".cs"}:

continue

text = p.read_text(encoding="utf-8", errors="ignore")

for pat in PATTERNS:

if re.search(pat, text, re.IGNORECASE):

hits.append((str(p), pat))

break

return hits

if __name__ == "__main__":

for f, pat in scan("."):

print(f"[AI] {f} (matched {pat})")Step 2) Find cloud identity misconfigurations (the “real” AI risk layer)

Non-human identity security is the highest-ROI area in AI risk penetration testing. Prioritize:

- Wildcard actions/resources (

*,service:*) - Role chaining: AssumeRole / impersonation / PassRole paths

- Secret read and decrypt permissions (Secrets Manager / Key Vault / Secret Manager + KMS/Key Vault keys)

- Broad access to RAG corpora storage, vector indexes, data lakes, snapshots

AWS: simulate blast radius for sensitive actions

# Authorized account only

aws iam simulate-principal-policy \

--policy-source-arn arn:aws:iam::<ACCOUNT_ID>:role/<ROLE_NAME> \

--action-names iam:PassRole sts:AssumeRole kms:Decrypt s3:GetObject secretsmanager:GetSecretValue \

--output tablePolicy red-flag scanner (defensive)

# iam_policy_red_flags.py

import json

import glob

SUSPICIOUS_ACTIONS = {

"iam:PassRole",

"sts:AssumeRole",

"sts:AssumeRoleWithWebIdentity",

"kms:Decrypt",

"secretsmanager:GetSecretValue",

"ssm:GetParameter",

"ssm:GetParameters",

}

def is_wildcard(value: str) -> bool:

return value == "*" or value.endswith(":*")

def scan_statement(stmt: dict) -> list[str]:

flags = []

actions = stmt.get("Action") or stmt.get("NotAction") or []

resources = stmt.get("Resource") or []

if isinstance(actions, str): actions = [actions]

if isinstance(resources, str): resources = [resources]

if any(is_wildcard(a) for a in actions):

flags.append("WILDCARD_ACTION")

if any(r == "*" for r in resources):

flags.append("WILDCARD_RESOURCE")

if any(a in SUSPICIOUS_ACTIONS for a in actions):

flags.append("SENSITIVE_ACTION")

return flags

def scan_policy(path: str) -> list[dict]:

data = json.load(open(path, "r", encoding="utf-8"))

stmts = data.get("Statement", [])

if isinstance(stmts, dict): stmts = [stmts]

findings = []

for i, s in enumerate(stmts):

flags = scan_statement(s)

if flags:

findings.append({"file": path, "statement_index": i, "flags": flags, "effect": s.get("Effect")})

return findings

if __name__ == "__main__":

all_findings = []

for f in glob.glob("**/*.json", recursive=True):

try:

all_findings.extend(scan_policy(f))

except Exception:

pass

for f in all_findings:

print(f"{f['file']} stmt#{f['statement_index']}: {', '.join(f['flags'])} (Effect={f['effect']})")GCP: flag high-priv bindings on service accounts

# gcp_high_priv_sa.py

import json, sys

policy = json.load(sys.stdin) # pipe: gcloud projects get-iam-policy <PROJECT_ID> --format=json

HIGH = {"roles/owner", "roles/editor"}

for b in policy.get("bindings", []):

if b.get("role") in HIGH:

for m in b.get("members", []):

if m.startswith("serviceAccount:"):

print(f"[HIGH] {b['role']} -> {m}")Azure: spot service principals with broad roles

az role assignment list --all \

--query "[?principalType=='ServicePrincipal'].{sp:principalName, role:roleDefinitionName, scope:scope}" \

--output tableStep 3) Test LLM-backed abuse paths (tool/function misuse)

Agents turn prompts into actions. Treat tool execution as an authorization problem, not a prompt problem.

Anti-pattern

# BAD: user text can steer tool invocation

def handle_user_message(user_text, agent):

tool_name, args = agent.decide_tool(user_text)

return agent.run_tool(tool_name, **args)Safer pattern: deny-by-default allowlist + schema validation + per-action authZ

# safer_tool_gate.py

from pydantic import BaseModel, ValidationError

ALLOWED_TOOLS = {"search_kb", "create_ticket", "read_status"} # deny-by-default

class CreateTicketArgs(BaseModel):

title: str

severity: str

system: str

def authorize(user, tool_name: str) -> bool:

if tool_name == "create_ticket":

return user.role in {"secops", "admin"}

return True

def run_tool_safely(user, agent, tool_name: str, args: dict):

if tool_name not in ALLOWED_TOOLS:

raise PermissionError("Tool not allowed")

if not authorize(user, tool_name):

raise PermissionError("User not authorized for this tool")

if tool_name == "create_ticket":

try:

args = CreateTicketArgs(**args).dict()

except ValidationError as e:

raise ValueError(f"Invalid args: {e}")

return agent.run_tool(tool_name, **args)Add structured audit logging for tool calls

# tool_audit_log.py

import json, time, uuid

def log_tool_call(user_id, tool_name, args, decision, reason=None):

event = {

"event_id": str(uuid.uuid4()),

"ts": int(time.time()),

"user_id": user_id,

"tool": tool_name,

"args": args,

"decision": decision, # allow/deny

"reason": reason,

}

print(json.dumps(event)) # send to your log pipelineStep 4) Integrate risk data into real exploit scenarios (safely)

Stakeholders care about impact. The best pentests prove that an AI cloud security risk can become real compromise—then stop at minimal evidence.

Example abuse-path pattern (anonymized):

- A CI runner identity or AI job role has overly broad permissions (wildcards + PassRole).

- A foothold is gained (misconfigured workflow / exposed token / weak service endpoint).

- The attacker assumes a higher-priv role and reaches sensitive storage (object store, snapshots, vector index).

- A small approved sample proves impact; the test stops.

CloudTrail/Athena query idea to detect privilege operations

-- Adjust table/JSON parsing to your CloudTrail schema

SELECT

eventtime,

useridentity.arn AS actor,

eventsource,

eventname,

sourceipaddress

FROM cloudtrail_logs

WHERE eventsource IN ('iam.amazonaws.com','sts.amazonaws.com','kms.amazonaws.com')

AND eventname IN ('PassRole','AssumeRole','CreatePolicyVersion','PutRolePolicy','Decrypt')

ORDER BY eventtime DESC

LIMIT 200;Example S3 bucket policy hardening for RAG corpora (prefix allowlist)

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "AllowRAGIngestRolePrefixOnly",

"Effect": "Allow",

"Principal": { "AWS": "arn:aws:iam::<ACCOUNT_ID>:role/rag-ingest-role" },

"Action": ["s3:GetObject","s3:PutObject","s3:ListBucket"],

"Resource": [

"arn:aws:s3:::rag-corpus-bucket",

"arn:aws:s3:::rag-corpus-bucket/tenant-a/*"

],

"Condition": {

"StringLike": { "s3:prefix": ["tenant-a/*"] }

}

}

]

}Step 5) Map findings back to compliance and audit evidence

AI cloud security risks are easier to fix when findings map to controls, owners, and measurable remediation steps.

# example_finding.yaml

finding_id: AI-CLOUD-001

title: Over-privileged non-human identity enables data access pivot

risk: High

asset: "AI batch job role (prod)"

vector: "cloud identity misconfiguration"

evidence:

- "Role policy allows sts:AssumeRole and kms:Decrypt with broad resources"

- "Role can access sensitive bucket prefix used by RAG ingestion"

impact: "Confidential data exposure via assumed role pivot"

recommendations:

- "Reduce IAM actions to least privilege (deny wildcards)"

- "Restrict AssumeRole targets with conditions (ExternalId, PrincipalArn, tags)"

- "Enforce KMS key policies and bucket policies (prefix allowlist)"

- "Add CI policy-as-code gate to block wildcards in IAM"

retest: "Verify removed access and attempt pivot again in staging"

owner: "Cloud Security"

due_date: "30 days"To connect pentest findings to audit-ready evidence and fix-first execution:

- Risk Assessment Services: https://www.pentesttesting.com/risk-assessment-services/

- Remediation Services: https://www.pentesttesting.com/remediation-services/

Real-time solution: prevent AI cloud security risks from returning

One-off testing isn’t enough for fast AI releases. Pair modern pentests with policy-as-code gates in CI/CD.

Block wildcard IAM actions in Terraform (OPA/Conftest)

# policy/deny_wildcards.rego

package terraform.security

deny[msg] {

input.resource_changes[_].type == "aws_iam_policy"

some s

stmt := input.resource_changes[_].change.after.policy.Statement[s]

action := stmt.Action[_]

action == "*"

msg := "IAM policy contains wildcard Action '*'"

}

deny[msg] {

input.resource_changes[_].type == "aws_iam_policy"

some s

stmt := input.resource_changes[_].change.after.policy.Statement[s]

resource := stmt.Resource[_]

resource == "*"

msg := "IAM policy contains wildcard Resource '*'"

}# .github/workflows/iac-policy-check.yml

name: IaC policy check

on: [pull_request]

jobs:

conftest:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v4

- name: Install conftest

run: |

curl -L -o conftest.tar.gz https://github.com/open-policy-agent/conftest/releases/latest/download/conftest_0.55.0_Linux_x86_64.tar.gz

tar -xzf conftest.tar.gz conftest

sudo mv conftest /usr/local/bin/

- name: Terraform plan (example)

run: terraform show -json plan.out > plan.json

- name: Run policy checks

run: conftest test plan.json -p policy/Case example (anonymized): AI-enabled misconfig + exploitation pattern

Pattern: A support copilot can run a “report” tool. The agent runs under a role that can decrypt too many keys and read from a shared data lake used for RAG. An attacker uses indirect prompt injection (e.g., instructions hidden in an attachment) to coerce a report run with attacker-controlled parameters.

What a modern pentest validates (and fixes):

- Tool gate is deny-by-default with per-action authorization + strict schema validation.

- Agent identity is least-priv and cannot read raw data lake objects (only tenant-scoped prefix).

- KMS/key policies prevent decrypt outside intended principals.

- Logs prove who triggered the tool call, what was accessed, and what was returned.

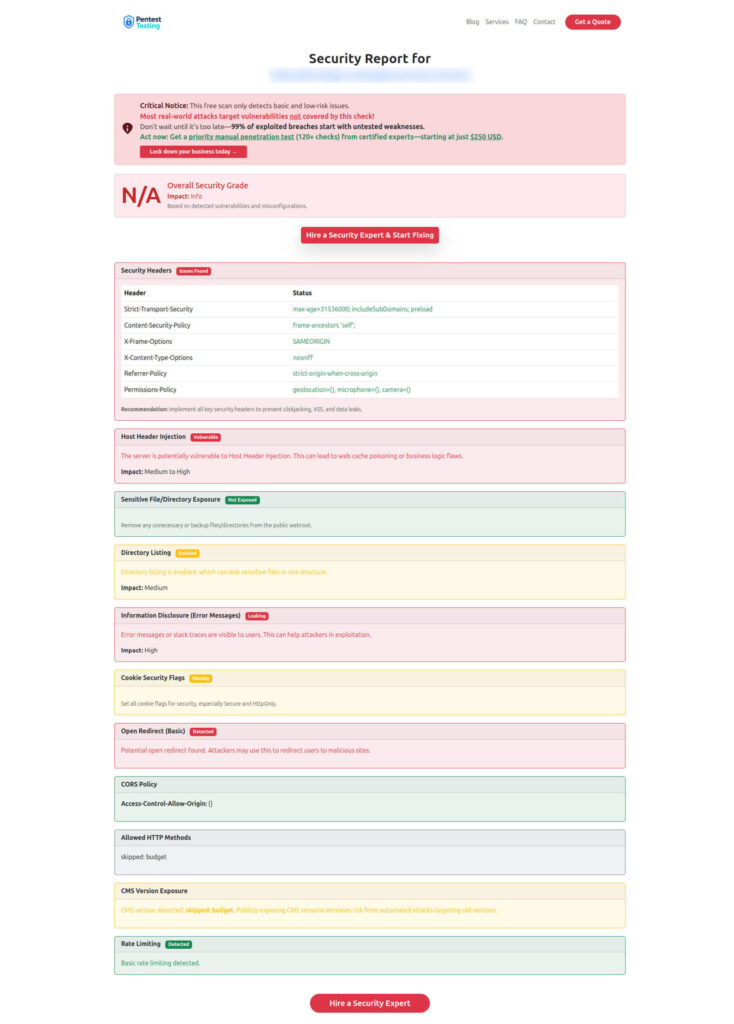

Add a quick baseline scan with our free tool (screenshots)

Even when AI cloud security risks are the main focus, classic web vulnerabilities still create footholds attackers chain into cloud identity and AI abuse paths.

Free Website Vulnerability Scanner: https://free.pentesttesting.com/

Free Website Vulnerability Scanner tool Dashboard

Sample assessment report to check Website Vulnerability

Next steps: evolve engagements, scope for future threats

If you’re adopting AI in cloud services, treat AI cloud security risks as first-class pentest targets: identity, data paths, AI service APIs, agent tool safety, and RAG confidentiality boundaries.

Modernize your security testing to cover cloud AI risk surfaces:

- Learn more: https://www.pentesttesting.com/

- Explore insights: https://www.pentesttesting.com/blog/

- Tie risk findings to mitigation: https://www.pentesttesting.com/risk-assessment-services/ and https://www.pentesttesting.com/remediation-services/

Related reading from our blog

- https://www.pentesttesting.com/ai-red-teaming-steps/

- https://www.pentesttesting.com/eu-ai-act-soc-2/

- https://www.pentesttesting.com/hipaa-ai-risk-assessment-sprint/

- https://www.pentesttesting.com/ai-voice-fraud-and-deepfake-payments/

- https://www.pentesttesting.com/multi-tenant-saas-breach-containment/

🔐 Frequently Asked Questions (FAQs)

Find answers to commonly asked questions about AI Cloud Security Risks.