EU Data Act Remediation: 60-Day Rapid Plan

Why this matters now

The EU Data Act has applied since 12 September 2025, and enforcement expectations will only rise once the connected-product obligations under Article 3(1) start to apply on 12 September 2026. If you run data-sharing APIs, rely on cloud/edge providers, or ship connected products, the clock is already ticking. This 60-day EU Data Act remediation plan shows how to harden data-sharing API security, prepare for cloud switching compliance, and assemble an evidence pack that stands up during due diligence and audits.

Who’s impacted & when (quick recap)

- Data holders that expose data via APIs to users or third parties.

- Cloud and edge providers are expected to support fair switching and portability.

- Connected-product makers & related services (with Article 3(1) product scope applying from 12 Sept 2026).

If that’s you, the next 60 days are for eliminating “known-unknowns,” raising control maturity, and proving it with artifacts.

Your 60-Day EU Data Act Remediation Plan (audit-ready)

Day 0–5: Baseline & scope

- Inventory all data-sharing API endpoints and users (first/third party).

- Map data categories, purposes, consent/contractual bases, and tenants.

- Identify current cloud regions/services and exit constraints.

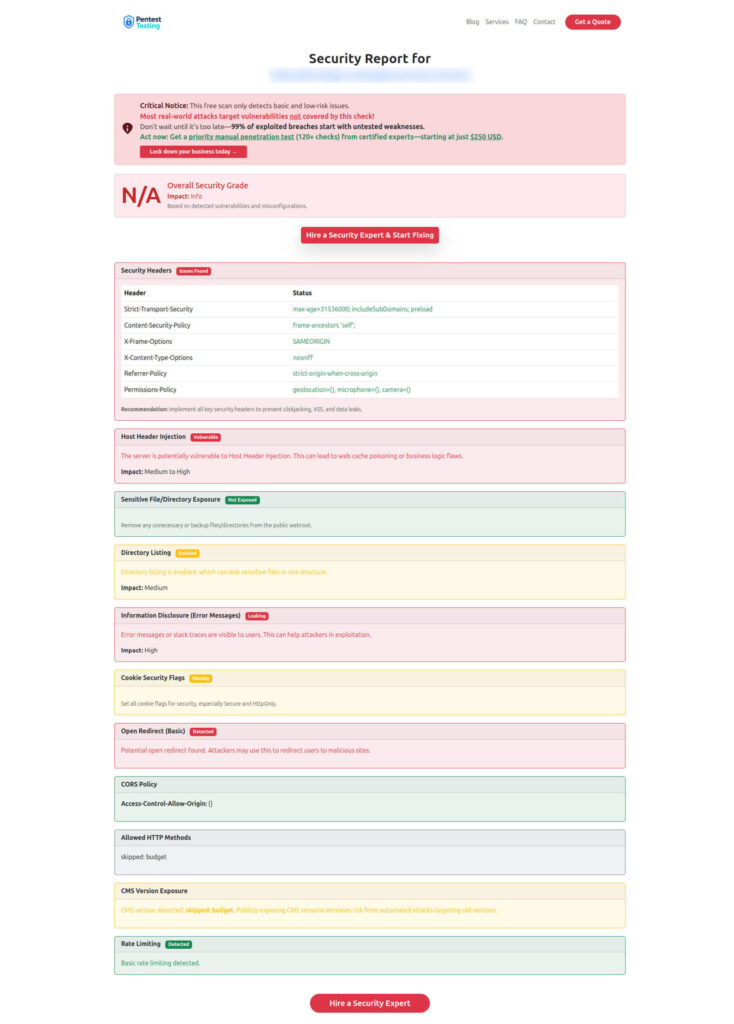

- Run a free external exposure sweep with our tool to catch easy wins. Convert findings into 30/60/90-day tasks.

Free Website Vulnerability Scanner – Landing Page
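If your APIs are already described in OpenAPI, the endpoint inventory from the baseline steps above can be seeded from the spec. The sketch below is a minimal illustration, assuming the spec has been loaded into a Python dict; the `inventory_endpoints` helper, the `SPEC` fragment, and the `/v1/customers` path are hypothetical and only there to show the shape of the output.

```python
from typing import Any

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options"}


def inventory_endpoints(spec: dict[str, Any]) -> list[dict[str, Any]]:
    """Flatten an OpenAPI 'paths' object into one row per (method, path)."""
    rows = []
    for path, item in sorted(spec.get("paths", {}).items()):
        for method, operation in item.items():
            if method.lower() not in HTTP_METHODS:
                continue  # skip non-operation keys such as "parameters"
            header_names = [
                p["name"]
                for p in operation.get("parameters", [])
                if p.get("in") == "header"
            ]
            rows.append({
                "method": method.upper(),
                "path": path,
                # flags endpoints that do not yet document a purpose header
                "declares_purpose": "x-processing-purpose" in header_names,
            })
    return rows


# Hypothetical spec fragment, for illustration only
SPEC = {
    "paths": {
        "/v1/invoices": {
            "get": {"parameters": [{"in": "header", "name": "x-processing-purpose"}]},
        },
        "/v1/customers": {"get": {}, "post": {}},
    }
}

if __name__ == "__main__":
    for row in inventory_endpoints(SPEC):
        print(row)
```

Rows where `declares_purpose` is false are natural candidates for the first remediation tasks. Endpoints that are missing from the spec entirely will not show up here, so treat this as a starting point rather than a complete inventory.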

Day 6–20: Remediate data-sharing APIs (prove it with evidence)

Objectives: purpose binding, tenant isolation, least privilege, rate-limiting, change control, and retesting—mapped to clauses/evidence in your evidence pack.

1) Enforce purpose binding at the API layer

FastAPI example (Python), annotating and enforcing allowed purposes per client/app:

from fastapi import Depends, FastAPI, HTTPException, Request

app = FastAPI()

# Static registry or DB table mapping client_id -> allowed_purposes
CLIENT_PURPOSES = {
    "partner_app_123": {"billing_reconciliation", "analytics_aggregated"},
    "internal_tool_007": {"customer_support"},
}

def purpose_guard(request: Request):
    # NOTE: x-client-id is client-supplied here for brevity; in production,
    # derive the client identity from a verified token or mTLS certificate.
    client_id = request.headers.get("x-client-id")
    purpose = request.headers.get("x-processing-purpose")
    if not client_id or not purpose:
        raise HTTPException(status_code=400, detail="Missing client or purpose")
    allowed = CLIENT_PURPOSES.get(client_id, set())
    if purpose not in allowed:
        raise HTTPException(status_code=403, detail="Purpose not permitted")
    return {"client_id": client_id, "purpose": purpose}

@app.get("/v1/invoices")
def list_invoices(ctx=Depends(purpose_guard)):
    # downstream services can read ctx["purpose"] for audit logs & DLP
    return {"status": "ok"}

OpenAPI contract snippet (document the x-processing-purpose header):

openapi: 3.0.3
info:
  title: Data-Sharing API
  version: "1.0"
paths:
  /v1/invoices:
    get:
      summary: List invoices
      parameters:
        - in: header
          name: x-processing-purpose
          required: true
          schema:
            type: string
            enum: [billing_reconciliation, analytics_aggregated, customer_support]
          description: Declares the allowed processing purpose for this request.
      responses:
        "200": { description: OK }

2) Enforce tenant isolation at DB level (PostgreSQL RLS)

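-- Precondition: each row has tenant_id; session sets current_setting('app.tenant_id')
ALTER TABLE invoice ENABLE ROW LEVEL SECURITY;
-- Table owners bypass RLS unless it is forced
ALTER TABLE invoice FORCE ROW LEVEL SECURITY;

CREATE POLICY tenant_isolation ON invoice
    USING (tenant_id = current_setting('app.tenant_id')::uuid);

-- App sets tenant_id per request (from verified token/claim).
-- is_local = true scopes the setting to the current transaction, so pooled
-- connections do not carry one tenant's context into the next request:
-- SELECT set_config('app.tenant_id', '<tenant-uuid>', true);

3) Rate limiting at the edge (NGINX)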

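# 100 requests/min per API key (limit_req_zone belongs in the http context).
# Requests without an X-API-Key header produce an empty key and are not
# limited, so reject them elsewhere or fall back to $binary_remote_addr.
limit_req_zone $http_x_api_key zone=api_key_zone:10m rate=100r/m;

server {
    location /v1/ {
        limit_req zone=api_key_zone burst=50 nodelay;
        limit_req_status 429;  # NGINX answers 503 by default
        proxy_pass http://app_upstream;
    }
}

4) Change control with signed config and peer review (GitHub Actions gate)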

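name: change-control
on: [pull_request]

jobs:
  enforce:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
        with:
          # check out the PR head commit, not GitHub's synthetic merge commit
          ref: ${{ github.event.pull_request.head.sha }}
      - name: Verify signed commits
        # assumes the signers' public keys are already in the runner's GPG keyring
        run: git verify-commit HEAD
      - name: Require 2 approving reviews
        env:
          GH_TOKEN: ${{ github.token }}  # the gh CLI needs a token
        run: |
          # jq -e exits non-zero when the expression is false, failing the job
          gh pr view ${{ github.event.pull_request.number }} --json reviews | jq -e \
            '[.reviews[] | select(.state=="APPROVED")] | length >= 2'

5) Retest and attach proof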

- Re-scan public endpoints after each change (delta scans + manual checks).

- Export logs: “request purpose header,” “tenant id claim,” “rate-limit hits.”

- Save diffs (before/after) and attach test notes to your evidence pack.

Vuln Scan Report – Sample Findings to check Website Vulnerability
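The log exports listed above are easier to review when they are condensed into a few numbers. Here is a rough sketch, assuming your gateway or app writes one JSON object per line with `purpose`, `tenant_id`, and `status` fields; those field names, the `summarize` helper, and the `SAMPLE` records are assumptions for illustration, not a format the plan prescribes. The rate-limit status is a parameter because NGINX answers throttled requests with 503 unless `limit_req_status` is set.

```python
import json
from collections import Counter


def summarize(lines, rate_limit_status=429):
    """Condense JSON-lines access logs into evidence-friendly counts."""
    by_purpose = Counter()
    tenants = set()
    throttled = 0
    missing_purpose = 0
    for line in lines:
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        purpose = record.get("purpose")
        if purpose:
            by_purpose[purpose] += 1
        else:
            missing_purpose += 1  # requests that arrived without a declared purpose
        if record.get("tenant_id"):
            tenants.add(record["tenant_id"])
        if record.get("status") == rate_limit_status:
            throttled += 1
    return {
        "requests_by_purpose": dict(by_purpose),
        "distinct_tenants": len(tenants),
        "rate_limit_hits": throttled,
        "missing_purpose": missing_purpose,
    }


# Hypothetical log lines, for illustration only
SAMPLE = [
    '{"purpose": "billing_reconciliation", "tenant_id": "t-1", "status": 200}',
    '{"purpose": "billing_reconciliation", "tenant_id": "t-2", "status": 429}',
    '{"purpose": null, "tenant_id": "t-1", "status": 400}',
]

if __name__ == "__main__":
    print(json.dumps(summarize(SAMPLE), indent=2))
```

Saving one such summary before and one after each change gives you a compact before/after diff to attach alongside the raw logs.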

Day 21–40: Cloud switching & portability (prove you can exit)

Goals: pre-build exit runbooks, dependency maps, and export tests; capture configurations and logs reviewers will ask to see.

Exit runbook (YAML skeleton)

runbook: cloud-exit
scenarios:
  - id: "primary-to-alt-region"
    rpo: "5m"
    rto: "30m"
    steps:
      - "Freeze writes; drain queues"
      - "Export data (S3 + DB snapshot); verify checksums"
      - "Rehydrate in alt region"
      - "Flip read/write endpoints via DNS"
    artifacts:
      - "DB snapshot manifest + checksums"
      - "S3 bucket inventory + lifecycle rules"
      - "IAM diff before/after"
      - "DNS cutover logs"

IAM boundary for export jobs (Terraform)

resource "aws_iam_policy" "export_boundary" {
  name = "export-boundary"

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Sid      = "S3ExportOnly"
        Effect   = "Allow"
        Action   = ["s3:PutObject", "s3:ListBucket", "s3:GetBucketLocation"]
        Resource = ["arn:aws:s3:::data-exports/*", "arn:aws:s3:::data-exports"]
      },
      {
        Sid      = "DenyDangerous"
        Effect   = "Deny"
        Action   = ["s3:DeleteObject", "s3:PutBucketPolicy"]
        Resource = "*"
      }
    ]
  })
}

Kubernetes egress control for export jobs

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: export-egress
spec:
  podSelector:
    matchLabels: { job: data-export }
  policyTypes: ["Egress"]
  egress:
    # Only TCP 443 is allowed; add a DNS (port 53) rule if export pods resolve hostnames
    - to:
        - namespaceSelector: { matchLabels: { name: "infra" } }
        - ipBlock: { cidr: 0.0.0.0/0 } # optionally constrain
      ports: [{ protocol: TCP, port: 443 }]

Evidence you’ll want in the evidence pack

- Exit runbook + last success timestamp.

- Export logs, checksums, and object manifests.

- IAM & network policy diffs.

- Screenshots of a successful restore in the alternate target.
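The checksums and object manifests in that list can be produced and re-verified with a few lines of standard-library Python. This is a minimal sketch, assuming the export has landed in a local directory; `build_manifest`, `verify_manifest`, and the `invoices.csv` file are illustrative names, and a real drill would point at your actual export target.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large exports do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 checksum."""
    return {
        p.relative_to(root).as_posix(): sha256_file(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify_manifest(root: Path, manifest: dict[str, str]) -> list[str]:
    """Return relative paths that are missing or whose checksum differs."""
    problems = []
    for rel, expected in manifest.items():
        target = root / rel
        if not target.is_file() or sha256_file(target) != expected:
            problems.append(rel)
    return problems


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        export_dir = Path(tmp)
        (export_dir / "invoices.csv").write_text("id,amount\n1,10\n")
        manifest = build_manifest(export_dir)
        print(verify_manifest(export_dir, manifest))  # [] while nothing has changed
        (export_dir / "invoices.csv").write_text("id,amount\n1,99\n")
        print(verify_manifest(export_dir, manifest))  # ['invoices.csv'] after tampering
```

Build the manifest at the source before the export, then run the verification against the restored copy in the alternate target; an empty result is the line you want to capture as evidence.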

Day 41–60: Consolidate the Evidence Pack & prepare the retest

What to include (make it “reviewer-friendly”):

- Purpose-binding design, configs, and gateway rules.

- Tenant isolation proof: RLS policies + unit tests.

- Rate-limit configs + logs for throttled attempts.

- Change-control trail: PRs, approvals, signed commits.

- Cloud exit runbook + last validated drill output.

- Vulnerability scans and manual test notes from retests.
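Before handing the pack to a reviewer, it can help to check that every control in the list above has at least one artifact behind it. The following is a sketch only: the `REQUIRED` mapping and its file paths are a hypothetical layout, so adjust them to however your pack is actually organized.

```python
import tempfile
from pathlib import Path

# Hypothetical pack layout: control name -> files expected in the pack
REQUIRED = {
    "purpose binding": ["api/openapi.yaml"],
    "tenant isolation": ["policies/rls.sql"],
    "rate limiting": ["policies/nginx.conf"],
    "change control": ["change_log.txt"],
    "cloud exit": ["runbooks/cloud-exit.yaml"],
    "retests": ["retests.json"],
}


def missing_artifacts(pack_dir: Path, required=REQUIRED) -> dict[str, list[str]]:
    """Return, per control, the expected files that are absent from the pack."""
    gaps = {}
    for control, paths in required.items():
        absent = [p for p in paths if not (pack_dir / p).is_file()]
        if absent:
            gaps[control] = absent
    return gaps


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        pack = Path(tmp)
        (pack / "retests.json").write_text("[]")
        for control, files in missing_artifacts(pack).items():
            print(f"{control}: missing {', '.join(files)}")
```

An empty result means every listed control has its files in place; it says nothing about whether the contents are convincing, which still needs a human read-through.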

Auto-collect artifacts with a simple script:

#!/usr/bin/env bash
set -euo pipefail

OUT="evidence_pack_$(date +%F)"
mkdir -p "$OUT"

cp -r infra/policies "$OUT/"
cp api/openapi.yaml "$OUT/"
# -s (slurp) merges all retest files into one valid JSON array
jq -s '.' logs/retests/*.json > "$OUT/retests.json"
git log --since="60 days ago" --pretty=oneline > "$OUT/change_log.txt"

tar -czf "$OUT.tgz" "$OUT"
echo "Evidence pack created: $OUT.tgz"

Optional DAST gate on retest (GitHub Actions + ZAP baseline):

name: dast-retest
on:
  workflow_dispatch:

jobs:
  zap:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: zaproxy/[email protected]
        with:
          target: ${{ secrets.PUBLIC_BASE_URL }}
          rules_file_name: ".zap/rules.tsv"
          allow_issue_writing: false
      - name: Archive ZAP report
        uses: actions/upload-artifact@v4
        with:
          name: zap-report
          path: report_html.html

Quick start (today)

- Run a light external exposure scan: free.pentesttesting.com → triage headers, cookies, redirects, CORS, and basic leaks.

- Turn findings into a 30/60/90-day backlog mapped to EU Data Act remediation objectives.

- Book a structured risk assessment to prioritize work and align artifacts.

- Engage remediation services to accelerate fixes and assemble your evidence pack.

Services to help you execute (no guesswork)

- Risk Assessment Services — prioritize gaps and build a crisp roadmap (GDPR/ISO/SOC 2 friendly).

- Remediation Services — hands-on fixes with audit-ready artifacts (policy + technical).

- GDPR Risk Assessment & Remediation — if privacy overlaps dominate your scope.

Prefer a package approach for certification-aligned fixes? See ISO 27001 and SOC 2 remediation options as well.

30/60/90-Day Backlog Template (copy/paste)

30 days (critical):

- Purpose headers enforced on all data-sharing APIs; OpenAPI updated.

- RLS tenant isolation is live on the top-3 sensitive tables.

- Rate-limit policies deployed at the gateway + logs exported to SIEM.

- Exit runbook drafted; first data export smoke test done.

- Initial evidence pack folder seeded.

60 days (high):

- Purpose registry tied to client onboarding with approvals.

- Change-control gate (signed commits + 2 reviews) for API surfaces.

- Exit drill executed with restore validation in the alternate region.

- DAST baseline retest attached to PR merges.

90 days (maturing):

- Quarterly exit drill cadence + checksum attestation.

- Purpose-binding unit/integration tests in CI.

- Continuous external exposure checks feeding the risk register.
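To seed this template from scan output, findings can be bucketed by severity. The sketch below follows the labels above for critical (30 days) and high (60 days); sending everything else to the 90-day bucket is an assumption, as are the `Finding` class and the sample titles.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    title: str
    severity: str  # e.g. "critical", "high", "medium", "low"


# critical -> 30 and high -> 60 mirror the template headings;
# every other severity defaults to 90 (an assumption, tune to your risk policy)
HORIZON_DAYS = {"critical": 30, "high": 60}


def build_backlog(findings):
    """Group finding titles into 30/60/90-day buckets by severity."""
    backlog = {30: [], 60: [], 90: []}
    for finding in findings:
        days = HORIZON_DAYS.get(finding.severity.lower(), 90)
        backlog[days].append(finding.title)
    return backlog


if __name__ == "__main__":
    # Hypothetical findings, for illustration only
    findings = [
        Finding("Purpose header not enforced on /v1/invoices", "critical"),
        Finding("No rate limit on partner API", "high"),
        Finding("Verbose server banner", "low"),
    ]
    for days, titles in build_backlog(findings).items():
        print(f"{days} days: {titles}")
```

Severity alone is a blunt instrument, so expect to move items between buckets by hand once effort and dependencies are known.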

Further reading on our blog

- Top 7 Ways to Fix OAuth Misconfiguration in Laravel — practical auth hardening + scanner walk-through.

- Prevent XSSI Attack in Laravel with 7 Powerful Ways — client-side data safety patterns.

- AI App Security Audit: 7 VAPT Reveals & Fixes Critical Risks — real outcomes & artifacts.

Ready to move? (Next steps)

- Run the free scan now → free.pentesttesting.com (attach the results to your backlog).

- Book Risk Assessment Services to prioritize EU Data Act gaps.

- Engage Remediation Services to implement fixes and compile an evidence pack that your stakeholders will trust.

Questions or a tricky requirement you want sanity-checked? Email [email protected]—we’ll help you ship an audit-ready outcome.

🔐 Frequently Asked Questions (FAQs)

Find answers to commonly asked questions about EU Data Act remediation, data-sharing API security & cloud switching compliance.